Behavior recognition method based on deep learning

A behavior recognition technology based on deep learning, applied in the field of computer video recognition. It addresses the problem that the intelligence of video surveillance systems needs to be improved, and it achieves an improved recognition rate and good feature expression ability.


Embodiment Construction

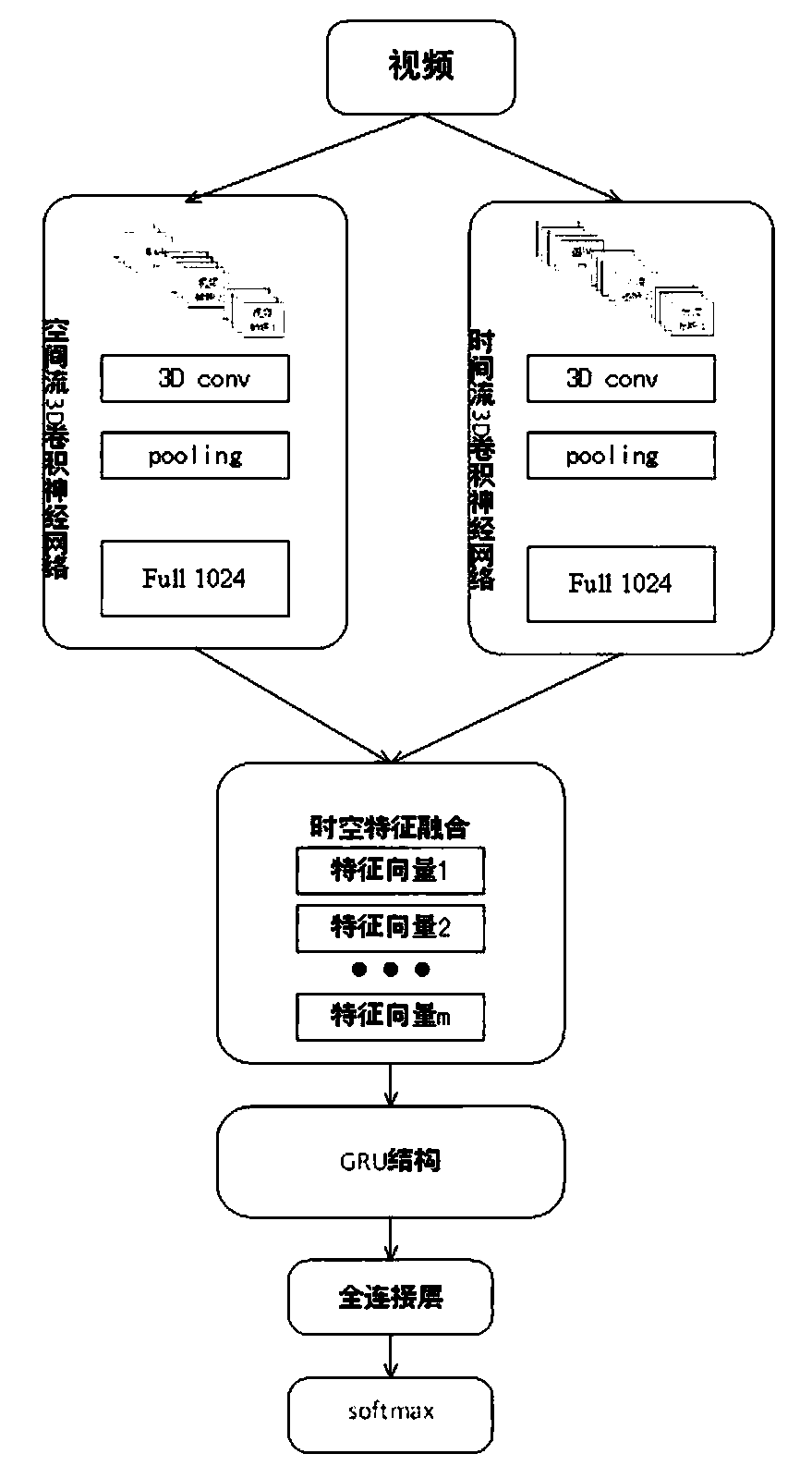

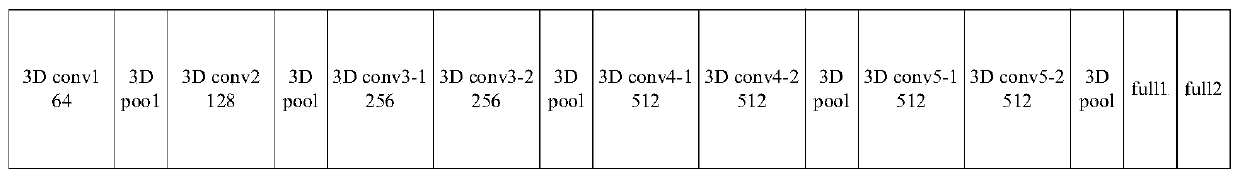

[0048] The deep-learning-based behavior recognition method of the present invention is further described below with reference to the accompanying drawings and a specific embodiment. The method comprises the following steps. Step 1: introduce 3D convolutional neural networks into the dual-stream convolutional neural network, and combine the dual-stream convolutional neural network with a GRU network to build a deeper spatio-temporal dual-stream CNN-GRU neural network model;
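To make the CNN-GRU combination in Step 1 more concrete, the sketch below shows only the recurrent half in plain Python: a minimal GRU cell that consumes a time-ordered sequence of feature vectors, followed by a linear layer that turns the final hidden state into class scores. It is an illustration under stated assumptions, not the patent's implementation: the input vectors merely stand in for the 3D-CNN outputs, the names `GRUCell` and `cnn_gru_classify` are hypothetical, biases are omitted, and the gate equations follow one common GRU formulation (the source does not say which variant is used).

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GRUCell:
    """Minimal bias-free GRU cell (hypothetical helper for illustration).

    z: update gate, r: reset gate, n: candidate hidden state.
    """

    def __init__(self, Wz: Matrix, Uz: Matrix, Wr: Matrix, Ur: Matrix,
                 Wn: Matrix, Un: Matrix) -> None:
        self.Wz, self.Uz = Wz, Uz
        self.Wr, self.Ur = Wr, Ur
        self.Wn, self.Un = Wn, Un

    def step(self, x: Vector, h: Vector) -> Vector:
        z = [sigmoid(a + b) for a, b in zip(matvec(self.Wz, x), matvec(self.Uz, h))]
        r = [sigmoid(a + b) for a, b in zip(matvec(self.Wr, x), matvec(self.Ur, h))]
        # The reset gate scales the recurrent contribution to the candidate state.
        n = [math.tanh(a + ri * b)
             for a, ri, b in zip(matvec(self.Wn, x), r, matvec(self.Un, h))]
        # The update gate interpolates between the candidate and the previous state.
        return [(1.0 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]


def cnn_gru_classify(features: List[Vector], cell: GRUCell, hidden_size: int,
                     W_out: Matrix) -> int:
    """Run the GRU over time-ordered features and return the argmax class index.

    `features` is a stand-in for the per-time-step outputs of the 3D-CNN streams.
    """
    h = [0.0] * hidden_size
    for x in features:  # process time steps in order
        h = cell.step(x, h)
    scores = matvec(W_out, h)  # linear classifier on the final hidden state
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    # Toy 1-D weights: only the candidate-state input weight is non-zero.
    cell = GRUCell([[0.0]], [[0.0]], [[0.0]], [[0.0]], [[1.0]], [[0.0]])
    features = [[1.0], [1.0]]  # two time steps of 1-D "CNN features"
    print(cnn_gru_classify(features, cell, 1, [[1.0], [-1.0]]))  # 0
```

Keeping the recurrent part separate from the convolutional streams mirrors the division of labor described in Step 1: the 3D CNNs summarize short spatio-temporal windows, and the GRU models how those summaries evolve over the whole clip.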

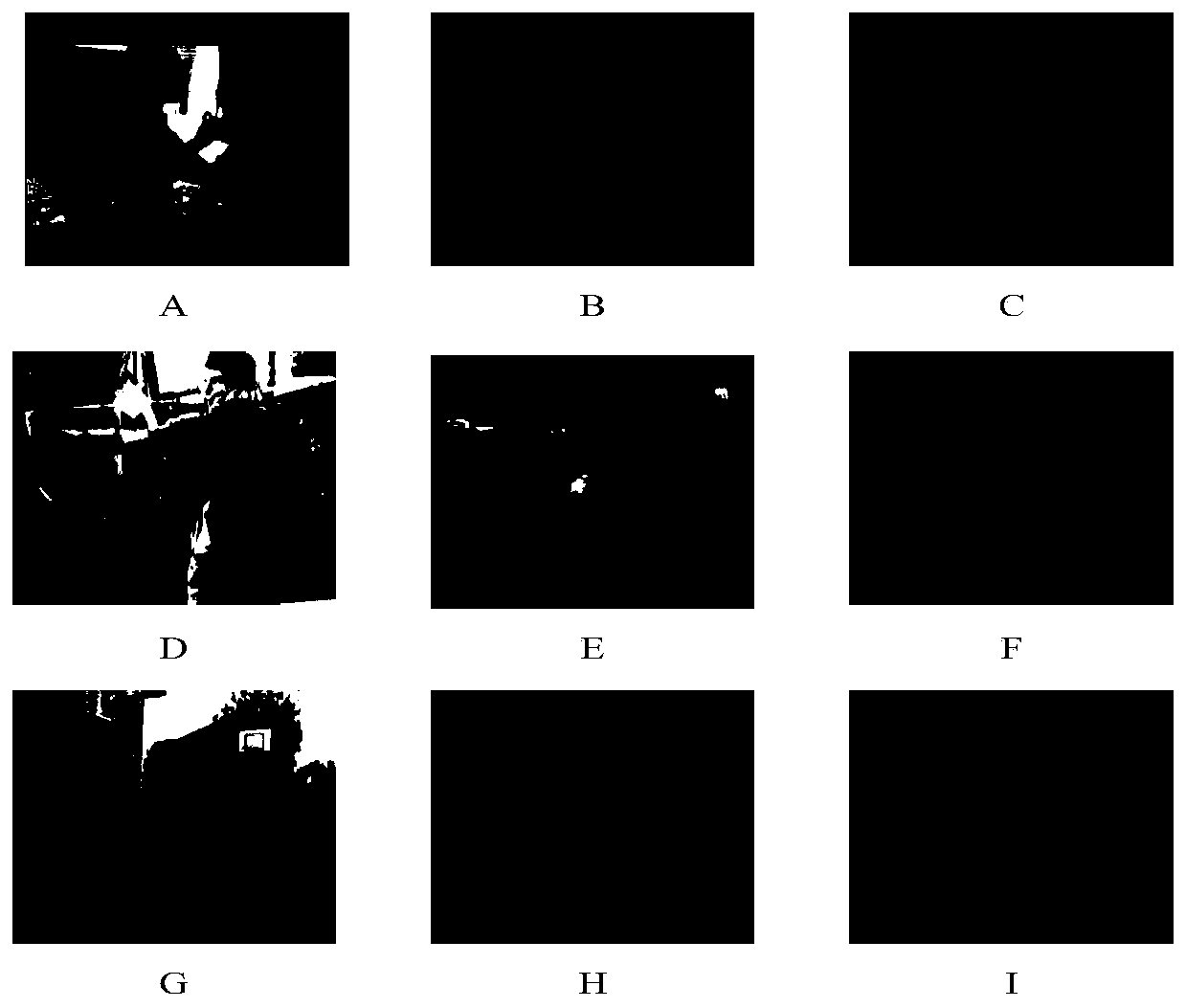

[0049] Step 2: use 3D convolutional neural networks for both the spatial stream and the temporal stream of the spatio-temporal dual-stream convolutional neural network, feed more video frames into the network so that they take part in training, and extract the temporal-domain and spatial-domain features of the video;
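The key operation in Step 2 is 3D convolution: the kernel extends over time as well as height and width, so each output value mixes information from several consecutive frames, and a clip with more frames yields more temporal positions for the network to learn from. The plain-Python sketch below is a single-channel, stride-1, unpadded 3D cross-correlation (what deep-learning frameworks call convolution). The helper names, the toy clip, and the kernels are assumptions for illustration only; the source gives no kernel sizes, channel counts, or layer depths, and a real model would use a framework layer such as PyTorch's `torch.nn.Conv3d`.

```python
from typing import List, Tuple

Volume = List[List[List[float]]]  # indexed as volume[t][y][x]


def shape(v: Volume) -> Tuple[int, int, int]:
    """Return (frames, height, width) of a non-empty volume."""
    return (len(v), len(v[0]), len(v[0][0]))


def conv3d_valid(volume: Volume, kernel: Volume) -> Volume:
    """Single-channel 3D cross-correlation, stride 1, no padding."""
    T, H, W = shape(volume)
    kt, kh, kw = shape(kernel)
    if T < kt or H < kh or W < kw:
        raise ValueError("kernel is larger than the input volume")
    out: Volume = []
    for t in range(T - kt + 1):          # slide over time
        plane = []
        for y in range(H - kh + 1):      # slide over height
            row = []
            for x in range(W - kw + 1):  # slide over width
                acc = 0.0
                # Each output sums over kt consecutive frames, not just one image.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += volume[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


if __name__ == "__main__":
    # Toy clip: 8 frames of 4x4 pixels whose brightness equals the frame index.
    clip = [[[float(t)] * 4 for _ in range(4)] for t in range(8)]
    frame_diff = [[[-1.0]], [[1.0]]]  # 2x1x1 kernel: frame[t+1] - frame[t]
    motion = conv3d_valid(clip, frame_diff)
    print(shape(motion), motion[0][0][0])  # (7, 4, 4) 1.0
```

The two-frame difference kernel responds only to change between consecutive frames, which is the kind of motion cue a temporal stream is meant to capture. With a 3x3x3 kernel, a 16-frame 4x4 clip produces 14 temporal positions, so feeding more frames directly increases how much temporal context the convolution sees.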

[0050] Step 3: fuse the temporal-domain features and the spatial-domain features into a time-ordered spatio-temporal feature sequence, and use the spatio-temporal feature sequence as the inp...
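Step 3 merges the two streams into one time-ordered sequence. The source text is truncated at this point, so neither the fusion operator nor the component that consumes the sequence is stated here. The sketch below assumes both streams yield the same number of time steps and offers per-step concatenation (the default) or element-wise summation as two common options; the function name `fuse_streams` and its `mode` parameter are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def fuse_streams(spatial: Sequence[Vector], temporal: Sequence[Vector],
                 mode: str = "concat") -> List[Vector]:
    """Fuse per-time-step spatial and temporal features into one ordered sequence.

    Assumption: spatial[i] and temporal[i] describe the same time step i.
    """
    if len(spatial) != len(temporal):
        raise ValueError("both streams must yield the same number of time steps")
    fused: List[Vector] = []
    for s, t in zip(spatial, temporal):  # zip preserves the time order
        if mode == "concat":
            fused.append(list(s) + list(t))  # width = len(s) + len(t)
        elif mode == "sum":
            if len(s) != len(t):
                raise ValueError("sum fusion requires equal feature sizes")
            fused.append([a + b for a, b in zip(s, t)])
        else:
            raise ValueError(f"unknown fusion mode: {mode}")
    return fused


if __name__ == "__main__":
    spatial = [[1.0, 2.0], [3.0, 4.0]]  # two time steps, 2-D appearance features
    temporal = [[10.0], [20.0]]         # two time steps, 1-D motion features
    print(fuse_streams(spatial, temporal))  # [[1.0, 2.0, 10.0], [3.0, 4.0, 20.0]]
```

Concatenation keeps both streams' features intact at the cost of a wider vector per time step; summation keeps the width fixed but requires the two streams to produce features of the same size.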
