Third-order low-rank tensor completion method based on GPU

A third-order tensor completion technique in the field of high-performance computing. It addresses two problems with existing methods: they are unsuitable for processing large-scale tensors, and their running time increases. The technique improves computing efficiency.

Examples

Embodiment 1

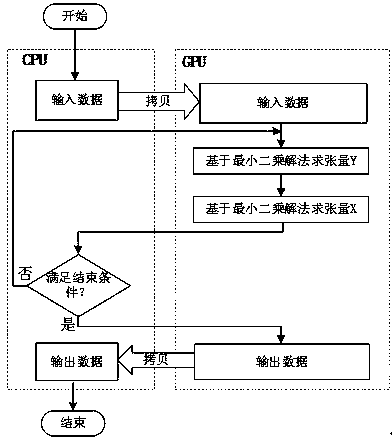

[0045] A GPU-based third-order low-rank tensor completion method. As shown in Figure 1, it comprises the following steps:

[0046] Step 1: The CPU transmits the input data DATA1 to the GPU and initializes the cycle counter l = 1;

[0047] Step 2: The GPU obtains the third-order tensor $Y_l$ of the current cycle l as a least-squares solution;

[0048] Step 3: The GPU obtains the third-order tensor $X_l$ of the current cycle l as a least-squares solution;

[0049] Step 4: The CPU checks whether the termination condition is satisfied. If it is, go to Step 5; otherwise, increase the cycle counter l by 1 and return to Step 2 to continue the loop;

[0050] Step 5: The GPU transmits the output data DATA2 to the CPU.
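The five-step loop above can be sketched on a single host as follows. This is a minimal sketch under stated assumptions, not the patented GPU implementation. The source does not give the model behind $X_l$ and $Y_l$, so here the mode-1 unfolding of T (an m × nk matrix) is assumed to factor as $XY$ with $X \in \mathbb{R}^{m\times r}$ and $Y \in \mathbb{R}^{r\times nk}$. Both factors are updated by ridge-regularized least squares over the observed entries only. The names `complete`, `ls_update`, and `solve`, the rank `r`, the ridge weight `lam`, the random initialization of X, and the stopping rule are all hypothetical. The CPU/GPU transfers of Steps 1 and 5 appear only as comments.

```python
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def solve(A, b):
    """Solve the small square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix [A | b]
    for c in range(n):
        p = max(range(c, n), key=lambda row: abs(M[row][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for cc in range(c, n + 1):
                M[r][cc] -= f * M[c][cc]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][cc] * x[cc] for cc in range(r + 1, n))) / M[r][r]
    return x


def ls_update(fixed, entries, count, r, lam):
    """Ridge least-squares update of every free factor vector, holding `fixed` constant.

    entries[idx] lists (fixed_index, observed_value) pairs for free index idx.
    Each vector w solves (sum u u^T + lam I) w = sum value * u over its observations.
    """
    out = []
    for idx in range(count):
        A = [[lam if a == b else 0.0 for b in range(r)] for a in range(r)]
        rhs = [0.0] * r
        for f, v in entries.get(idx, []):
            u = fixed[f]
            for a in range(r):
                rhs[a] += v * u[a]
                for b in range(r):
                    A[a][b] += u[a] * u[b]
        out.append(solve(A, rhs))
    return out


def complete(observed, shape, r=2, lam=1e-6, tol=1e-8, max_iter=200, seed=0):
    """observed maps (i, j, t) -> value; returns the completed m x n x k nested list."""
    m, n, k = shape
    rows, cols = {}, {}
    for (i, j, t), v in observed.items():
        c = j + n * t  # column index in the m x nk mode-1 unfolding
        rows.setdefault(i, []).append((c, v))
        cols.setdefault(c, []).append((i, v))
    # Step 1 (host -> device copy omitted): random X (assumed), cycle counter l = 1.
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in range(r)] for _ in range(m)]
    prev = None
    for l in range(1, max_iter + 1):
        Y = ls_update(X, cols, n * k, r, lam)  # Step 2: Y_l by least squares, X fixed
        X = ls_update(Y, rows, m, r, lam)      # Step 3: X_l by least squares, Y_l fixed
        err = sum((v - dot(X[i], Y[j + n * t])) ** 2 for (i, j, t), v in observed.items())
        # Step 4 (assumed rule): stop once the fit is tight or has stopped improving.
        if err <= tol or (prev is not None and abs(prev - err) <= tol * prev):
            break
        prev = err
    # Step 5 (device -> host copy omitted): return the full reconstruction X Y.
    return [[[dot(X[i], Y[j + n * t]) for t in range(k)] for j in range(n)] for i in range(m)]
```

Solving one small r × r system per row or column keeps the updates independent. This independence is what makes Steps 2 and 3 natural candidates for batched GPU execution. For a tensor whose mode-1 unfolding has rank 2, passing all entries with `r=2` should reproduce it up to the small ridge bias.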

Embodiment 2

[0051] Embodiment 2: This embodiment is essentially the same as Embodiment 1, with the following distinguishing features:

[0052] The step 1 includes:

[0053] Step 1.1: Allocate space in GPU memory;

[0054] Step 1.2: Transfer the input data DATA1 in the CPU memory to the allocated space in the GPU memory. DATA1 contains the following data:

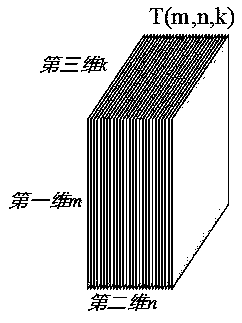

[0055] (1) The third-order tensor to be completed, $T \in \mathbb{R}^{m\times n\times k}$, where $\mathbb{R}$ denotes the set of real numbers and m, n, and k are the sizes of the first, second, and third dimensions of T, respectively. The tensor has m×n×k elements in total. The element whose indices in the first, second, and third dimensions are i, j, and k, respectively, is written $T_{i,j,k}$.

[0056] (2) An observation set S of size o×p×q, with o ≤ m, p ≤ n, and q ≤ k.

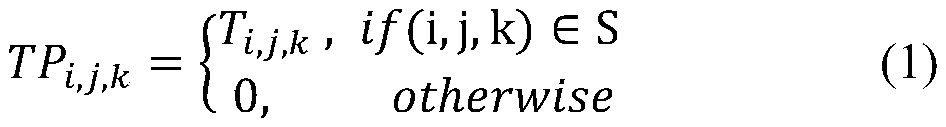

[0057] (3) The observation tensor $TP \in \mathbb{R}^{m\times n\times k}$ determined by the observation set S and the tensor to be completed, $T \in \mathbb{R}^{m\times n\times k}$. TP is obtained by applying the observation function ObserveS() to T, that is, TP = ObserveS(T). Among them,...
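The three inputs can be made concrete under assumptions the source does not state. The sketch below stores T as one contiguous column-major buffer, the storage order cuBLAS uses, with 0-based indices. It treats the observation set S as a set of index triples and implements ObserveS() as the usual sampling operator: it keeps $T_{i,j,t}$ when $(i,j,t) \in S$ and sets every other entry to 0. The third index is written t here only to avoid a clash with the dimension size k. `flat_index` and `observe_s` are hypothetical helper names.

```python
def flat_index(i, j, t, m, n):
    """0-based column-major offset of element (i, j, t) in an m x n x k buffer (assumed layout)."""
    return i + m * (j + n * t)


def observe_s(T_flat, S, shape):
    """Hypothetical ObserveS(): copy the entries indexed by S and zero all others."""
    m, n, k = shape
    TP = [0.0] * (m * n * k)
    for i, j, t in S:
        if not (0 <= i < m and 0 <= j < n and 0 <= t < k):
            raise IndexError(f"index {(i, j, t)} outside tensor of shape {shape}")
        idx = flat_index(i, j, t, m, n)
        TP[idx] = T_flat[idx]
    return TP


# Example: a 2 x 3 x 2 tensor whose entries equal their flat offsets, observed at two positions.
shape = (2, 3, 2)
T_flat = [float(x) for x in range(2 * 3 * 2)]
TP = observe_s(T_flat, {(0, 1, 0), (1, 2, 1)}, shape)
```

Only the positions in S are copied, so TP keeps the full m×n×k shape of T. This matches $TP \in \mathbb{R}^{m\times n\times k}$ in item (3).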
