A neural network acceleration system based on a block cyclic sparse matrix

A neural network and sparse matrix technology, applied in the field of neural network acceleration systems based on block cyclic sparse matrices. It addresses problems such as the inability to effectively exploit excitation and weight sparsity, irregularity, and load imbalance. It improves processing energy efficiency and throughput, reduces storage capacity requirements, and reduces excessive memory access.

Detailed Description of the Embodiments

[0030] The solution of the present invention will be described in detail below in conjunction with the accompanying drawings.

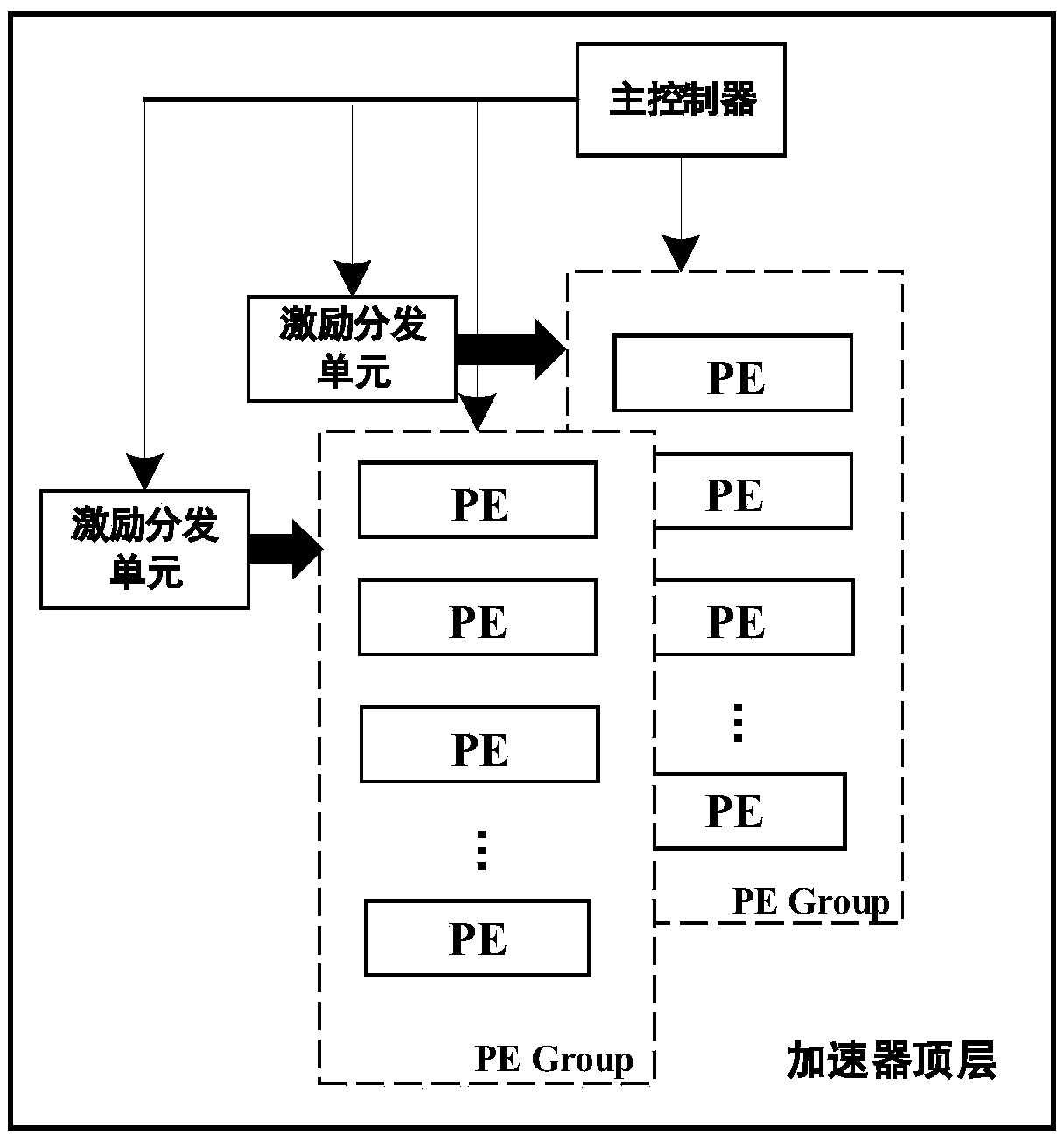

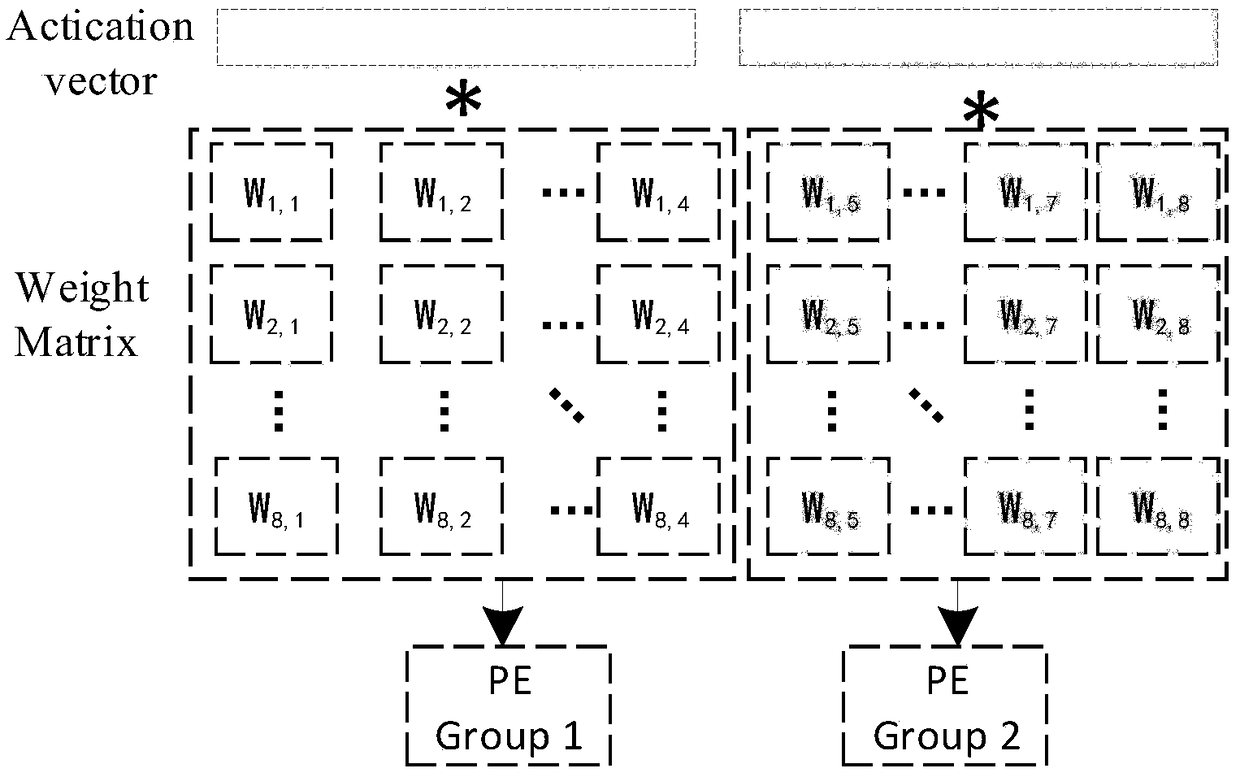

[0031] As shown in Figure 1, the accelerator system of this embodiment combines two compression methods, circulant (block-cyclic) structuring and sparsification, and exploits the characteristics of the compressed neural network for acceleration. The architecture makes effective use of the compressed weights and excitations, providing high throughput and low latency.
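The combination of circulant structure and sparsification described above can be illustrated with a minimal Python sketch. Because the excerpt does not specify the storage format, it assumes that the weight matrix is partitioned into k × k circulant blocks, each stored only by its first column, that pruned blocks are simply not stored, and that zero excitations are skipped. All names (`circulant_block_matvec`, `block_circulant_matvec`, `expand_dense`, `BlockStore`) are hypothetical and do not describe the patented hardware.

```python
from typing import Dict, List, Tuple

# Hypothetical storage format: each non-pruned k x k circulant block is kept
# as its first column (k values instead of k*k); pruned blocks are absent.
BlockStore = Dict[Tuple[int, int], List[float]]


def circulant_block_matvec(c: List[float], x: List[float]) -> List[float]:
    """Multiply the circulant block whose first column is c by x.

    Entry (r, s) of the block is c[(r - s) % k], so
    out[r] = sum_s c[(r - s) % k] * x[s], a circular convolution.
    """
    k = len(c)
    out = [0.0] * k
    for s, xs in enumerate(x):
        if xs == 0.0:
            continue  # zero excitation: no multiplications issued
        for r in range(k):
            out[r] += c[(r - s) % k] * xs
    return out


def block_circulant_matvec(blocks: BlockStore, a: List[float],
                           p: int, q: int, k: int) -> List[float]:
    """Compute W a for a (p*k) x (q*k) matrix made of k x k circulant blocks.

    Blocks missing from `blocks` are treated as all-zero (pruned).
    """
    assert len(a) == q * k
    y = [0.0] * (p * k)
    for (bi, bj), c in blocks.items():
        partial = circulant_block_matvec(c, a[bj * k:(bj + 1) * k])
        for r in range(k):
            y[bi * k + r] += partial[r]
    return y


def expand_dense(blocks: BlockStore, p: int, q: int, k: int) -> List[List[float]]:
    """Rebuild the dense matrix, used only to check the compressed product."""
    W = [[0.0] * (q * k) for _ in range(p * k)]
    for (bi, bj), c in blocks.items():
        for r in range(k):
            for s in range(k):
                W[bi * k + r][bj * k + s] = c[(r - s) % k]
    return W


if __name__ == "__main__":
    p, q, k = 2, 2, 3
    # Only the two diagonal blocks survive pruning.
    blocks: BlockStore = {(0, 0): [1.0, 2.0, 3.0], (1, 1): [4.0, 5.0, 6.0]}
    a = [1.0, 0.0, 2.0, 0.0, 0.0, 1.0]
    y = block_circulant_matvec(blocks, a, p, q, k)
    W = expand_dense(blocks, p, q, k)
    dense = [sum(w * x for w, x in zip(row, a)) for row in W]
    print(y)  # [5.0, 8.0, 5.0, 5.0, 6.0, 4.0]
    assert y == dense
```

In this example 6 stored values stand in for the 36 entries of the dense 6 × 6 matrix, and the three zero excitations trigger no multiplications. A hardware design might instead compute each circulant product with FFTs; the direct loop is used here only for clarity.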

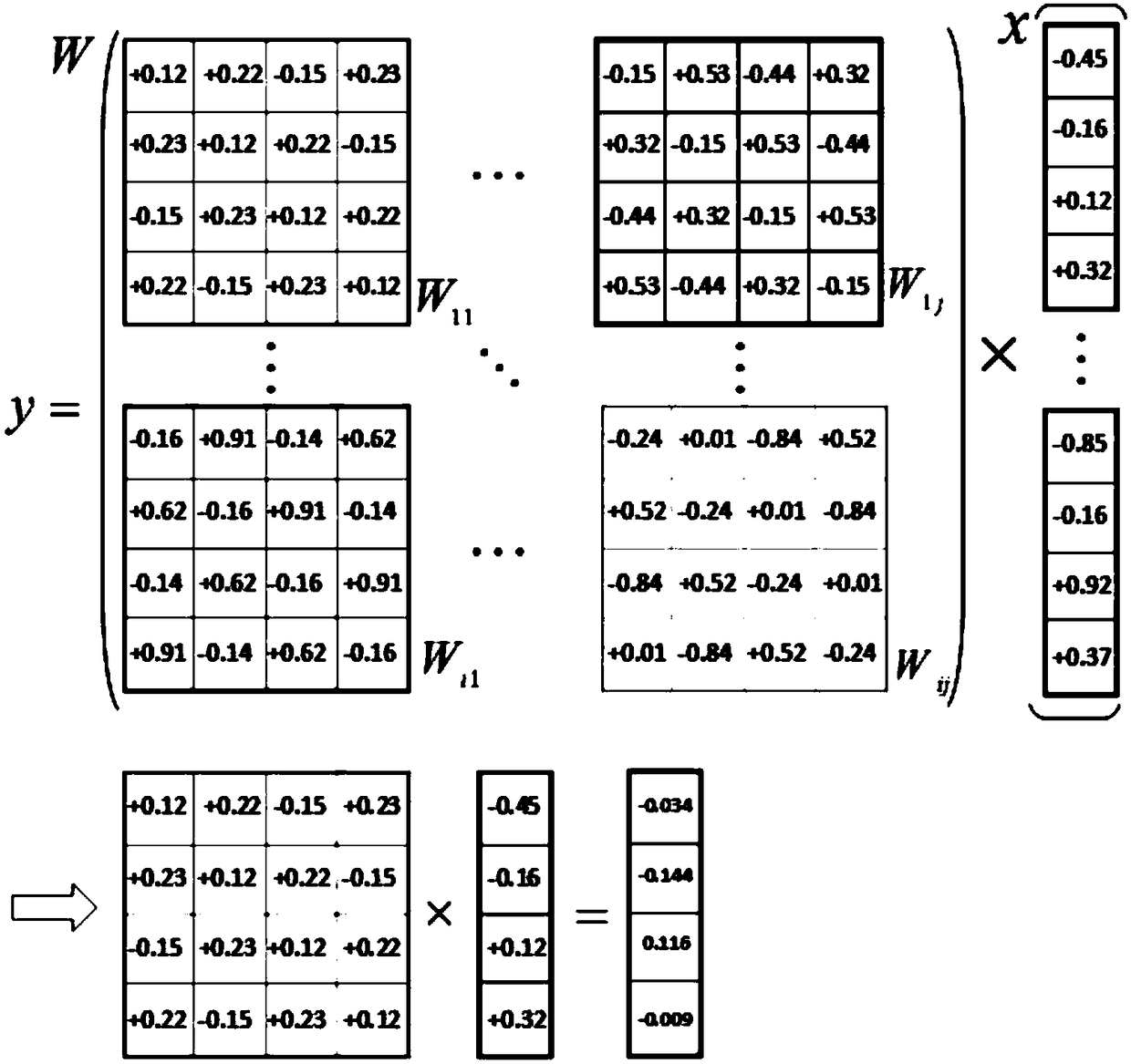

[0032] The fully connected layer is computed as follows:

[0033] $$y = f(Wa + b) \tag{1}$$

[0034] where a is the input excitation vector, y is the output vector, b is the bias vector, f is the nonlinear function, and W is the weight matrix.

[0035] Each element of the output vector y in formula (1) can be computed as:

[0036] $$y_i = f\left(\sum_{j} W_{ij}\, a_j + b_i\right)$$
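The element-wise form of formula (1), y_i = f(∑_j W_ij a_j + b_i), can be sketched in plain Python. The sketch assumes ReLU for f, which the excerpt does not specify. It visits only nonzero excitations and counts the multiply-accumulate operations (MACs) performed, to illustrate how excitation sparsity reduces work. The names `fully_connected` and `relu` are hypothetical.

```python
from typing import Callable, List, Tuple


def relu(v: float) -> float:
    """Assumed choice for the nonlinear function f (not specified in the excerpt)."""
    return v if v > 0.0 else 0.0


def fully_connected(W: List[List[float]], a: List[float], b: List[float],
                    f: Callable[[float], float] = relu) -> Tuple[List[float], int]:
    """Compute y_i = f(sum_j W[i][j] * a[j] + b[i]) for every output i.

    Only nonzero excitations are visited; the second return value counts
    the multiply-accumulate operations actually performed.
    """
    n_out, n_in = len(W), len(a)
    assert len(b) == n_out and all(len(row) == n_in for row in W)
    nonzero = [j for j, aj in enumerate(a) if aj != 0.0]  # indices of nonzero excitations
    y: List[float] = []
    macs = 0
    for i in range(n_out):
        acc = b[i]  # start from the bias term
        for j in nonzero:
            acc += W[i][j] * a[j]
            macs += 1
        y.append(f(acc))
    return y, macs


if __name__ == "__main__":
    W = [[1.0, -2.0, 0.5], [0.0, 3.0, -1.0]]
    a = [2.0, 0.0, 4.0]
    b = [0.5, -1.0]
    y, macs = fully_connected(W, a, b)
    print(y, macs)  # [4.5, 0.0] 4  (a dense loop would need 6)
```

Skipping by excitation index means every output row reads the same subset of weight columns. This is one simple way to share the sparsity savings across rows; it is not necessarily how the patented architecture balances load.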

[0037] Therefore, the main operations of the fully connected layer are divided into: mat...
