Panoramic inertial navigation SLAM method based on multiple key frames

Keyframe and panoramic-vision technology, applied to navigation by velocity/acceleration measurement, camera devices, and related fields. It addresses the problems that scale estimation is strongly affected, that visual SLAM methods cannot operate normally, and that such methods are sensitive to lighting. The stated effects are improved accuracy and robustness, verified real-time performance of the system, and a balance with computing efficiency.

Embodiment 1

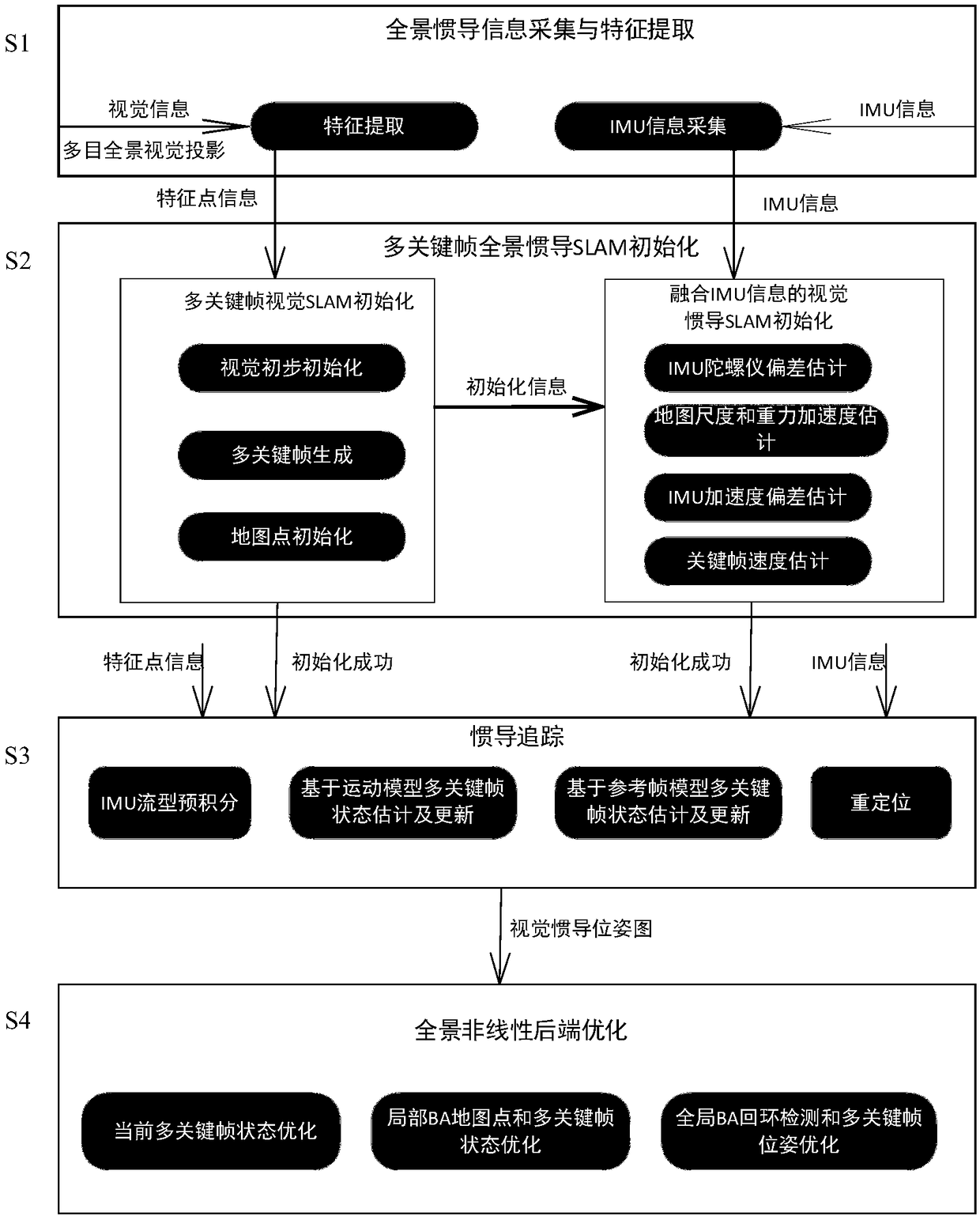

[0034] This embodiment provides a multi-keyframe-based panoramic inertial navigation SLAM method. Figure 1 shows a flow diagram of the method. As shown in Figure 1, the panoramic inertial navigation SLAM method comprises the following steps:

[0035] S1: panoramic inertial navigation information collection and feature extraction. In this embodiment, hardware-synchronized triggering runs the inertial unit in real time at 100 Hz and the three cameras in real time at 20 Hz. AGAST corner detection is used to extract features from each camera image, and descriptors are computed using the task-based BRIEF online learning algorithm. In this embodiment, the multi-camera panoramic vision projection determines the multi-body frame from the extrinsic relationship of the three cameras, and extracts the projection relationship between the fea...
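The sampling rates in step S1 imply that five inertial samples fall between each pair of consecutive camera frames. The following is a minimal sketch of that bookkeeping only, not the patent's implementation: the function names, the use of integer microsecond timestamps, and the half-open interval convention are all assumptions made for illustration.

```python
from bisect import bisect_left

IMU_RATE_HZ = 100  # inertial unit rate stated in the embodiment
CAM_RATE_HZ = 20   # camera rate stated in the embodiment


def make_timestamps_us(rate_hz, duration_s):
    """Return evenly spaced timestamps in integer microseconds (hypothetical helper)."""
    period_us = 1_000_000 // rate_hz
    return [i * period_us for i in range(int(duration_s * rate_hz))]


def group_imu_between_frames(imu_ts, cam_ts):
    """Collect, for each consecutive frame pair (t0, t1), the IMU timestamps in [t0, t1).

    Both inputs must be sorted in ascending order.
    """
    groups = []
    for t0, t1 in zip(cam_ts, cam_ts[1:]):
        lo = bisect_left(imu_ts, t0)  # first IMU sample at or after t0
        hi = bisect_left(imu_ts, t1)  # first IMU sample at or after t1 (excluded)
        groups.append(imu_ts[lo:hi])
    return groups


# One second of idealized, perfectly synchronized data (an assumption).
imu_ts = make_timestamps_us(IMU_RATE_HZ, 1)
cam_ts = make_timestamps_us(CAM_RATE_HZ, 1)
groups = group_imu_between_frames(imu_ts, cam_ts)
```

With ideal hardware triggering, every group holds `IMU_RATE_HZ // CAM_RATE_HZ` samples. A real system would also have to tolerate dropped samples and timestamp jitter, which this sketch ignores.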
