Video classification method based on dynamic and static features

A video classification technology based on dynamic and static features, applied in a cross-disciplinary field. It addresses problems such as unsatisfactory results, high hardware requirements, and poor real-time performance, and achieves good accuracy and effectiveness with improved classification accuracy.

Embodiment Construction

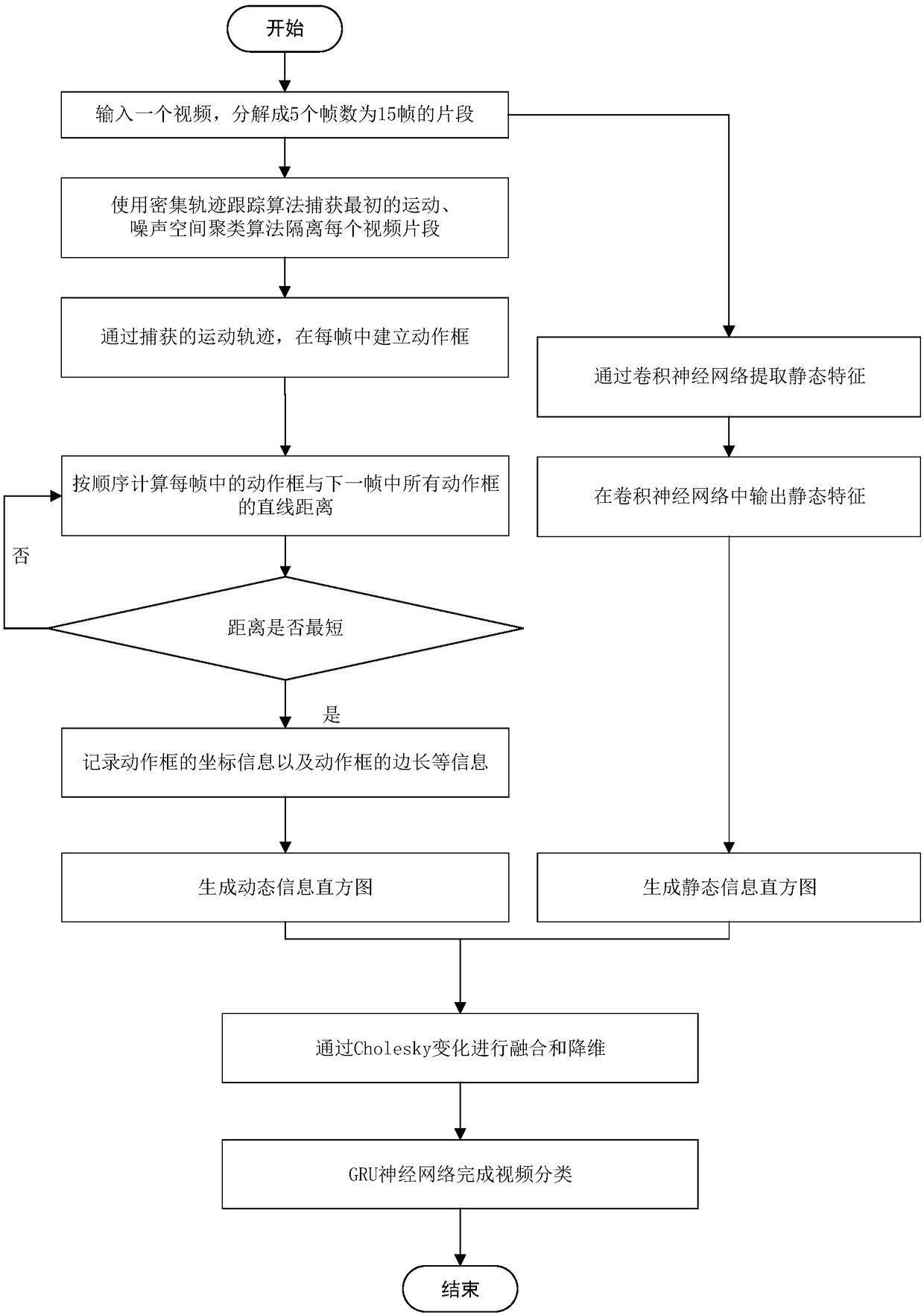

[0051] The technical solution of the present invention is described in further detail below with reference to the accompanying drawings:

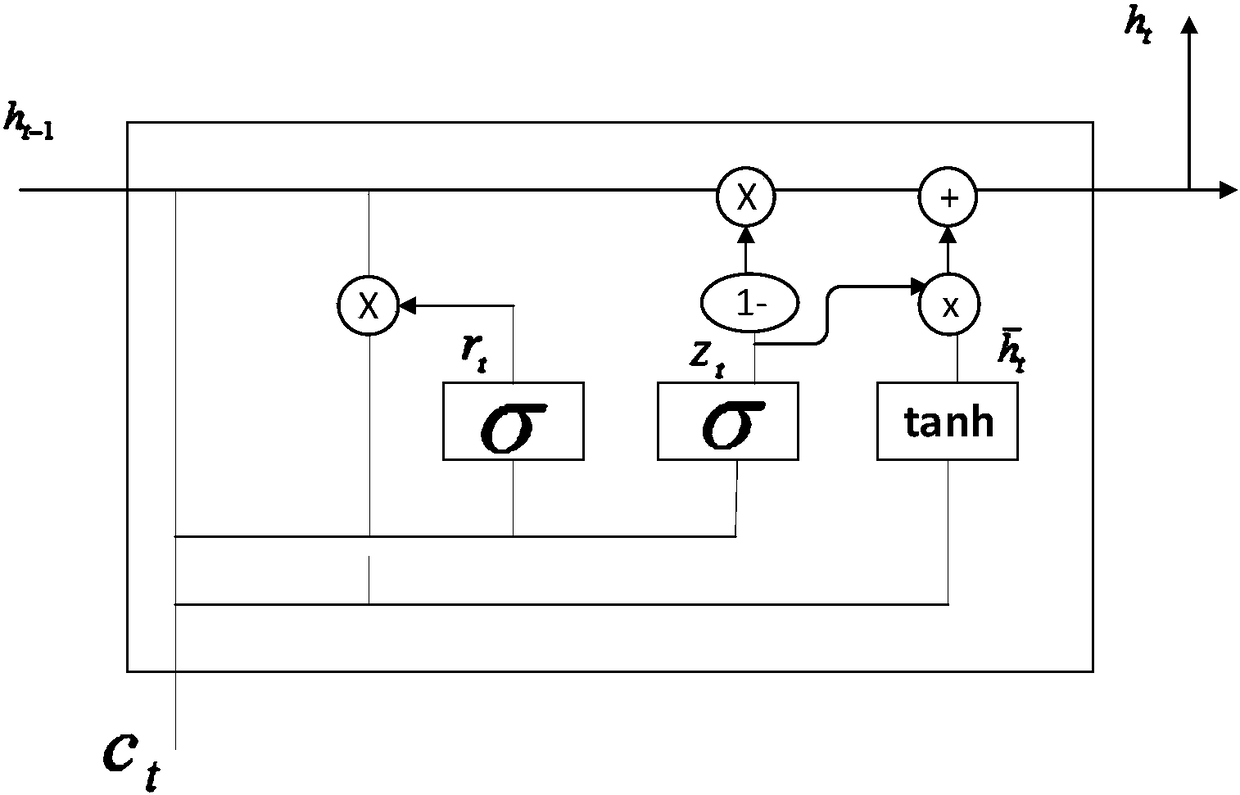

[0052] The video classification method based on static and dynamic features of the present invention comprises the following steps:

[0053] Step 1) Input a video provided by the user and decompose it into video segments of 1 frame each, with an interval of 5 frames between segments;
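
Step 1) can be sketched in Python as follows. This is a minimal sketch, assuming that "segments of 1 frame at an interval of 5 frames" means every fifth frame (frames 0, 5, 10, ...) is kept as its own single-frame segment. The function name `sample_single_frame_segments` is hypothetical, and the frames are taken as an already decoded sequence; in practice they could come from a decoder such as OpenCV's `cv2.VideoCapture`.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sample_single_frame_segments(frames: Sequence[T], interval: int = 5) -> List[List[T]]:
    """Split a decoded frame sequence into single-frame segments.

    Hypothetical helper. Assumption (not stated explicitly in the source):
    one frame is kept every `interval` frames, starting at frame 0, and
    each kept frame forms its own segment.
    """
    if interval < 1:
        raise ValueError("interval must be a positive integer")
    # range(0, len, interval) yields indices 0, interval, 2*interval, ...
    return [[frames[i]] for i in range(0, len(frames), interval)]
```

For example, a 12-frame clip passed with the default interval yields three segments, containing frames 0, 5 and 10.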

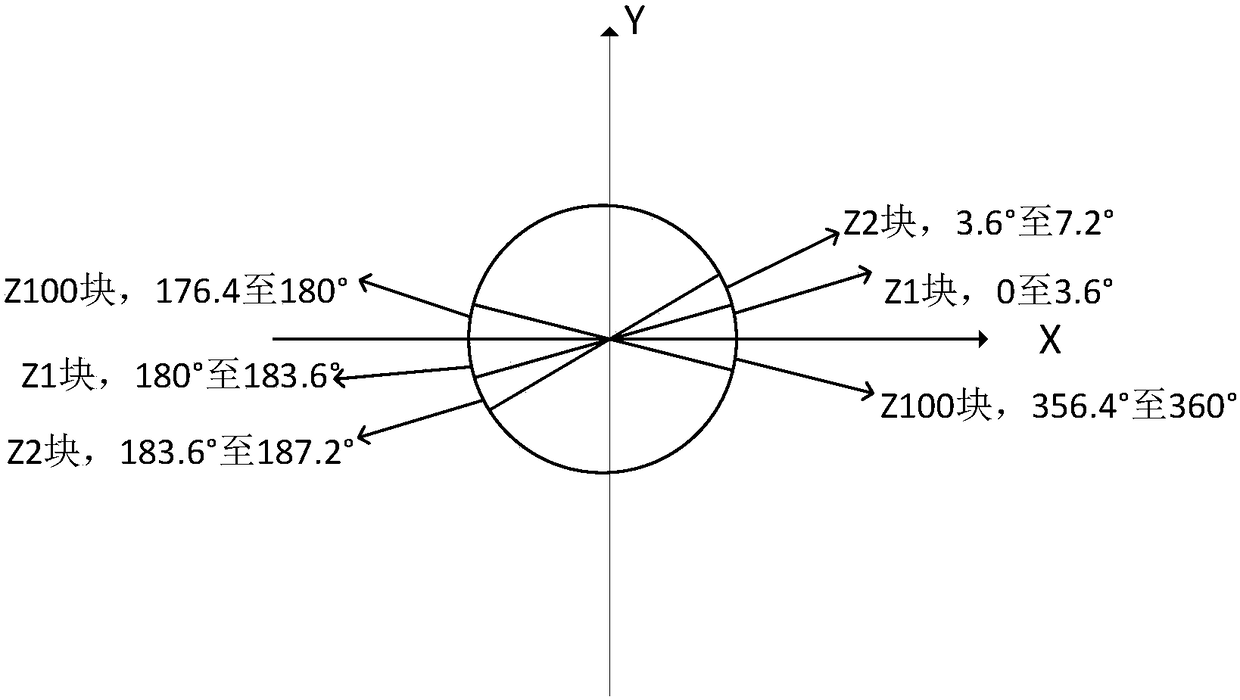

[0054] Step 2) Track the moving objects in the video input in Step 1) using the dense trajectory tracking algorithm (DT algorithm), and use the density-based spatial clustering of applications with noise algorithm (DBSCAN clustering algorithm) to segment each frame of the video, thereby capturing and tracking the dynamic information in the video; the DT algorithm densely samples feature points on a grid at multiple scales of the image; the DBSCAN clustering algorithm starts from a selected core point and contin...
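
The two building blocks named in Step 2) can be illustrated with a short, dependency-free Python sketch. `dense_grid_points` shows only the multi-scale grid sampling that seeds the DT algorithm; the 5-pixel step, 8 scales and scale factor 1/√2 are assumed defaults (values commonly used for dense trajectories, not values stated in this document), and the optical-flow tracking that turns sampled points into trajectories is omitted. `dbscan` is a plain implementation of standard DBSCAN that, as the text describes, starts from a core point and expands the cluster through density-reachable neighbors; `eps` and `min_pts` are tuning parameters the source does not specify. Both function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


def dense_grid_points(width: int, height: int, step: int = 5, num_scales: int = 8,
                      scale_factor: float = 1 / math.sqrt(2)) -> List[Tuple[float, float, int]]:
    """Grid-sample points on a spatial pyramid (hypothetical helper).

    Returns (x, y, scale_index) with x, y mapped back to original-image
    coordinates. step / num_scales / scale_factor are assumed defaults.
    """
    points = []
    scale = 1.0
    for s in range(num_scales):
        w, h = int(width * scale), int(height * scale)
        if w < step or h < step:
            break  # image at this scale is too small to hold a grid cell
        for y in range(step // 2, h, step):
            for x in range(step // 2, w, step):
                points.append((x / scale, y / scale, s))
        scale *= scale_factor
    return points


def dbscan(points: Sequence[Tuple[float, float]], eps: float, min_pts: int) -> List[int]:
    """Standard DBSCAN on 2-D points; returns one label per point, -1 = noise."""
    n = len(points)
    labels: List[Optional[int]] = [None] * n  # None = not visited yet

    def neighbors(i: int) -> List[int]:
        # Brute-force O(n) region query; includes the point itself.
        xi, yi = points[i]
        return [j for j in range(n)
                if math.hypot(points[j][0] - xi, points[j][1] - yi) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        nbrs = neighbors(i)
        if len(nbrs) < min_pts:
            labels[i] = -1  # provisional noise; may later become a border point
            continue
        cluster += 1  # i is a core point: start a new cluster from it
        labels[i] = cluster
        queue = [j for j in nbrs if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster
            j_nbrs = neighbors(j)
            if len(j_nbrs) >= min_pts:
                queue.extend(j_nbrs)  # j is also a core point: keep expanding
    return [label if label is not None else -1 for label in labels]
```

For instance, with `eps=1.5` and `min_pts=3`, the points `[(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]` are labeled `[0, 0, 0, 1, 1, 1, -1]`: two compact groups and one noise point. The neighbor search here is O(n²); for realistic per-frame point sets, a library implementation such as scikit-learn's `sklearn.cluster.DBSCAN(eps=..., min_samples=...)` with a spatial index would likely be more practical.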
