Object and indoor small scene restoring and modeling method based on RGB-D camera data

A modeling method and object technology, applied in image data processing, instruments, computing, etc. It addresses the problems of low scene accuracy and slow speed, and achieves short processing time, high accuracy, and memory savings.

Status: Inactive. Publication date: 2017-10-10

TONGJI UNIV

Cites: 1 | Cited by: 45


AI Technical Summary

Problems solved by technology

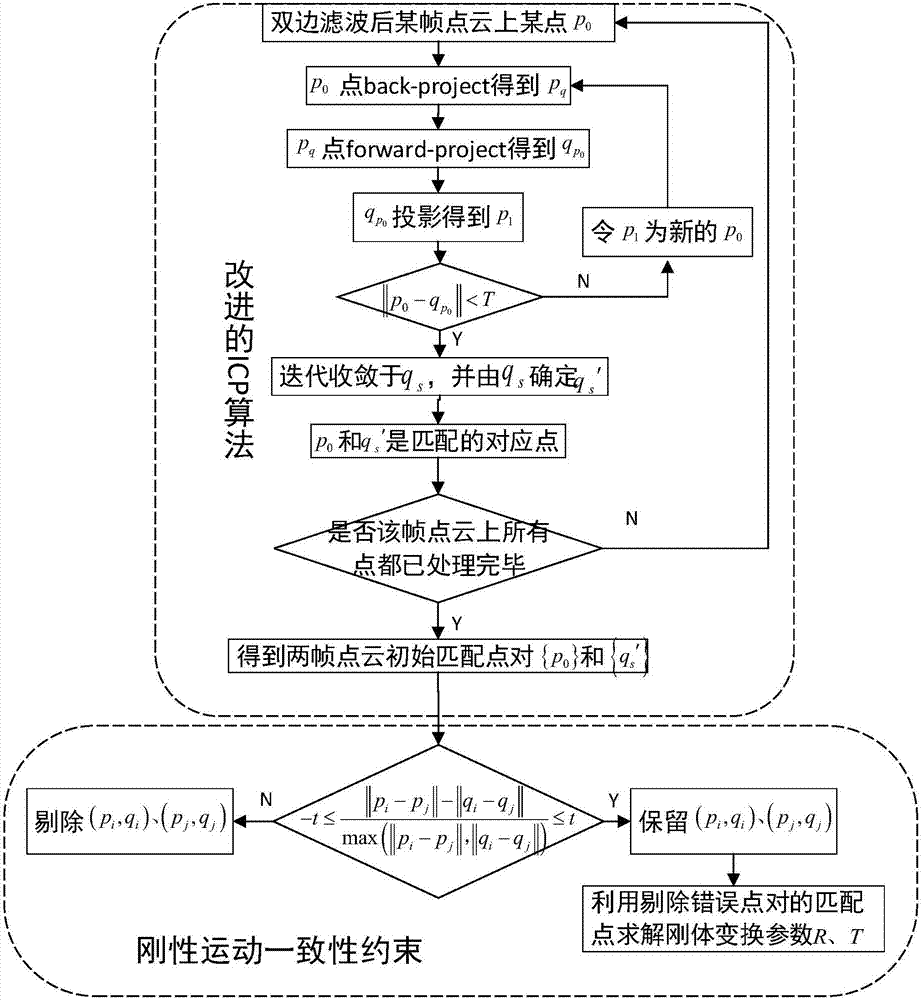

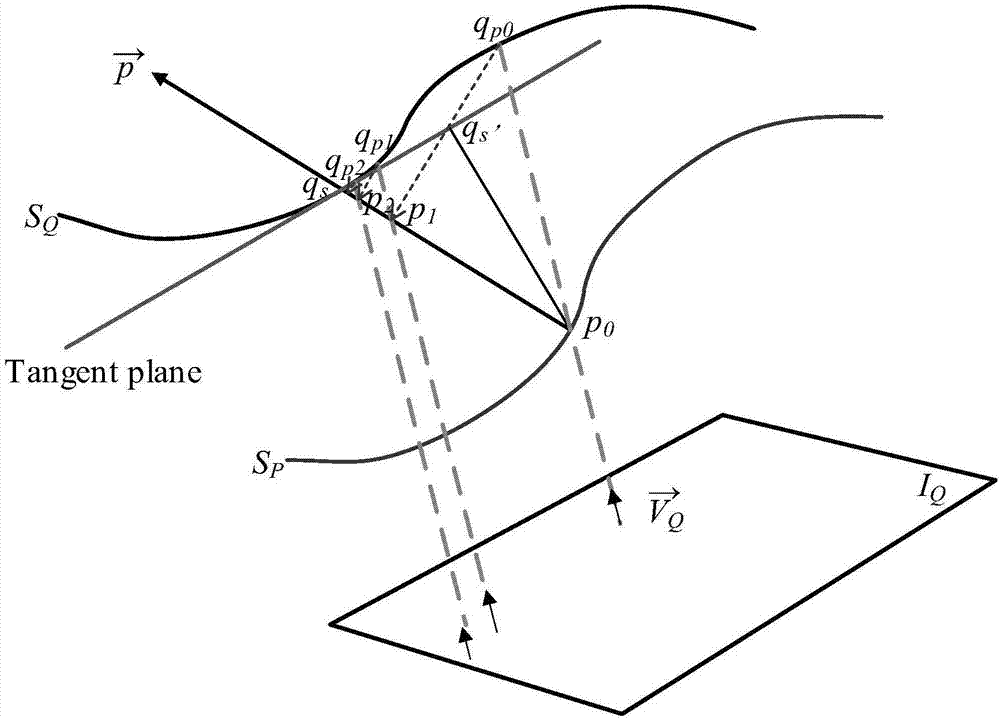

The registration method of the present invention combines the speed advantage of the point-to-projection algorithm with the accuracy advantage of the point-to-tangent-plane (point-to-plane) algorithm. It thereby overcomes the slow speed and low scene accuracy of traditional point cloud registration methods, and can quickly and accurately find, on the target point cloud, the point corresponding to each point of the source point cloud, enabling multi-view point cloud stitching.
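A minimal sketch of how the two ideas can be combined, written in the style of the projective-association, point-to-plane ICP commonly used with RGB-D data. It is not the patent's exact formulation. Assumptions (not from the source): the target frame is stored as organized vertex and normal maps (H×W×3) from the same pinhole camera with intrinsics `K = (fx, fy, cx, cy)`; the rotation update is linearized for small angles; `register`, `projective_correspondences`, and `rodrigues` are hypothetical names.

```python
import numpy as np


def rodrigues(w):
    """Rotation matrix for an axis-angle vector w (Rodrigues' formula)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3)
    k = w / theta
    kx = np.array([[0.0, -k[2], k[1]],
                   [k[2], 0.0, -k[0]],
                   [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * kx + (1.0 - np.cos(theta)) * (kx @ kx)


def projective_correspondences(pts, tgt_vmap, tgt_nmap, K, max_dist):
    """Point-to-projection matching: project each source point into the target
    depth image and take the target vertex/normal stored at that pixel.
    Assumption: tgt_vmap / tgt_nmap are organized HxWx3 maps (hypothetical layout)."""
    fx, fy, cx, cy = K
    h, w, _ = tgt_vmap.shape
    z = pts[:, 2]
    valid = z > 1e-6
    zs = np.where(valid, z, 1.0)  # avoid division by zero for invalid points
    u = np.round(fx * pts[:, 0] / zs + cx).astype(int)
    v = np.round(fy * pts[:, 1] / zs + cy).astype(int)
    valid &= (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.nonzero(valid)[0]
    q = tgt_vmap[v[idx], u[idx]]
    n = tgt_nmap[v[idx], u[idx]]
    # keep pixels with valid target depth and reject pairs that are too far apart
    ok = (q[:, 2] > 0) & (np.linalg.norm(pts[idx] - q, axis=1) < max_dist)
    return idx[ok], q[ok], n[ok]


def register(src, tgt_vmap, tgt_nmap, K, iters=20, max_dist=0.1):
    """Estimate R, t aligning src (Nx3) to the target frame by minimizing the
    point-to-plane error sum(((R p + t) - q) . n)^2 over projective matches."""
    R, t = np.eye(3), np.zeros(3)
    for _ in range(iters):
        moved = src @ R.T + t
        idx, q, n = projective_correspondences(moved, tgt_vmap, tgt_nmap, K, max_dist)
        if len(idx) < 6:  # too few matches for 6 unknowns
            break
        p = moved[idx]
        # linearize R ~ I + [w]x: residual = (p - q).n + w.(p x n) + dt.n
        A = np.hstack([np.cross(p, n), n])
        b = -np.einsum("ij,ij->i", p - q, n)
        x, *_ = np.linalg.lstsq(A, b, rcond=None)
        dR = rodrigues(x[:3])
        # compose the increment with the current pose
        R, t = dR @ R, dR @ t + x[3:]
        if np.linalg.norm(x) < 1e-10:  # converged
            break
    return R, t
```

The projection step finds a candidate match per point in constant time without a k-d tree search, while the point-to-plane residual lets surfaces slide along their tangent planes instead of penalizing every offset. That is one plausible reading of the speed/accuracy trade-off described above.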


Examples

Experimental program

Comparison scheme

Effect test

Embodiment 1

[0056] For the conference room scene in this embodiment, a total of 151 frames of data were collected at a sampling interval of 3 s.
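As a quick check of what these numbers imply, assuming a fixed sampling interval starting at t = 0, 151 frames span 150 intervals, i.e. 450 s (7.5 min) of capture. If each frame were registered to its predecessor (an assumption; the pairing scheme is not stated here), that would mean 150 pairwise registrations.

```python
# Assumption: frames are captured at a fixed 3 s interval starting at t = 0.
NUM_FRAMES = 151
INTERVAL_S = 3.0

timestamps = [i * INTERVAL_S for i in range(NUM_FRAMES)]
capture_span_s = timestamps[-1] - timestamps[0]  # 150 intervals between 151 frames
consecutive_pairs = NUM_FRAMES - 1  # hypothetical frame-to-predecessor registrations

print(capture_span_s, capture_span_s / 60, consecutive_pairs)  # prints "450.0 7.5 150"
```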


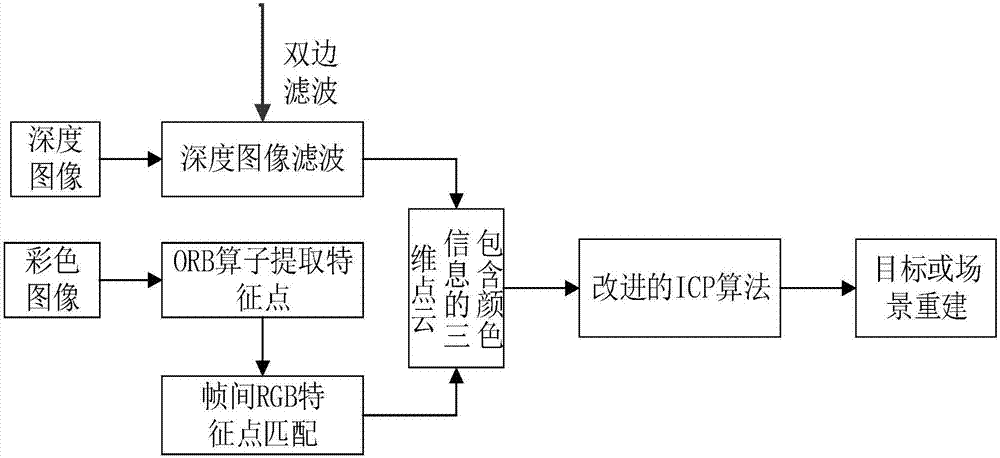

The invention aims to provide an object and indoor small scene restoration and modeling method based on RGB-D camera data. In summary, during the registration and modeling of RGB-D depth data (point clouds), the method integrates point-to-plane and point-to-projection constraints and applies them to the accurate registration of the sequential depth data (point clouds) acquired by the RGB-D camera. It finally produces a point cloud model (.ply format) of an object or small scene that can be used for object measurement and further CAD modeling. The registration method of the invention takes into account the speed advantage of the point-to-projection algorithm and integrates the accuracy advantage of the point-to-tangent-plane algorithm. This overcomes the slow speed and low scene accuracy of traditional point cloud registration methods, and can quickly and accurately find the point on the target point cloud that corresponds to a point on the source point cloud, thereby realizing multi-view point cloud stitching.
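The abstract treats each depth frame as a point cloud and outputs a .ply model. Below is a minimal sketch of those two ends of the pipeline. It assumes a pinhole camera with intrinsics `fx, fy, cx, cy`, depth stored in millimetres, and per-frame poses `(R, t)` already estimated by registration into a common frame. The function names are hypothetical, and colour and normals, which a real RGB-D model would likely carry, are omitted.

```python
import numpy as np


def depth_to_points(depth, fx, fy, cx, cy, depth_scale=1000.0):
    """Back-project a depth image to an Nx3 array of camera-frame points.

    depth_scale converts raw depth units to metres (1000.0 assumes millimetres);
    pixels with depth 0 are treated as missing measurements and skipped.
    """
    v, u = np.nonzero(depth > 0)
    z = depth[v, u].astype(np.float64) / depth_scale
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack([x, y, z])


def merge_frames(clouds, poses):
    """Map each frame's points into a common frame with its (R, t) pose and stack them."""
    return np.vstack([pts @ np.asarray(R).T + np.asarray(t)
                      for pts, (R, t) in zip(clouds, poses)])


def write_ascii_ply(path, points):
    """Write an Nx3 point array as a minimal ASCII PLY file (vertices only)."""
    with open(path, "w") as f:
        f.write("ply\nformat ascii 1.0\n")
        f.write(f"element vertex {len(points)}\n")
        f.write("property float x\nproperty float y\nproperty float z\n")
        f.write("end_header\n")
        for x, y, z in points:
            f.write(f"{x:.6f} {y:.6f} {z:.6f}\n")
```

ASCII PLY is used here for readability. The binary PLY variants are more compact for large scenes.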

Description

Technical field

[0001] The invention belongs to the field of photogrammetry and computer vision, and in particular relates to a method for restoring and modeling objects and small indoor scenes based on RGB-D camera data.

Background

[0002] In recent years, 3D reconstruction has increasingly become a research hotspot in computer vision and has been widely used in fields such as industrial surveying, cultural relic protection, reverse engineering, e-commerce, computer animation, medical anatomy, photomicrography, and virtual reality. Commonly used object and indoor modeling methods either acquire point cloud data with a laser scanner (LIDAR) for modeling, or use cameras to capture overlapping images and model them based on binocular stereo vision. The former is expensive and the instrument is cumbersome to operate; the latter involves complex, time-consuming data processing, places requirements on lighting conditions, and requires special calibration of the camera...


Application Information

Patent Type & Authority: Applications (China)

IPC (8): G06T7/33; G06T7/55

CPC: G06T7/33; G06T7/55; G06T2207/10028

Inventors: 叶勤 (Ye Qin), 姚亚会 (Yao Yahui), 林怡 (Lin Yi), 宇洁 (Yu Jie), 叶张林 (Ye Zhanglin), 姜雪芹 (Jiang Xueqin)

Owner: TONGJI UNIV
