Target tracking method for RGB-D (RGB-Depth) data cross-modal feature learning based on sparse deep denoising autoencoder

A technology involving modality features and autoencoders, applied in the field of target tracking. It addresses the problem of ignoring the correlation between the RGB modality and the Depth modality, and achieves high accuracy and strong robustness.

Embodiment Construction

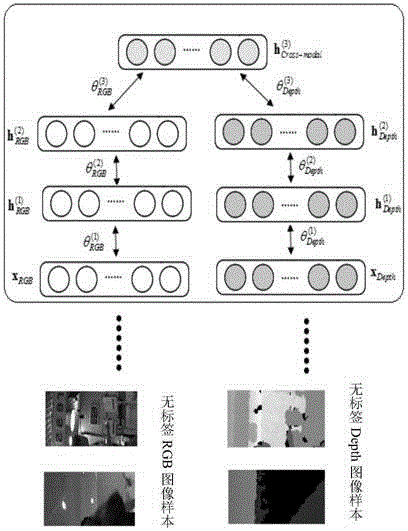

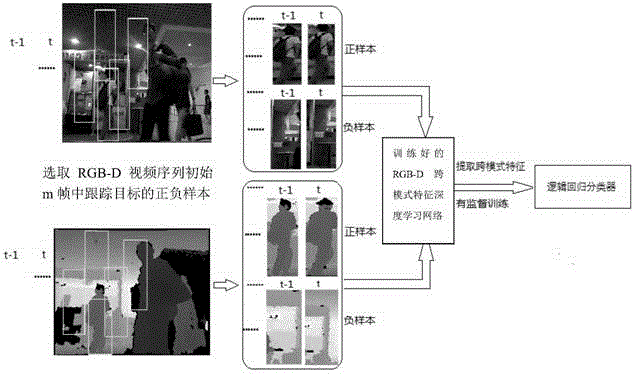

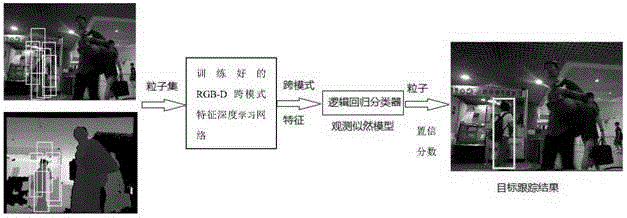

[0037] As shown in Figure 3, Figure 4, Figure 5, Figure 6 and Figure 13, a target tracking method for cross-modal feature learning of RGB-D data based on a sparse deep denoising autoencoder includes the following steps:

[0038] Step 1: Construct an RGB-D cross-modal feature deep learning network with a sparsity-constrained denoising autoencoder;

[0039] Step 2: Collect an unlabeled RGB-D video sample set, which includes unlabeled RGB image samples and unlabeled Depth image samples;

[0040] Step 3: Train the RGB-D cross-modal feature deep learning network on the unlabeled RGB-D video sample set using an unsupervised learning method;

[0041] Step 4: For an RGB-D video sequence that contains the tracking target, select positive and negative samples of the tracking target in the first m frames as the initial template in the target sample library; the initial template includes the positive and negative RGB images of the trackin...
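Steps 1 and 3 do not spell out the network's layers, loss, or training schedule in this excerpt. The following is a minimal NumPy sketch of one common way to build and train a sparsity-constrained denoising autoencoder over concatenated RGB and Depth feature vectors without labels; it is not the patented network. Everything in it is an assumption for illustration: the class name `SparseDenoisingAE`, a single shared hidden layer, masking noise, a KL sparsity penalty with target activation `rho` and weight `beta`, weight decay `lam`, and the extra choice (borrowed from multimodal autoencoder practice) of occasionally blanking one whole modality so the shared code must reconstruct RGB from Depth and vice versa.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class SparseDenoisingAE:
    """Hypothetical one-hidden-layer sparse denoising autoencoder over
    concatenated [RGB | Depth] feature vectors. Illustrative sketch only."""

    def __init__(self, n_rgb, n_depth, n_hidden, rho=0.05, beta=3.0,
                 lam=1e-4, corruption=0.3, p_modal=0.2, seed=0):
        self.rng = np.random.default_rng(seed)
        n_vis = n_rgb + n_depth
        r = np.sqrt(6.0 / (n_vis + n_hidden + 1))
        self.W1 = self.rng.uniform(-r, r, (n_hidden, n_vis))
        self.b1 = np.zeros(n_hidden)
        self.W2 = self.rng.uniform(-r, r, (n_vis, n_hidden))
        self.b2 = np.zeros(n_vis)
        self.n_rgb = n_rgb
        self.rho, self.beta, self.lam = rho, beta, lam
        self.corruption, self.p_modal = corruption, p_modal

    def encode(self, X):
        """Shared cross-modal code for clean inputs (hypothetical tracking feature)."""
        return sigmoid(X @ self.W1.T + self.b1)

    def corrupt(self, X):
        # Masking noise: zero a random fraction of all input entries.
        Xc = X * (self.rng.random(X.shape) >= self.corruption)
        # Assumed cross-modal trick: blank an entire modality for some rows.
        u = self.rng.random(X.shape[0])
        Xc[u < self.p_modal / 2, :self.n_rgb] = 0.0       # hide RGB
        Xc[u > 1 - self.p_modal / 2, self.n_rgb:] = 0.0   # hide Depth
        return Xc

    def step(self, X, lr):
        """One full-batch gradient step; returns the loss before the update."""
        n = X.shape[0]
        Xc = self.corrupt(X)
        H = sigmoid(Xc @ self.W1.T + self.b1)
        Xr = sigmoid(H @ self.W2.T + self.b2)  # reconstruct the CLEAN input
        rho, rho_hat = self.rho, np.clip(H.mean(axis=0), 1e-6, 1 - 1e-6)

        recon = 0.5 * np.sum((Xr - X) ** 2) / n
        kl = np.sum(rho * np.log(rho / rho_hat)
                    + (1 - rho) * np.log((1 - rho) / (1 - rho_hat)))
        decay = 0.5 * self.lam * (np.sum(self.W1 ** 2) + np.sum(self.W2 ** 2))

        # Backpropagate recon + beta * KL + weight decay (W2 used before update).
        d_out = (Xr - X) * Xr * (1 - Xr) / n
        d_kl = self.beta * (-rho / rho_hat + (1 - rho) / (1 - rho_hat)) / n
        d_hid = (d_out @ self.W2 + d_kl) * H * (1 - H)
        self.W2 -= lr * (d_out.T @ H + self.lam * self.W2)
        self.b2 -= lr * d_out.sum(axis=0)
        self.W1 -= lr * (d_hid.T @ Xc + self.lam * self.W1)
        self.b1 -= lr * d_hid.sum(axis=0)
        return recon + self.beta * kl + decay


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    rgb = rng.random((200, 16))           # synthetic stand-in for RGB patches
    depth = 0.5 * rgb[:, :8] + 0.25       # synthetic Depth correlated with RGB
    X = np.hstack([rgb, depth])
    ae = SparseDenoisingAE(n_rgb=16, n_depth=8, n_hidden=12)
    losses = [ae.step(X, lr=0.5) for _ in range(300)]
    print(f"loss {np.mean(losses[:10]):.3f} -> {np.mean(losses[-10:]):.3f}")
    print("feature shape:", ae.encode(X).shape)
```

The arrays in the demo are synthetic placeholders, not data from the source. In a tracker built along these lines, the rows of `ae.encode(X)` would stand in for the learned cross-modal features; a deeper network could be obtained by stacking several such layers and training them greedily, which is again an assumption rather than something this excerpt states.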
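Step 4 does not state how the positive and negative samples are drawn in this excerpt. A scheme often used in tracking-by-detection, shown here only as a hypothetical sketch, jitters the known target box in each of the first m frames and labels each candidate by its overlap (IoU) with that box. The function names `iou` and `sample_templates`, the `(x, y, w, h)` box format, the IoU thresholds 0.7 and 0.3, and the jitter scales are all assumptions. If the RGB and Depth frames are pixel-aligned (also an assumption), the same boxes could crop both modalities.

```python
import numpy as np


def iou(box, boxes):
    """IoU between one (x, y, w, h) box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[0] + box[2], boxes[:, 0] + boxes[:, 2])
    y2 = np.minimum(box[1] + box[3], boxes[:, 1] + boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    union = box[2] * box[3] + boxes[:, 2] * boxes[:, 3] - inter
    return inter / union


def sample_templates(gt_boxes, n_cand=500, pos_thr=0.7, neg_thr=0.3,
                     sigma=(0.1, 0.5), seed=0):
    """Hypothetical rule: for each of the first m frames, jitter the known
    target box and split candidates into positive / negative boxes by IoU.
    Returns two arrays whose rows are (frame_index, x, y, w, h)."""
    rng = np.random.default_rng(seed)
    pos, neg = [], []
    for frame, gt in enumerate(gt_boxes):
        gt = np.asarray(gt, dtype=float)
        # Half the candidates get small jitter (near the target), half large
        # jitter (mostly background). Boxes are not clipped to the image here.
        scale = np.where(rng.random(n_cand) < 0.5, sigma[0], sigma[1])[:, None]
        shift = rng.normal(size=(n_cand, 2)) * scale * gt[2:]
        cand = np.hstack([gt[:2] + shift, np.tile(gt[2:], (n_cand, 1))])
        overlap = iou(gt, cand)
        pos += [(frame, *b) for b in cand[overlap >= pos_thr]]
        neg += [(frame, *b) for b in cand[overlap <= neg_thr]]
    return np.array(pos), np.array(neg)


if __name__ == "__main__":
    gt = [(50, 40, 30, 60), (52, 41, 30, 60), (55, 43, 30, 60)]  # m = 3, made up
    pos, neg = sample_templates(gt)
    print(len(pos), "positive boxes,", len(neg), "negative boxes")
```

Candidates whose IoU falls between the two thresholds are discarded as ambiguous, a common design choice that keeps the positive and negative templates well separated.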
