Prosthetic hand control method and device based on facial expression-driven EEG signals

The technology relates to facial expressions and control methods and is applied in the field of intelligent robots. It addresses the problems of low control accuracy and limited functionality. Its effects are an added side-swing degree of freedom for the thumb, more flexible use of space, and high precision.

Embodiment Construction

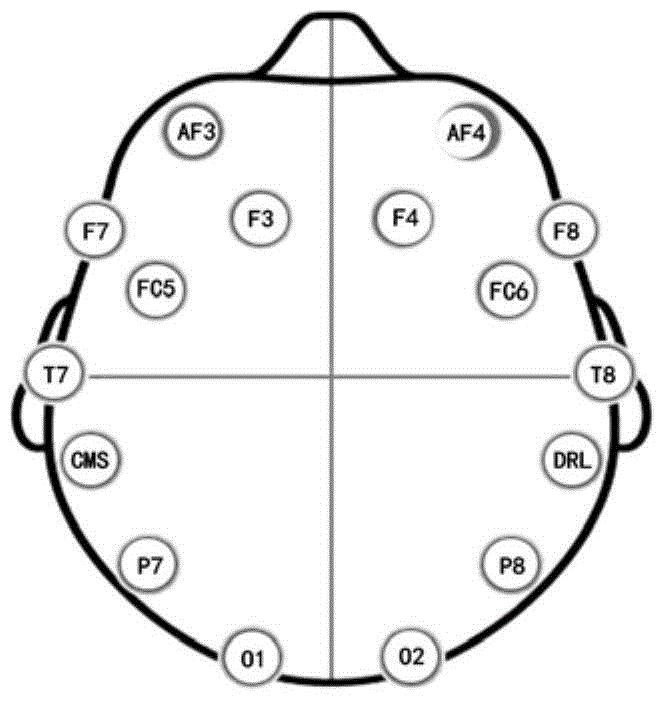

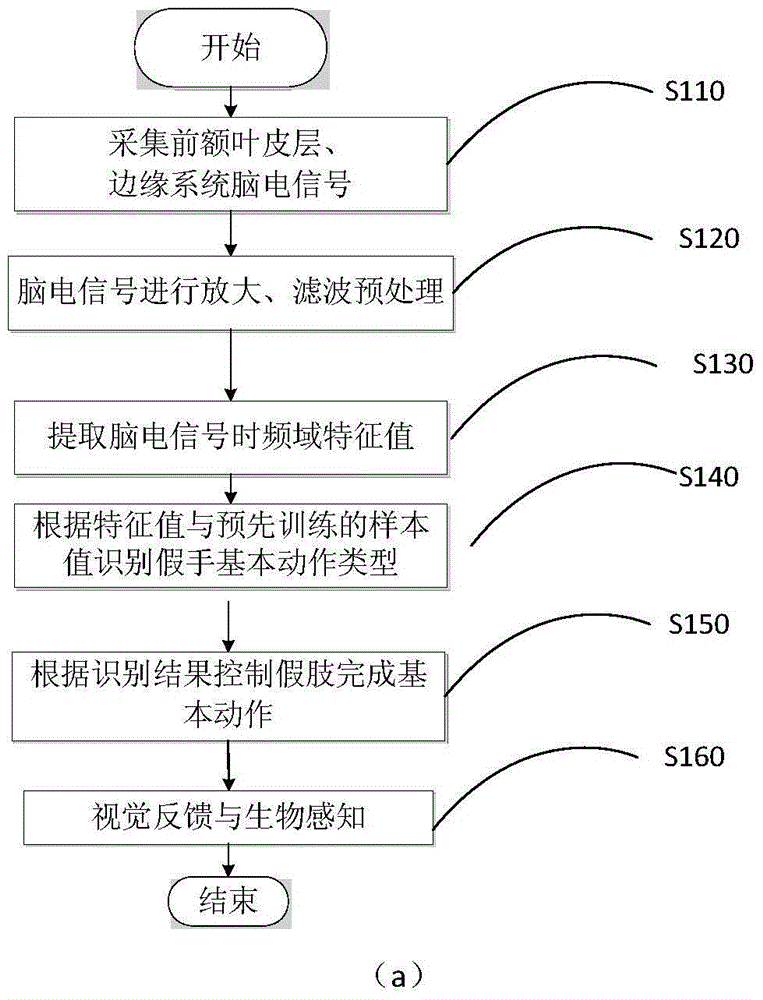

[0026] Referring to Figure 1 and Figure 3(b), the prosthetic hand control device of the present invention includes an EEG signal acquisition module 310 placed on the subject's head, preferably a portable 16-channel wireless EEG acquisition device. It collects EEG signals at positions FC5, FC6, F7 and F8 of the international standard 10/20 system, over the prefrontal cortex and the limbic system. The EEG signal acquisition module amplifies and filters the collected EEG signals and sends them to the portable signal processing module 330 through the Bluetooth transmission module 320.
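The channel selection described above can be sketched as follows. This is a minimal illustration, not the patent's implementation: the 16-channel layout in `CHANNEL_NAMES` and the helper `select_channels` are hypothetical, since the source names only FC5, FC6, F7 and F8.

```python
# Hypothetical 16-channel montage (an assumption for illustration);
# the source names only FC5, FC6, F7 and F8.
CHANNEL_NAMES = [
    "AF3", "F7", "F3", "FC5", "T7", "P7", "O1", "O2",
    "P8", "T8", "FC6", "F4", "F8", "AF4", "Fz", "Pz",
]

# Electrodes named in the source as the ones of interest.
TARGET_CHANNELS = ["FC5", "FC6", "F7", "F8"]


def select_channels(frame, names=CHANNEL_NAMES, targets=TARGET_CHANNELS):
    """Return the target-electrode samples from one multi-channel frame.

    `frame` holds one sample per channel, in the same order as `names`.
    """
    if len(frame) != len(names):
        raise ValueError("frame length must match the number of channels")
    index = {name: i for i, name in enumerate(names)}
    return [frame[index[target]] for target in targets]


# Example: a dummy frame whose sample value equals its channel index.
frame = [float(i) for i in range(16)]
selected = select_channels(frame)
```

In this sketch the four retained values come back in the order FC5, FC6, F7, F8, so later stages can rely on a fixed feature layout.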

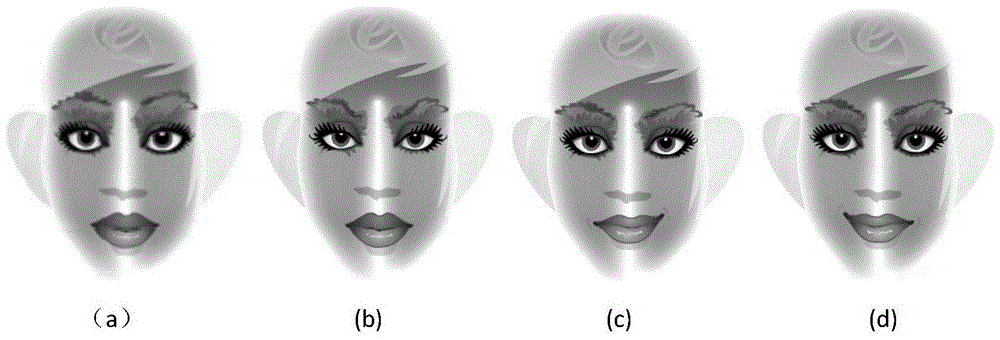

[0027] When the device starts to work, the EEG signal acquisition module collects the EEG signals driven by the subject's facial expressions, amplifies and filters them, and then transmits them to the signal processing module over Bluetooth. The signal processing module performs feature extraction and pattern recognition on the EEG signals, the recognitio...
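The feature-extraction and pattern-recognition stage could look roughly like the sketch below. The source does not say which features or classifier are used, so everything here is an assumption: RMS amplitude per channel stands in for the feature, a nearest-centroid rule stands in for the classifier, and the labels `"rest"` and `"grasp"` with their centroid values are made up for illustration.

```python
import math

# Electrodes named in the source; the order fixes the feature layout.
CHANNELS = ("FC5", "FC6", "F7", "F8")


def rms(samples):
    """Root-mean-square amplitude of one channel's window of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def extract_features(window):
    """Map a window (channel name -> list of samples) to one RMS per channel."""
    return [rms(window[channel]) for channel in CHANNELS]


def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


# Hypothetical centroids; in practice they would come from calibration data.
centroids = {
    "rest": [1.0, 1.0, 1.0, 1.0],
    "grasp": [5.0, 5.0, 5.0, 5.0],
}

# Dummy windows: low-amplitude and high-amplitude activity on every channel.
quiet = {channel: [1.0, -1.0, 1.0, -1.0] for channel in CHANNELS}
active = {channel: [5.0, -5.0, 5.0, -5.0] for channel in CHANNELS}

quiet_label = classify(extract_features(quiet), centroids)
active_label = classify(extract_features(active), centroids)
```

A nearest-centroid rule is chosen here only because it is short and has no dependencies; any trained classifier could take its place without changing the surrounding flow.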
