Distributed cache architecture with task distribution function and cache method

A distributed cache and task distribution technology for the field of big data processing. It addresses the problem that traditional databases cannot cope with processing and accessing large data volumes, and it achieves availability, improved resource utilization, and automatic balancing of data partitions.

Examples

Embodiment Construction

[0045] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

[0046] The present invention is a distributed cache architecture with a task distribution function. As shown in Figure 1, it includes a MySQL relational database 2, a Redis distributed cache system 3, and a distributed task scheduling system 1 connected to the client through the network.

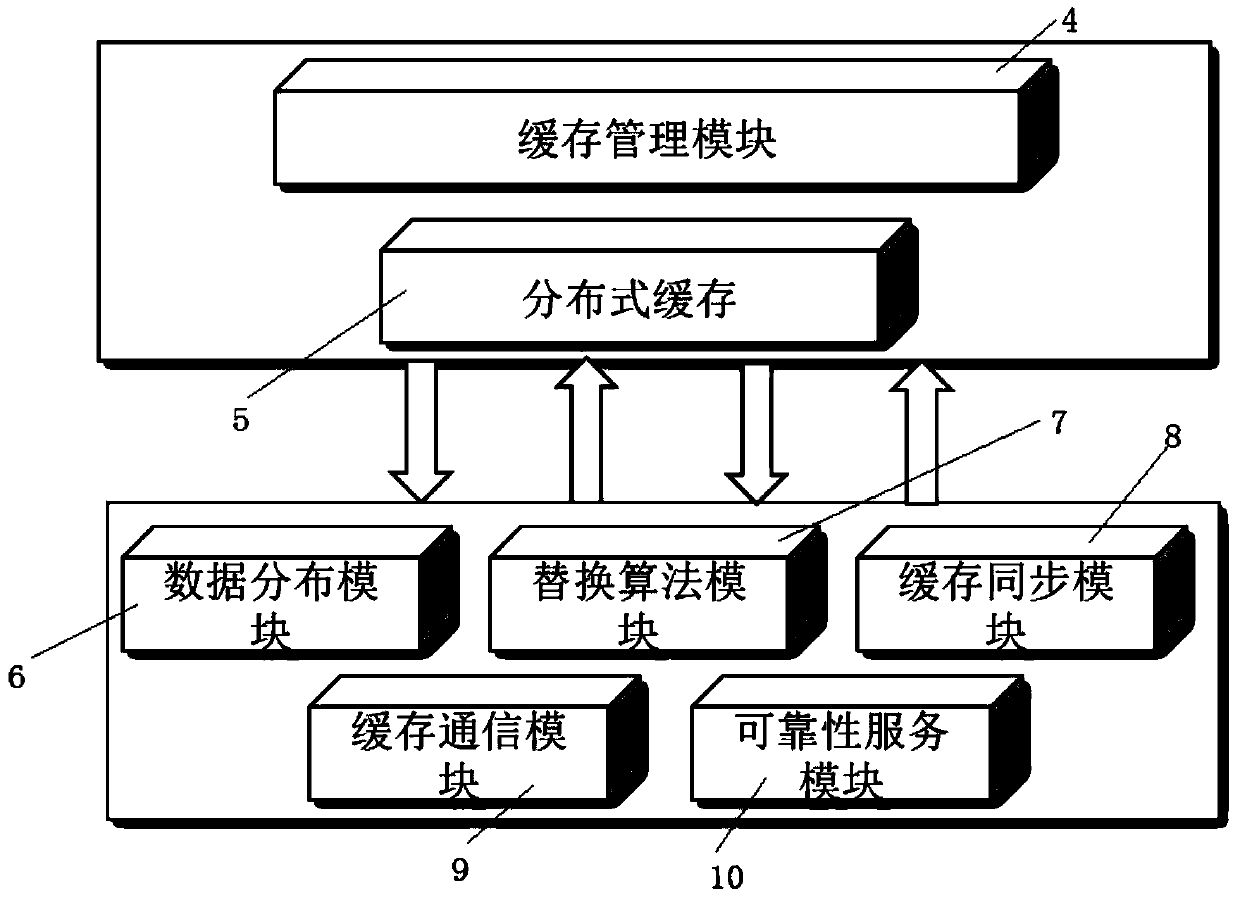
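
To make the relationship between the cache system and the relational database more concrete, the following is a minimal sketch of a read path in which a cache layer sits in front of a database. It is an illustration only: the source does not state the read or write policy, so the read-through behavior shown here is an assumption, and `BackingStore` and `CacheLayer` are hypothetical in-memory stand-ins for the MySQL database 2 and the Redis distributed cache system 3 rather than real client code.

```python
from typing import Dict, Optional


class BackingStore:
    """Hypothetical stand-in for the relational database (MySQL 2)."""

    def __init__(self, rows: Dict[str, str]) -> None:
        self._rows = dict(rows)
        self.reads = 0  # counts how often the database is actually queried

    def query(self, key: str) -> Optional[str]:
        self.reads += 1
        return self._rows.get(key)


class CacheLayer:
    """Hypothetical stand-in for the Redis distributed cache system 3."""

    def __init__(self, store: BackingStore) -> None:
        self._store = store
        self._cache: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        # Serve from the cache when the key is already present.
        if key in self._cache:
            return self._cache[key]
        # On a miss, fall back to the database and remember the result.
        # (Assumed policy: the source does not specify one.)
        value = self._store.query(key)
        if value is not None:
            self._cache[key] = value
        return value


store = BackingStore({"user:1": "alice"})
cache = CacheLayer(store)
first = cache.get("user:1")   # miss: the database is queried
second = cache.get("user:1")  # hit: served from the cache
```

In this sketch the second lookup never reaches the database, which is the effect a cache tier is generally meant to have when data volumes exceed what the database alone can serve.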

[0047] As shown in Figure 2, the Redis distributed cache system 3 includes a cache management module 4, a distributed cache module 5, a data distribution module 6, a replacement algorithm module 7, a cache synchronization module 8, a cache communication module 9, and a reliability service module 10, connected in sequence.

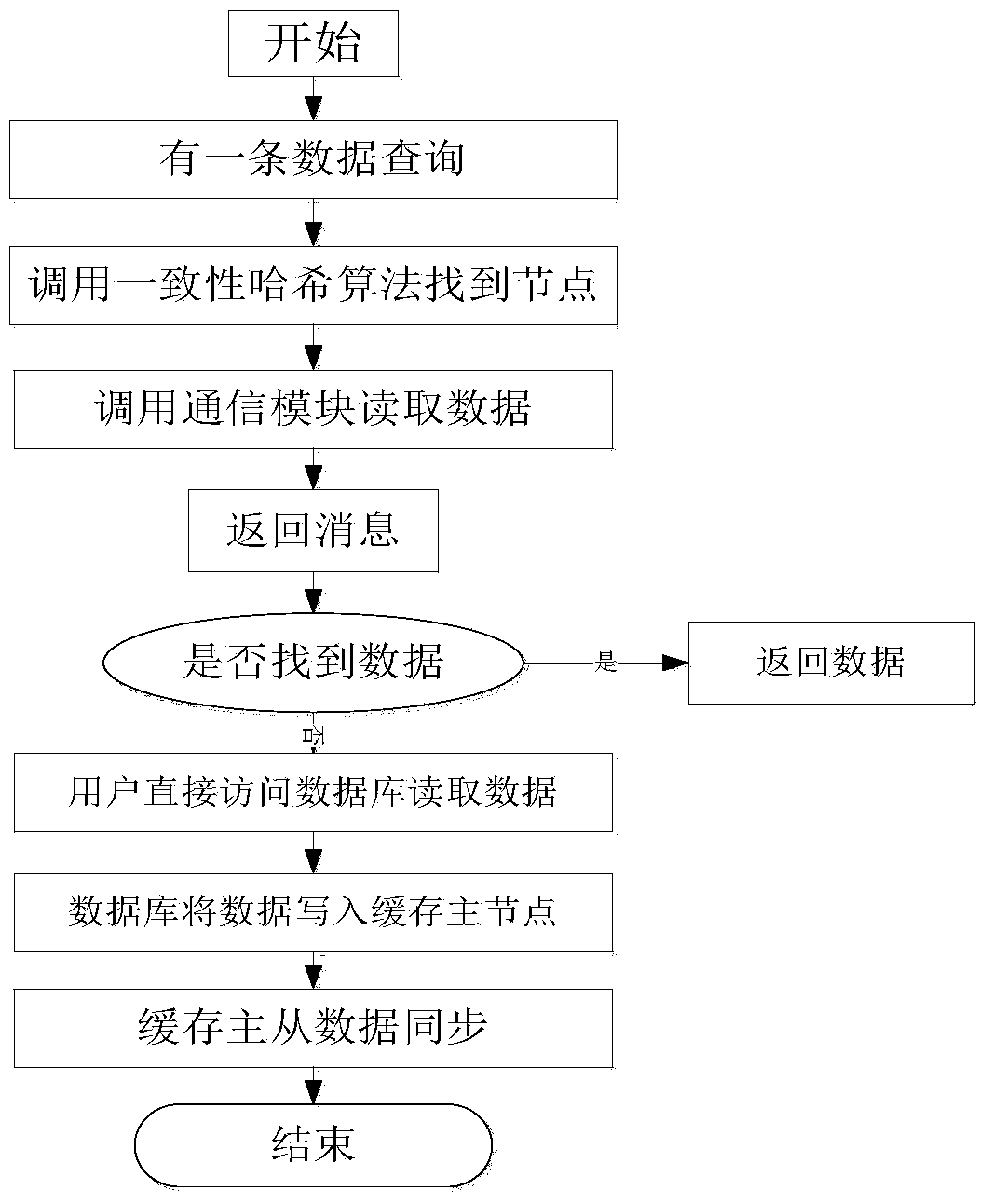

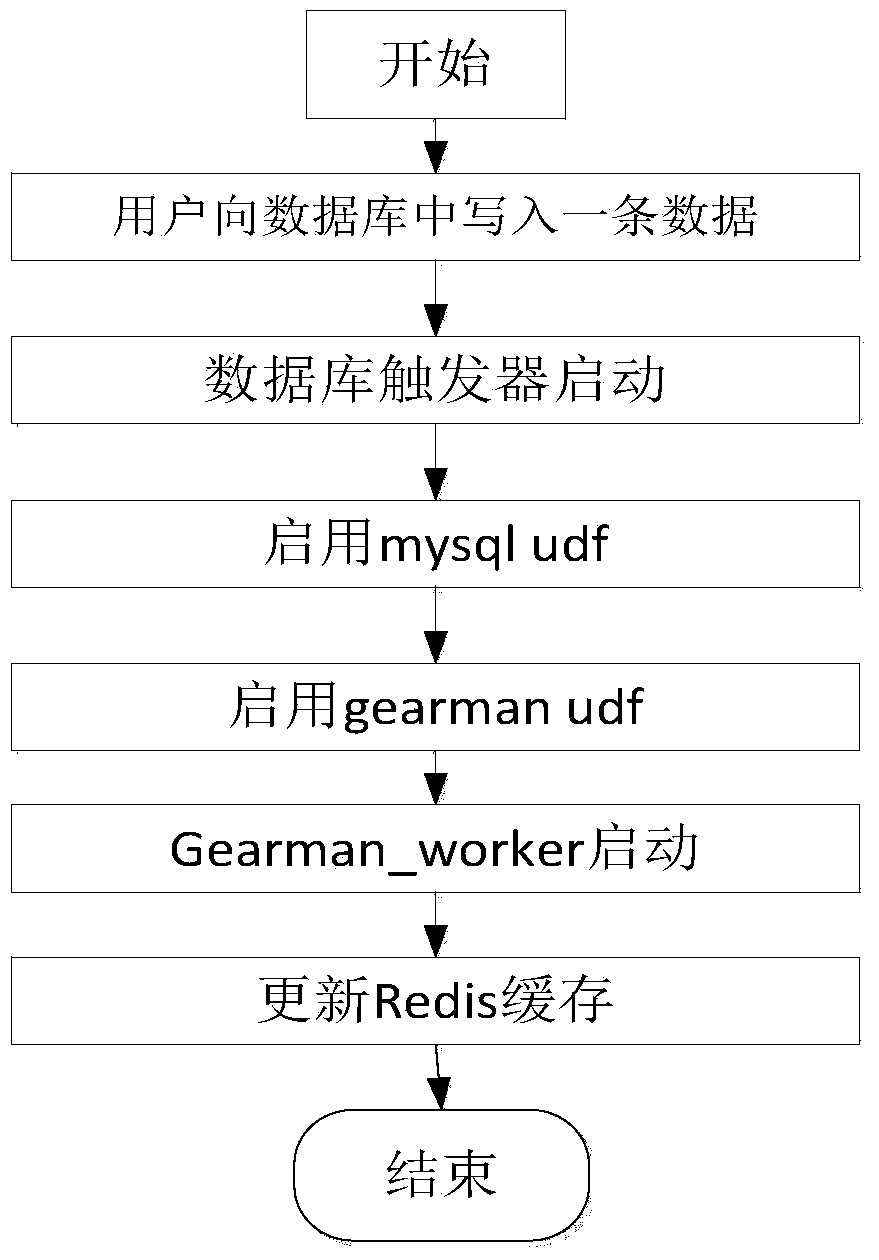
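
As a rough illustration of what a data distribution module and a replacement algorithm module might do, the sketch below pairs a consistent-hash ring with a least-recently-used (LRU) cache. Both techniques are assumptions chosen for illustration; the source names the modules but does not say which algorithms they use. The class names `HashRing` and `LRUCache`, the node names, and the `replicas` parameter are all hypothetical.

```python
import bisect
import hashlib
from collections import OrderedDict
from typing import List, Optional


def _hash(value: str) -> int:
    """Map a string to a large integer position on the ring."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Assumed data distribution scheme: consistent hashing with virtual nodes."""

    def __init__(self, nodes: List[str], replicas: int = 100) -> None:
        # Each physical node is placed on the ring `replicas` times
        # so that keys spread more evenly across nodes.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key: str) -> str:
        # Pick the first ring point clockwise from the key's hash.
        index = bisect.bisect(self._points, _hash(key)) % len(self._points)
        return self._ring[index][1]


class LRUCache:
    """Assumed replacement policy: evict the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._items: "OrderedDict[str, str]" = OrderedDict()

    def get(self, key: str) -> Optional[str]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, value: str) -> None:
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self._capacity:
            self._items.popitem(last=False)  # drop the oldest entry


nodes = ["node-a", "node-b", "node-c"]
ring = HashRing(nodes)
owner = ring.node_for("user:1")  # the same key always maps to the same node

lru = LRUCache(capacity=2)
lru.put("a", "1")
lru.put("b", "2")
lru.get("a")       # touch "a" so that "b" becomes the oldest entry
lru.put("c", "3")  # exceeds capacity, so "b" is evicted
```

With consistent hashing, adding or removing a node remaps only the keys adjacent to that node's ring points, which is one common way to keep data partitions balanced as the cluster changes.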

[0048] The working principle of the cache structure of the present invention is as follows: first, the server side of the distributed task distribution system is started; second, the user writes a specific task processing module as a ...
