Reference data access management method and device

A reference data access management technology, applied in the field of video coding and decoding, that addresses the problems of existing schemes being unsuitable for H.264 macroblock-parallel encoders, being difficult to design and verify, and being complicated in design. It thereby reduces on-chip RAM overhead, keeps hardware overhead low, and eliminates the effect of buffer block storage.

Embodiment Construction

[0037] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

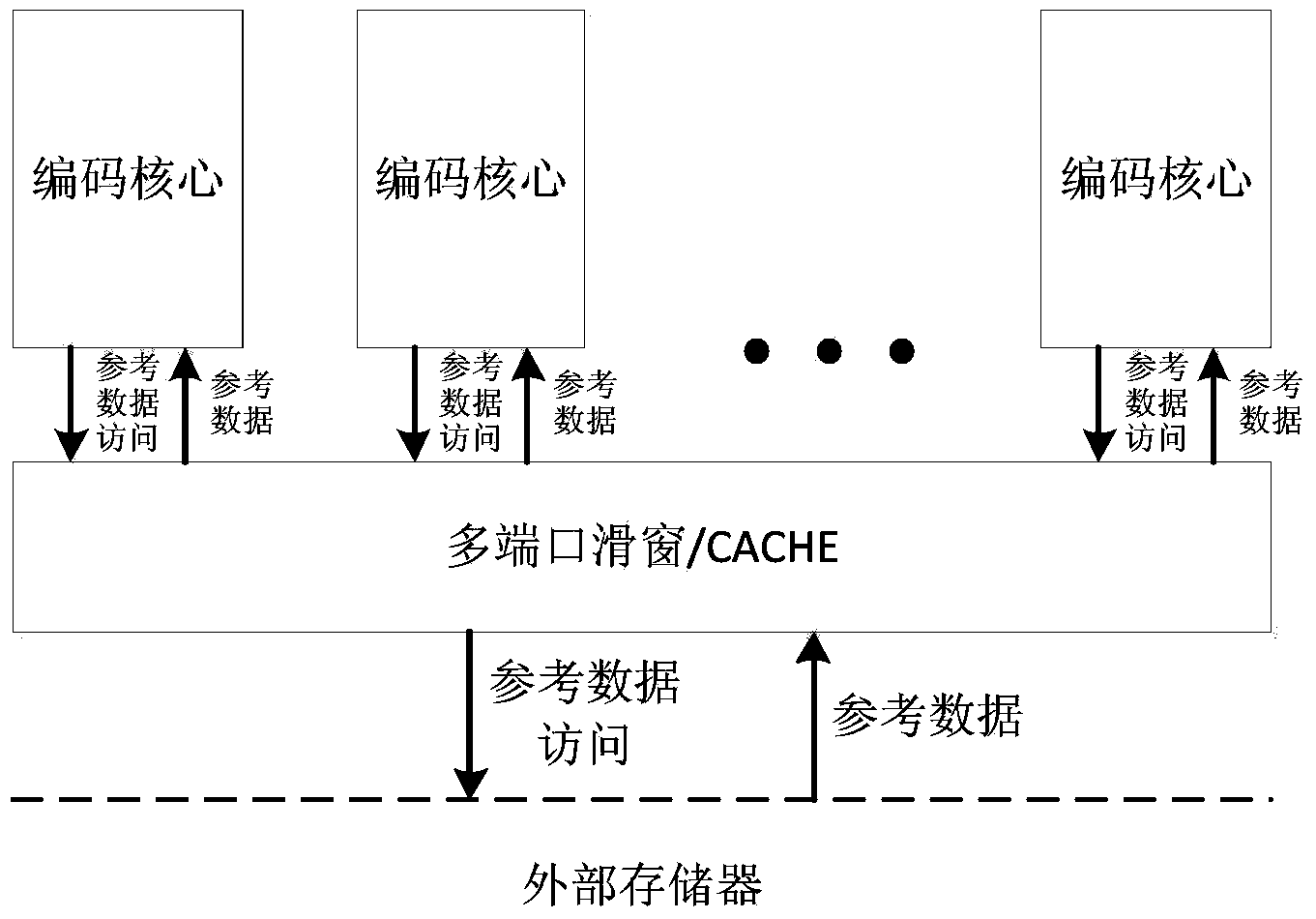

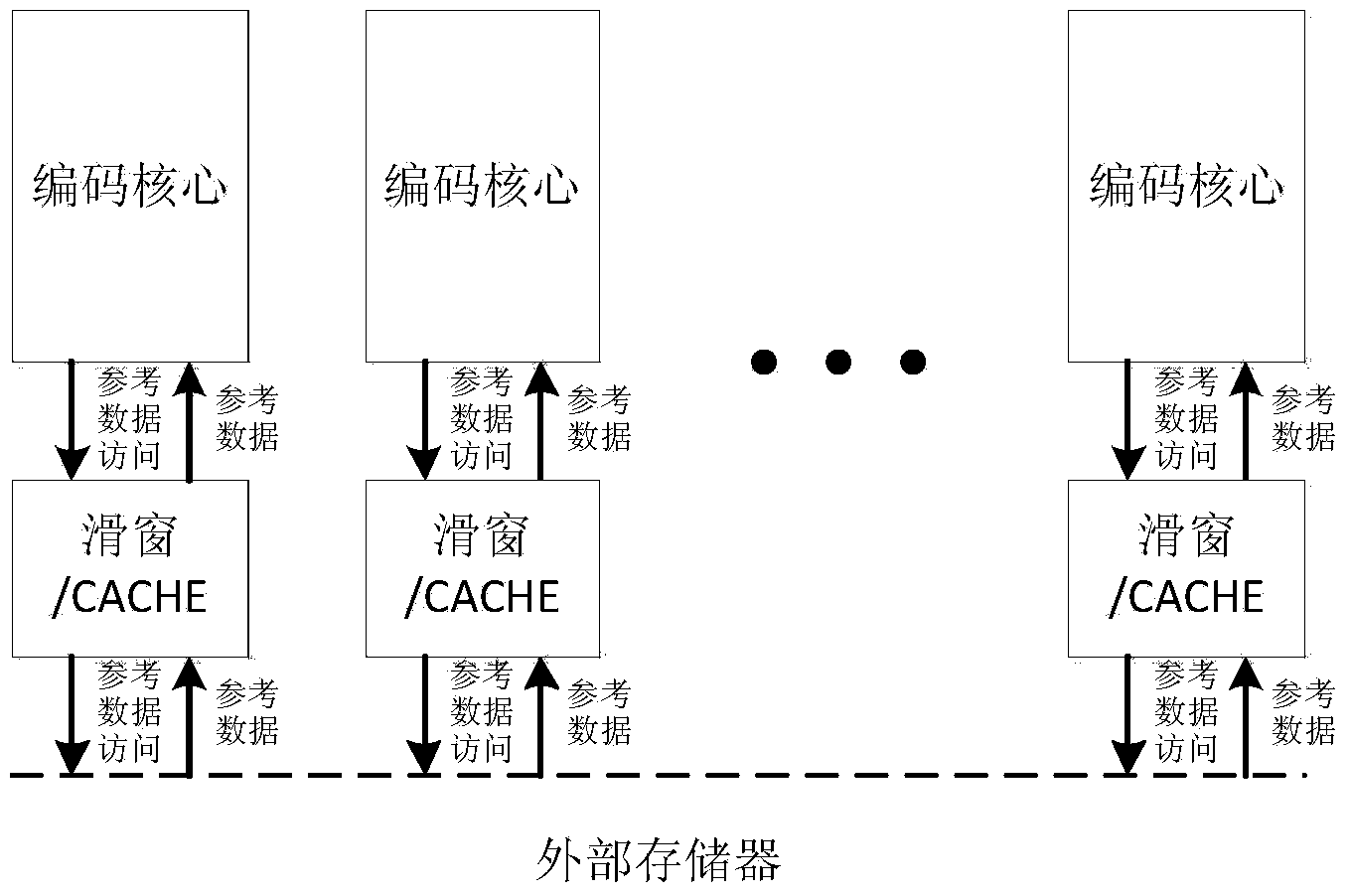

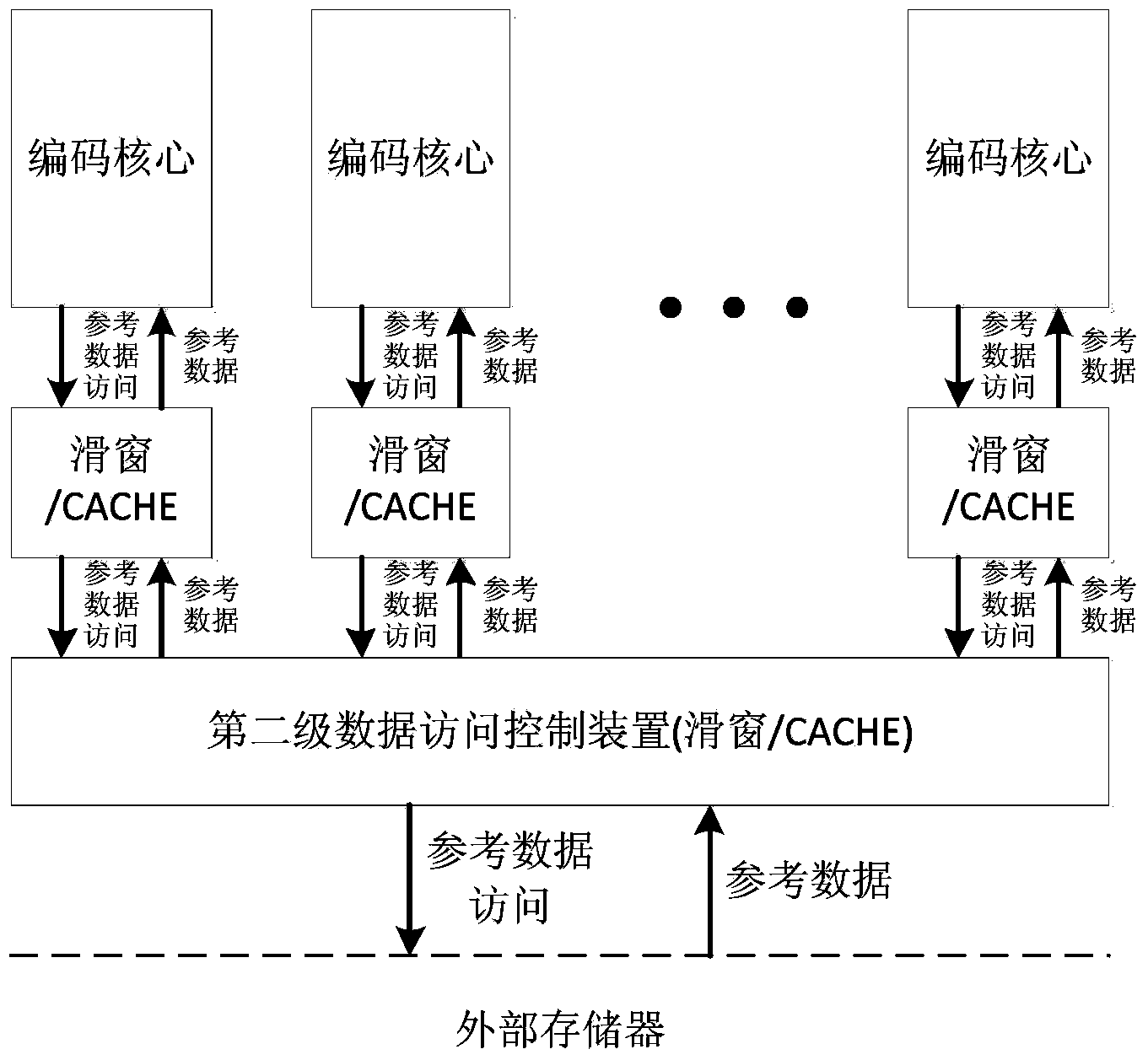

[0038] As shown in Figure 4, the present invention consists of three parts. The first is multiple first-level cache units 101 (L1 cache), one exclusive to each encoding core 100. The second is a second-level cache unit 102 (L2 cache) shared by all encoding cores. The third is a bus 103 connecting the two levels.
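
The arrangement in Figure 4 can be illustrated with a small behavioral model. The following is a minimal Python sketch, not the patented design, and it rests on several assumptions. Both cache levels use LRU replacement. Each buffer block is treated as an opaque key, such as a (bx, by) block coordinate. The bus 103 is modeled only as a transfer counter. The class names `L1Cache`, `L2Cache` and `Bus`, and the helper `build_hierarchy`, are hypothetical.

```python
from collections import OrderedDict


class L2Cache:
    """Hypothetical model of the shared second-level cache (unit 102)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.blocks = OrderedDict()  # block key -> True, kept in LRU order
        self.external_fetches = 0    # misses that must read off-chip memory

    def read_block(self, key):
        if key in self.blocks:
            self.blocks.move_to_end(key)  # hit: mark most recently used
        else:
            self.external_fetches += 1    # miss: fetch from external memory
            self.blocks[key] = True
            if len(self.blocks) > self.capacity:
                self.blocks.popitem(last=False)  # evict least recently used
        return key


class Bus:
    """Hypothetical model of bus 103: forwards L1 misses and counts transfers."""

    def __init__(self, l2):
        self.l2 = l2
        self.transfers = 0

    def request(self, core_id, key):
        self.transfers += 1
        return self.l2.read_block(key)


class L1Cache:
    """Hypothetical model of one core's private first-level cache (unit 101)."""

    def __init__(self, core_id, bus, capacity):
        self.core_id = core_id
        self.bus = bus
        self.capacity = capacity
        self.blocks = OrderedDict()

    def read_block(self, key):
        if key in self.blocks:
            self.blocks.move_to_end(key)  # served locally, no bus traffic
            return key
        self.bus.request(self.core_id, key)  # miss: ask the shared L2
        self.blocks[key] = True
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)
        return key


def build_hierarchy(num_cores, l1_capacity, l2_capacity):
    """One private L1 per encoding core, all sharing one L2 over one bus."""
    l2 = L2Cache(l2_capacity)
    bus = Bus(l2)
    l1s = [L1Cache(i, bus, l1_capacity) for i in range(num_cores)]
    return l1s, bus, l2


# Two cores working on neighboring macroblocks both need block (0, 0).
l1s, bus, l2 = build_hierarchy(num_cores=2, l1_capacity=4, l2_capacity=16)
l1s[0].read_block((0, 0))  # L1 miss, L2 miss -> one external fetch
l1s[1].read_block((0, 0))  # L1 miss, L2 hit  -> no external fetch
l1s[0].read_block((0, 0))  # L1 hit           -> no bus transfer
print(bus.transfers, l2.external_fetches)  # 2 1
```

The sketch shows why the L2 is shared. A block fetched from external memory on behalf of one core stays in the L2, so when a neighboring core misses on the same block, the bus serves it without a second off-chip read.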

[0039] The L1 cache unit 101 is a first multi-port 2D cache. It is connected to the L2 cache unit 102 through the bus 103 and to the motion estimation module of the corresponding encoding core 100. It supplies reference data directly to the motion estimation module of that encoding core 100. It requests reference data from outside by caching two-dimensional image blocks of a specified buffer block size. In this way, the L1 cache unit 101 converts reference data accesses from inside the encoder into an external ima...
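
The conversion described in [0039] can be sketched as follows. Motion estimation reads arbitrary reference regions, and the L1 cache turns those reads into requests for whole two-dimensional buffer blocks. This is a minimal Python illustration under several assumptions:

- The cache is single-port with unbounded capacity, so there is no eviction. The multi-port behavior is not modeled.
- A hypothetical `fetch_block` callback stands in for a block request sent to the L2 cache over the bus.
- Coordinates are already clamped to the frame.
- The 4x4 buffer block is chosen only to keep the example small, because the text here does not state the block size.

`covering_blocks`, `Block2DCache` and `make_frame_fetch` are hypothetical names.

```python
def covering_blocks(x, y, w, h, bw, bh):
    """Return the (bx, by) indices of every bw x bh buffer block that
    overlaps the w x h reference region whose top-left pixel is (x, y)."""
    bx0, by0 = x // bw, y // bh
    bx1, by1 = (x + w - 1) // bw, (y + h - 1) // bh
    return [(bx, by) for by in range(by0, by1 + 1) for bx in range(bx0, bx1 + 1)]


class Block2DCache:
    """Hypothetical 2D cache that stores reference data as whole blocks."""

    def __init__(self, bw, bh, fetch_block):
        self.bw, self.bh = bw, bh
        self.fetch_block = fetch_block  # (bx, by) -> bh rows of bw pixels
        self.resident = {}              # (bx, by) -> cached block data

    def read_region(self, x, y, w, h):
        """Serve a pixel-region read; return (pixel rows, blocks fetched)."""
        misses = []
        for key in covering_blocks(x, y, w, h, self.bw, self.bh):
            if key not in self.resident:
                self.resident[key] = self.fetch_block(key)  # external request
                misses.append(key)
        rows = []
        for py in range(y, y + h):
            row = []
            for px in range(x, x + w):
                blk = self.resident[(px // self.bw, py // self.bh)]
                row.append(blk[py % self.bh][px % self.bw])
            rows.append(row)
        return rows, misses


def make_frame_fetch(bw, bh):
    """Test frame whose pixel at (px, py) has value py * 100 + px."""
    def fetch(key):
        bx, by = key
        return [[(by * bh + r) * 100 + (bx * bw + c) for c in range(bw)]
                for r in range(bh)]
    return fetch


cache = Block2DCache(4, 4, make_frame_fetch(4, 4))
rows, misses = cache.read_region(2, 1, 4, 2)
print(misses)  # [(0, 0), (1, 0)]
print(rows)    # [[102, 103, 104, 105], [202, 203, 204, 205]]
rows, misses = cache.read_region(3, 0, 2, 2)
print(misses)  # [] (both blocks already resident)
```

The encoder side only ever asks for pixel regions. The external side only ever sees whole-block requests, each issued once while the block remains resident.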

© 2025 PatSnap. All rights reserved.