Method for predicting the on-demand volume of programs and scheduling their storage

A technology for on-demand volume prediction and storage scheduling, applied in the field of communications. It addresses the low accuracy and low efficiency of predicting popular programs, and improves both the accuracy and the efficiency of prediction.

Examples

Embodiment 1

[0024] The BP neural network, also called the error backpropagation neural network, has not only input nodes and output nodes but also one or more layers of hidden nodes. In theory, a three-layer BP network with only one hidden layer can approximate any Boolean function. Because the BP neural network approximates nonlinear functions well and trains quickly, using it to predict the on-demand volume of programs can achieve good results.
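To make the error backpropagation step concrete, here is a minimal pure-Python sketch of a three-layer BP network (input layer, one sigmoid hidden layer, one sigmoid output node) trained by online gradient descent on squared error. It is not the implementation from this patent: the class and function names, the sigmoid activations, the weight initialisation, the learning rate and the XOR example are all assumptions chosen for illustration. XOR is used because it is a Boolean function that a network without a hidden layer cannot represent.

```python
import math
import random
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class ThreeLayerBP:
    """Input layer -> one sigmoid hidden layer -> one sigmoid output node."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # w1[j][i] connects input i to hidden node j; w2[j] connects hidden j to the output.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x: Sequence[float]) -> Tuple[List[float], float]:
        """Return the hidden activations and the output for one input vector."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x: Sequence[float], target: float, lr: float) -> None:
        """One online backpropagation update for the loss 0.5 * (y - target)**2."""
        h, y = self.forward(x)
        # Error signal at the output node (the sigmoid derivative is y * (1 - y)).
        delta_out = (y - target) * y * (1.0 - y)
        # Propagate the error back to each hidden node through the current w2.
        delta_h = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(self.w2, h)]
        # Gradient-descent updates, output layer first, then hidden layer.
        self.w2 = [w - lr * delta_out * hj for w, hj in zip(self.w2, h)]
        self.b2 -= lr * delta_out
        for j, dj in enumerate(delta_h):
            self.w1[j] = [w - lr * dj * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * dj

    def mse(self, data: List[Sample]) -> float:
        return sum((self.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


def fit(net: ThreeLayerBP, data: List[Sample], epochs: int, lr: float) -> None:
    for _ in range(epochs):
        for x, t in data:
            net.train_step(x, t, lr)


if __name__ == "__main__":
    xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    net = ThreeLayerBP(n_in=2, n_hidden=4, seed=1)
    print("MSE before training:", net.mse(xor))
    fit(net, xor, epochs=5000, lr=0.5)
    print("MSE after training: ", net.mse(xor))
```

Running the module prints the mean squared error on the four XOR patterns before and after training; the exact values depend on the seed and learning rate.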

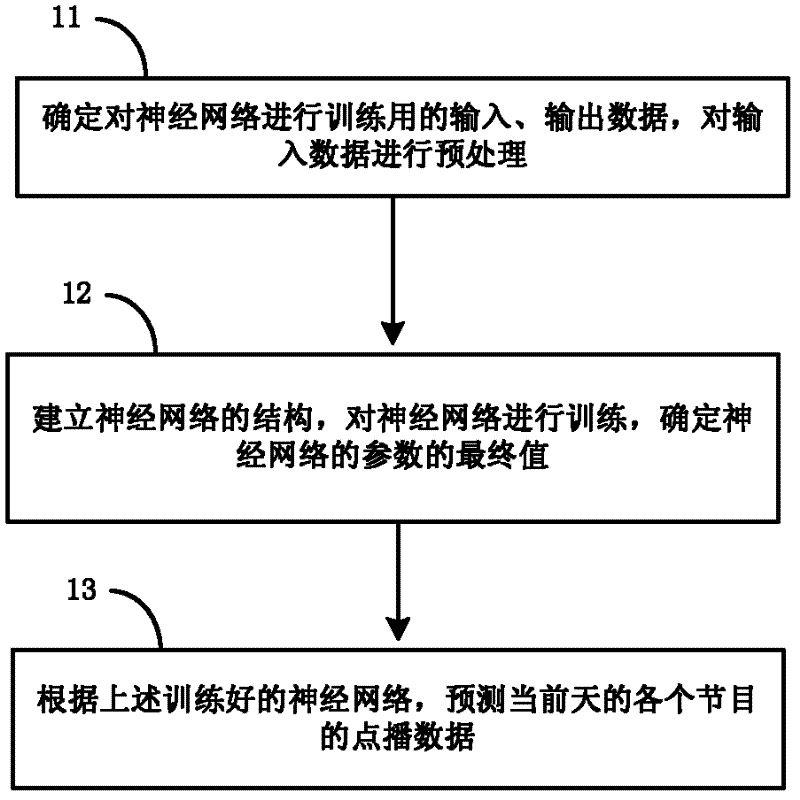

[0025] The processing flow of the neural-network-based method for predicting the on-demand volume of programs provided by this embodiment is shown in Figure 1 and includes the following processing steps:

[0026] Step 11. Determine input and output data for training the neural network, and preprocess the input data.

[0027] For hot program prediction, since a predicted program falls into only two classes (hot or not hot), the output data of the neural network in this embodiment...
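The text is cut off before it specifies the preprocessing, so the following is only a hedged sketch of what Step 11 could look like: raw daily on-demand counts are min-max scaled into [0, 1] using the minimum and maximum over all programs, and the output is a binary hot/not-hot label. The function name, the dictionary layout and the `hot_threshold` cut-off are hypothetical; the source does not say how "hot" is defined or which normalisation is used.

```python
from typing import Dict, List, Tuple


def build_samples(history: Dict[str, List[float]],
                  specified_day: Dict[str, float],
                  hot_threshold: float) -> Dict[str, Tuple[List[float], int]]:
    """Map each program to (scaled input vector, hot/not-hot label).

    history[p]:       counts of program p over the days before the specified day
    specified_day[p]: count of program p on the specified day
    hot_threshold:    hypothetical cut-off; a count >= threshold is labelled hot (1)
    """
    all_counts = [c for counts in history.values() for c in counts]
    lo, hi = min(all_counts), max(all_counts)
    span = (hi - lo) or 1.0  # avoid division by zero when every count is equal
    samples = {}
    for program, counts in history.items():
        # Global (not per-program) min-max scaling keeps programs comparable.
        x = [(c - lo) / span for c in counts]
        y = 1 if specified_day[program] >= hot_threshold else 0
        samples[program] = (x, y)
    return samples


if __name__ == "__main__":
    demo = build_samples({"a": [10.0, 20.0, 30.0], "b": [0.0, 5.0, 10.0]},
                         {"a": 40.0, "b": 5.0}, hot_threshold=30.0)
    print(demo)
```

For the demo data the minimum and maximum counts are 0 and 30, so program `a` becomes `[1/3, 2/3, 1.0]` with label 1 and program `b` becomes `[0.0, 1/6, 1/3]` with label 0. Scaling per program instead would erase the difference in absolute popularity that the hot/not-hot decision depends on.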

Embodiment 2

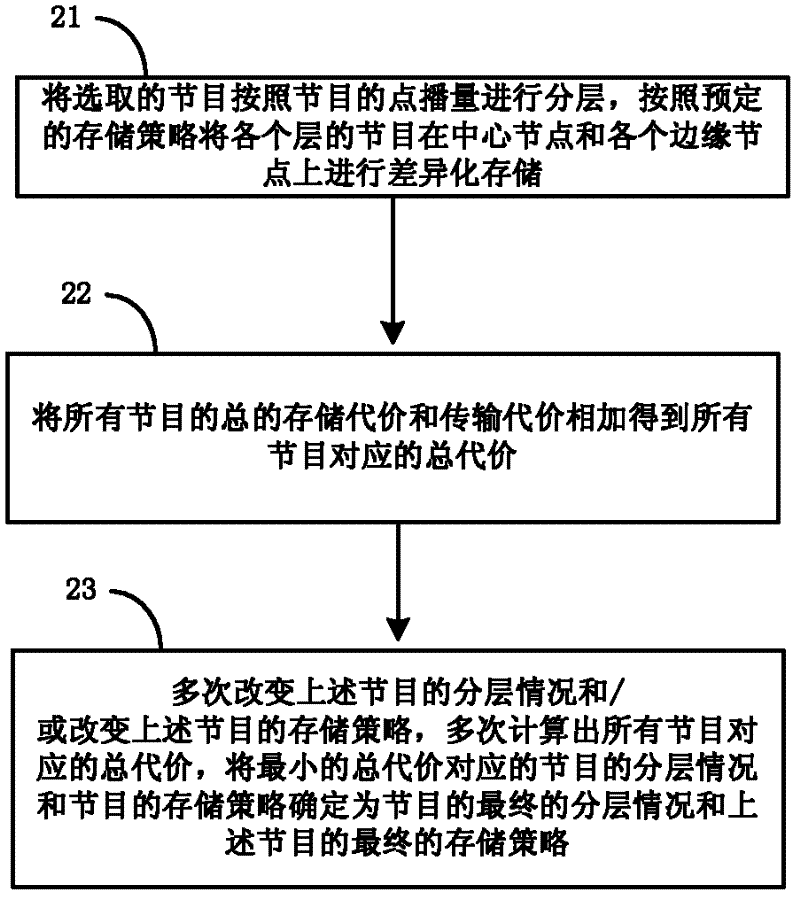

[0071] Based on the predicted on-demand volume of each program for the current day, the processing flow of the method provided by this embodiment for storing and scheduling programs across multiple nodes is shown in Figure 2 and includes the following steps:

[0072] Step 21: Layer the selected programs according to the on-demand volume of the programs, and store the programs of each layer in a differentiated manner on the central node and each edge node according to a predetermined storage strategy.

[0073] Sort all programs in descending order of on-demand volume, select the top N programs, and divide these N programs into L layers by on-demand volume. The layer thresholds are $T_1 > T_2 > T_3 > \dots > T_L$, and the numbers of programs stored in the layers are $H_1, H_2, H_3, \dots, H_L$ respectively. When the thresholds $T_1, T_2, T_3, \dots, T_L$ take different values, $H_1, H_2, H_3, \dots$ ...
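A minimal sketch of the layering in Step 21, assuming each program is placed in the first layer $i$ whose threshold $T_i$ its on-demand volume reaches, so that $H_i$ is simply the size of layer $i$. The handling of top-N programs below $T_L$ (left unlayered) is an assumption, and the rule in `place` is a hypothetical example of a "predetermined storage strategy" (every layered program on the central node, only the top `edge_layers` layers replicated to each edge node); the source does not state the actual strategy, and all names here are illustrative.

```python
from typing import Dict, List


def layer_programs(volumes: Dict[str, float], top_n: int,
                   thresholds: List[float]) -> List[List[str]]:
    """Split the top-N programs into L layers using thresholds T_1 > T_2 > ... > T_L."""
    if any(a <= b for a, b in zip(thresholds, thresholds[1:])):
        raise ValueError("thresholds must be strictly decreasing")
    # Rank by predicted on-demand volume, highest first, and keep the top N.
    ranked = sorted(volumes, key=volumes.get, reverse=True)[:top_n]
    layers: List[List[str]] = [[] for _ in thresholds]
    for program in ranked:
        for i, t in enumerate(thresholds):
            if volumes[program] >= t:  # first layer whose threshold is reached
                layers[i].append(program)
                break
    return layers


def place(layers: List[List[str]], edge_nodes: List[str],
          edge_layers: int = 1) -> Dict[str, List[str]]:
    """Hypothetical strategy: central node stores every layer, edge nodes only the hottest ones."""
    placement = {"central": [p for layer in layers for p in layer]}
    for node in edge_nodes:
        placement[node] = [p for layer in layers[:edge_layers] for p in layer]
    return placement


if __name__ == "__main__":
    volumes = {"a": 900, "b": 500, "c": 120, "d": 40, "e": 5}
    layers = layer_programs(volumes, top_n=4, thresholds=[800, 100, 10])
    print(layers, [len(layer) for layer in layers])
    print(place(layers, ["edge1", "edge2"]))
```

With `thresholds=[800, 100, 10]` and `top_n=4` on the example volumes, the layers are `[['a'], ['b', 'c'], ['d']]`, i.e. $H_1 = 1$, $H_2 = 2$, $H_3 = 1$, and each edge node receives only program `a`.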

Embodiment 3

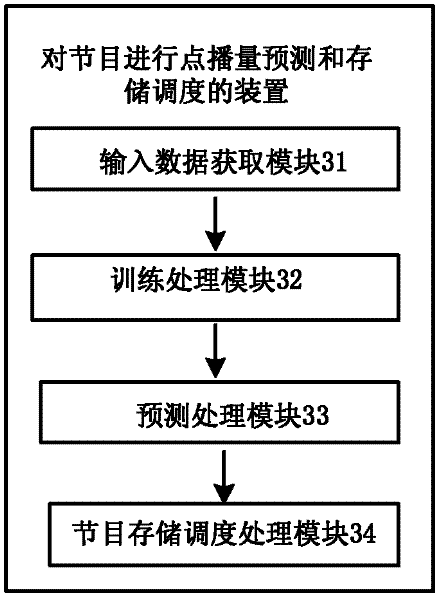

[0085] This embodiment provides a device for predicting the on-demand volume of programs and for storing and scheduling the programs. Its specific structure is shown in Figure 3 and includes:

[0086] The input data acquisition module 31 is used to obtain the input data of the neural network from the on-demand volume of each program over a set number of days preceding a specified day;

[0087] The training processing module 32 is used to train the pre-established neural network using the input data acquired by the input data acquisition module and the on-demand volume of each program on the specified day;

[0088] The prediction processing module 33 is used to feed the on-demand volume of each program over the set number of days preceding the current day into the trained neural network as input data, and to take the output computed by the trained network as the predicted on-demand volume of each program for the current day...
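The data flow between modules 31, 32 and 33 can be sketched as three functions. To keep the sketch short and self-contained, a single linear neuron trained by stochastic gradient descent stands in for the pre-established BP network; that substitution, the list-per-program history layout, the integer day indexing and the scaling by the maximum count are assumptions, not details from the source.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: program id -> daily on-demand counts, oldest day first.
History = Dict[str, List[float]]
Model = Tuple[List[float], float, float]  # (weights, bias, scale)


def acquire_inputs(history: History, day: int, k: int) -> Dict[str, List[float]]:
    """Module 31: each program's counts over the k days before index `day`."""
    if day < k:
        raise ValueError("need at least k days of history before `day`")
    return {p: counts[day - k:day] for p, counts in history.items()}


def train(history: History, day: int, k: int,
          lr: float = 0.1, epochs: int = 2000) -> Model:
    """Module 32: learn to map the k-day window before `day` to the count on `day`.

    Stand-in model: one linear neuron y = w.x + b on counts divided by `scale`.
    """
    inputs = acquire_inputs(history, day, k)
    scale = max(c for counts in history.values() for c in counts) or 1.0
    samples = [([c / scale for c in inputs[p]], history[p][day] / scale)
               for p in history]
    w, b = [0.0] * k, 0.0
    for _ in range(epochs):
        for x, target in samples:
            err = sum(wi * xi for wi, xi in zip(w, x)) + b - target
            # Gradient of 0.5 * err**2 with respect to w and b.
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b, scale


def predict(model: Model, history: History, today: int, k: int) -> Dict[str, float]:
    """Module 33: feed the k days before `today` into the trained model."""
    w, b, scale = model
    inputs = acquire_inputs(history, today, k)
    return {p: scale * (sum(wi * c / scale for wi, c in zip(w, x)) + b)
            for p, x in inputs.items()}


if __name__ == "__main__":
    history = {"p1": [10.0] * 6, "p2": [20.0] * 6}
    model = train(history, day=5, k=3)       # day index 5 is the specified day
    print(predict(model, history, today=6, k=3))  # index 6 is the unobserved current day
```

In the example each program's count is constant, so the predictions for the current day come out close to 10 and 20. Swapping in a hidden-layer network would change only `train` and `predict`; the windowing in `acquire_inputs` is shared by modules 32 and 33.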

© 2025 PatSnap. All rights reserved.