High-speed cache data prefetching method based on incremental closed sequence mining

A cache data prefetching technology applied in electrical digital data processing, special data processing applications, memory systems, and related fields. It addresses problems such as the inability to mine access sequences that have a small change range and high frequency, and it achieves an improved cache hit rate.

Embodiment Construction

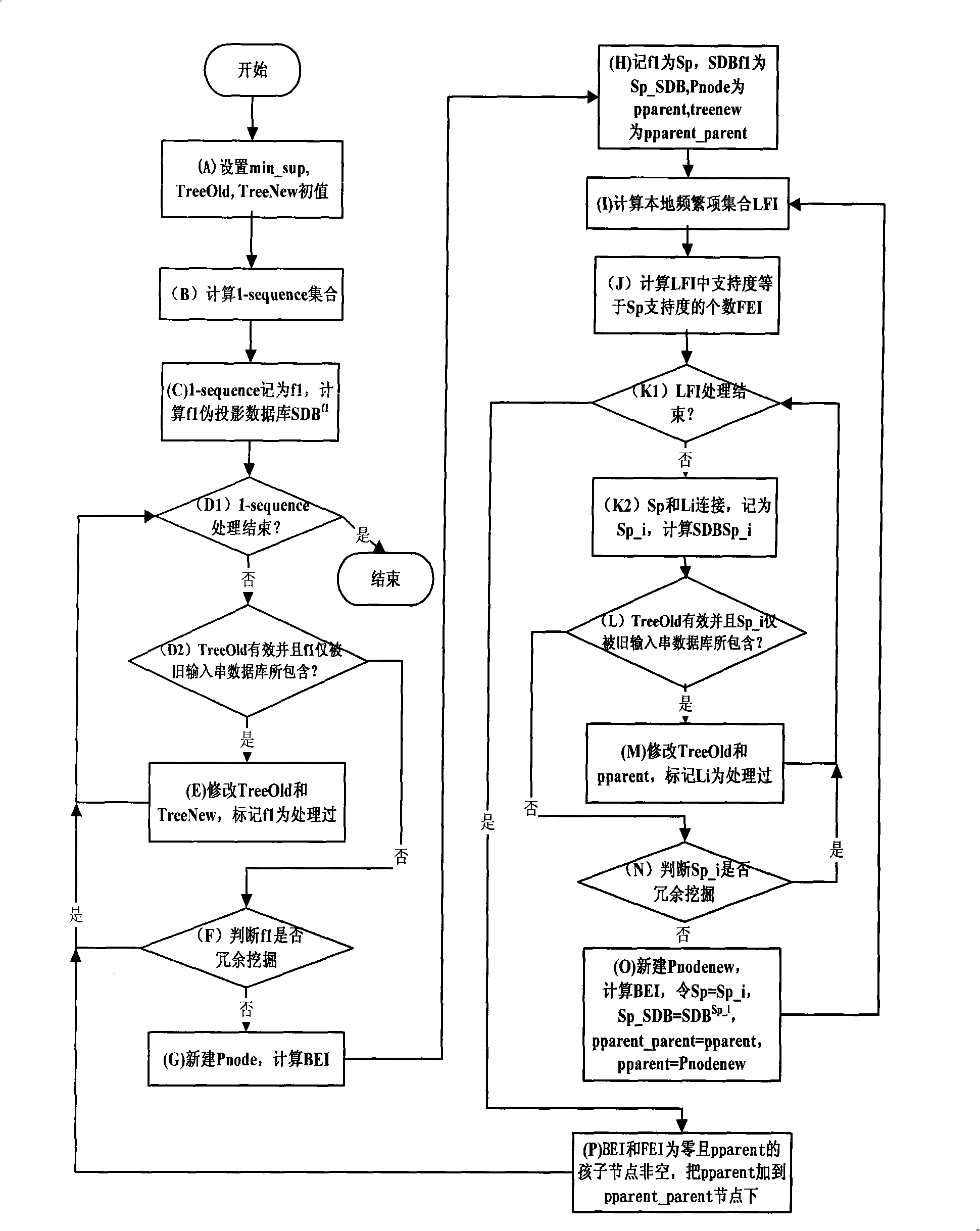

[0039] The processing flow of the present invention is shown in Figure 2 and is applied to the data prefetch step of the cache data prefetch module in Figure 1. First, the sequence of data accesses that the CPU requests from memory is collected, converted into sequences, and entered into a sequence database. Next, an incremental closed sequence mining algorithm mines the frequent closed sequences, from which cache data prefetch rules are extracted. Finally, these rules guide cache data prefetching, which improves the cache hit ratio. The processing flow is described below with reference to Figure 2:
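The collect, mine, extract-rules, prefetch flow can be illustrated with a minimal Python sketch. It does not implement the incremental closed sequence mining algorithm of the invention: as a labeled simplification, it counts plain contiguous runs of items and keeps those that meet a support threshold. The function names `count_patterns`, `extract_rules`, and `predict_next`, and the parameters `max_len` and `min_support`, are hypothetical and chosen only for illustration.

```python
from collections import Counter


def count_patterns(trace, max_len):
    """Count every contiguous run of 2..max_len items in the trace.

    Simplified stand-in for sequence mining (an assumption, not the
    patent's incremental closed sequence mining algorithm).
    """
    counts = Counter()
    for n in range(2, max_len + 1):
        for i in range(len(trace) - n + 1):
            counts[tuple(trace[i:i + n])] += 1
    return counts


def extract_rules(counts, min_support):
    """Map each frequent prefix to the item that most often follows it."""
    best = {}
    for pattern, support in counts.items():
        if support < min_support:
            continue  # pattern is not frequent enough to become a rule
        prefix, nxt = pattern[:-1], pattern[-1]
        if prefix not in best or support > best[prefix][1]:
            best[prefix] = (nxt, support)
    return {prefix: nxt for prefix, (nxt, _) in best.items()}


def predict_next(rules, recent, max_len):
    """Try the longest matching prefix first; return None if no rule applies."""
    for n in range(min(max_len - 1, len(recent)), 0, -1):
        nxt = rules.get(tuple(recent[-n:]))
        if nxt is not None:
            return nxt
    return None


# Access trace taken from the acquisition example in the description.
trace = list("CAABCABCBDCABCABCEABBCA")
counts = count_patterns(trace, max_len=3)
rules = extract_rules(counts, min_support=3)

# After blocks A then B are accessed, the rule set suggests prefetching C.
print(predict_next(rules, ["A", "B"], max_len=3))
```

In this sketch a cache would call `predict_next` after each access and prefetch the returned block if it is not already resident; when no rule matches, nothing is prefetched.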

[0040] 1. Acquisition: Record in real time the sequence of file system logical block numbers requested by the CPU (in FAT32, for example, a logical block is a cluster composed of sectors). Each logical block number is one item in the sequence. For example, the memory access sequence collected from the CPU in real time might be {CAABCABCBDCABCABCEABBCA}.
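As a rough illustration of the acquisition step, the following Python sketch appends each requested logical block number to an in-memory list, one item per access. The class name `AccessRecorder` and its `record` method are hypothetical. The single letters stand in for logical block numbers, as in the example sequence above.

```python
class AccessRecorder:
    """Hypothetical recorder: keeps requested block numbers in arrival order."""

    def __init__(self):
        self.sequence = []

    def record(self, block_number):
        # Each logical block number becomes one item of the access sequence.
        self.sequence.append(block_number)


recorder = AccessRecorder()
for block in "CAABCABCBDCABCABCEABBCA":
    recorder.record(block)

# Number of recorded accesses and the distinct blocks seen.
print(len(recorder.sequence), sorted(set(recorder.sequence)))
```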

[0041] 2. Prepr...
