Target edge extraction method, image segmentation method and system

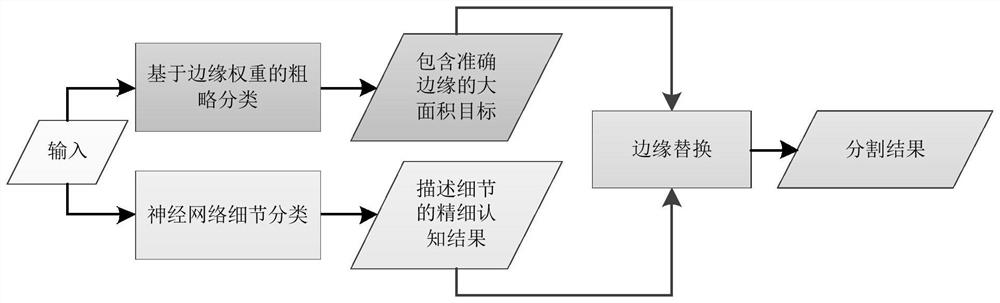

An edge extraction and image segmentation technique in the field of image processing. It addresses problems such as difficulty in determining the recognition granularity, failure to preserve accurate edges of large-area targets, and loss of small-area details.

Examples

Embodiment 1

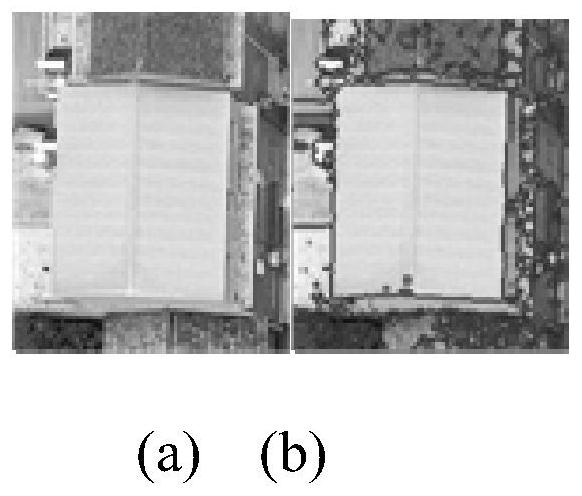

[0042] This embodiment applies the target edge extraction method of the present invention to the original image shown in Figure 3(a) and obtains the result shown in Figure 3(b). In the target edge extraction method of this embodiment, n = 7. Step 1.1 builds the image pyramid using bilinear interpolation resampling. Step 1.2 uses a Gaussian filter to extract edges from the image pyramid. Step 1.3 uses nearest-neighbor interpolation resampling to adjust each layer of the edge pyramid.
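The three steps named above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: it assumes that n is the number of pyramid layers, that each layer halves the previous one, that Step 1.3 resizes every edge layer back to the original size, and that the edge response is the absolute difference between a layer and its 3x3 Gaussian-smoothed copy. None of these details is given in the text, and all function names are hypothetical.

```python
def resize_bilinear(img, out_h, out_w):
    """Resample a 2-D list-of-lists image with bilinear interpolation."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        # Map the output pixel centre back into input coordinates, then clamp.
        y = min(max((i + 0.5) * in_h / out_h - 0.5, 0.0), in_h - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for j in range(out_w):
            x = min(max((j + 0.5) * in_w / out_w - 0.5, 0.0), in_w - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def resize_nearest(img, out_h, out_w):
    """Resample a 2-D image with nearest-neighbor interpolation."""
    in_h, in_w = len(img), len(img[0])
    return [
        [
            img[min(int((i + 0.5) * in_h / out_h), in_h - 1)]
               [min(int((j + 0.5) * in_w / out_w), in_w - 1)]
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # weights sum to 16


def gaussian_blur(img):
    """Smooth with a 3x3 Gaussian kernel, replicating border pixels."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    acc += GAUSS_3X3[di + 1][dj + 1] * img[ii][jj]
            out[i][j] = acc / 16.0
    return out


def edge_response(img):
    """Assumed edge operator: |image - Gaussian-smoothed image|."""
    blurred = gaussian_blur(img)
    return [[abs(a - b) for a, b in zip(r, rb)] for r, rb in zip(img, blurred)]


def edge_pyramid(img, n=7):
    """Return n edge maps, each resized to the size of the input image."""
    h, w = len(img), len(img[0])
    layer = img
    layers = []
    for k in range(n):
        if k > 0:
            # Step 1.1: next pyramid layer by bilinear resampling (assumed 2x).
            layer = resize_bilinear(
                layer, max(len(layer) // 2, 1), max(len(layer[0]) // 2, 1)
            )
        edges = edge_response(layer)                 # Step 1.2
        layers.append(resize_nearest(edges, h, w))   # Step 1.3
    return layers
```

Calling `edge_pyramid(image, n=7)` on a grayscale image stored as a list of rows yields seven edge maps of identical size; how the patent combines these layers is not described in this excerpt, so the sketch stops there.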

Embodiment 2

[0044] This embodiment applies the image segmentation method of the present invention to segment the original image dataset of the example shown in Figures 3 and 4;

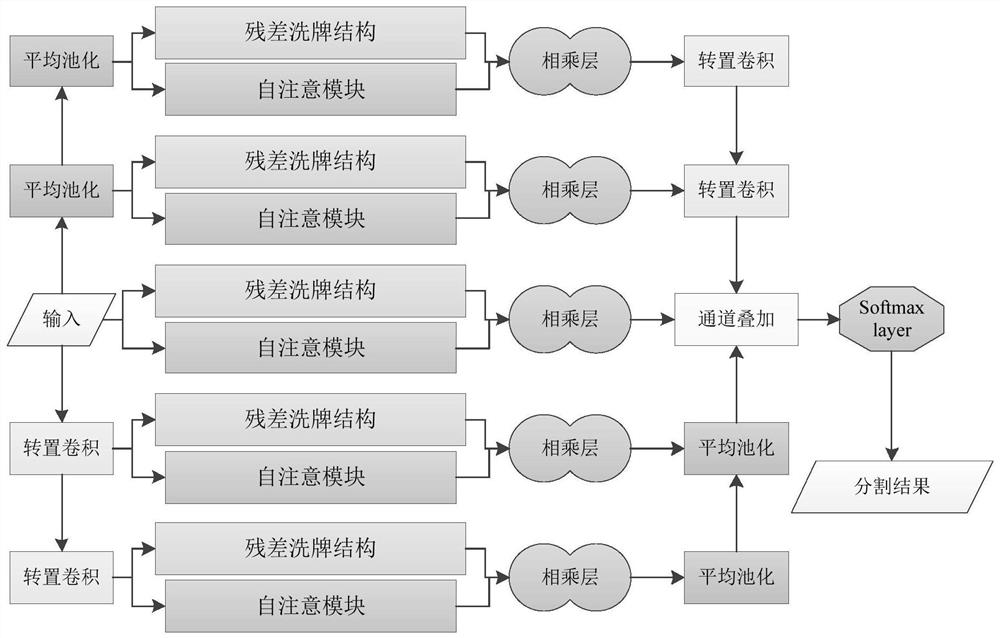

[0045] In the target edge extraction method of this embodiment, n = 7; Step 1.1 uses bilinear interpolation resampling to build an image pyramid from the original image; Step 1.2 uses a Laplacian of Gaussian (Gauss-Laplace) filter; and Step 1.3 adjusts each layer of the edge pyramid;
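For readers unfamiliar with the filter named in Step 1.2, the sketch below builds a discrete Laplacian of Gaussian kernel from the standard formula LoG(x, y) = -(1 / (πσ⁴)) · (1 - r² / (2σ²)) · exp(-r² / (2σ²)), with r² = x² + y², and applies it to a small image. The kernel size, the value of σ, the zero-sum correction, and the border handling are illustrative assumptions; the excerpt does not state the parameters actually used, and the function names are hypothetical.

```python
import math


def log_kernel(size=5, sigma=1.0):
    """Build a size x size Laplacian of Gaussian kernel (size must be odd)."""
    half = size // 2
    s2 = sigma * sigma
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            r2 = x * x + y * y
            value = (-(1.0 / (math.pi * s2 * s2))
                     * (1.0 - r2 / (2.0 * s2))
                     * math.exp(-r2 / (2.0 * s2)))
            row.append(value)
        kernel.append(row)
    # Subtract the mean so flat regions give a zero response (assumed fix
    # for the truncation of the continuous kernel to a finite window).
    mean = sum(sum(row) for row in kernel) / (size * size)
    return [[v - mean for v in row] for row in kernel]


def filter2d(img, kernel):
    """Correlate img with kernel, replicating border pixels.

    The kernel is symmetric, so correlation equals convolution here.
    """
    h, w = len(img), len(img[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-half, half + 1):
                for dj in range(-half, half + 1):
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    acc += kernel[di + half][dj + half] * img[ii][jj]
            out[i][j] = acc
    return out
```

On a vertical step edge the response is positive on the dark side and negative on the bright side, so edges show up as zero crossings of `filter2d(image, log_kernel())`.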

[0046] The initial network of the image segmentation neural network of this embodiment is an improved reshuffle neural network. The training dataset was collected by the inventors themselves: Figures 4(a) and 5(a) show original images from the dataset, and Figures 4(b) and 5(b) show the ground truth of the corresponding original images, obtained by manual labeling.

Embodiment 3

[0048] This embodiment differs from Embodiment 1 in that the initial network of the image segmentation network is Mask R-CNN; the network is trained with exactly the same samples as the network of that embodiment.

