Underwater vision SLAM (Simultaneous Localization and Mapping) method based on camera motion constraint and distortion correction

A camera motion and distortion correction technology, applied in computer components, image analysis, image enhancement, etc. It addresses problems in feature point processing such as feature point mismatch and feature matching errors. Its effects are improved quality, prevention of feature point mismatch, and elimination of cumulative error in underwater operation.


Embodiment Construction

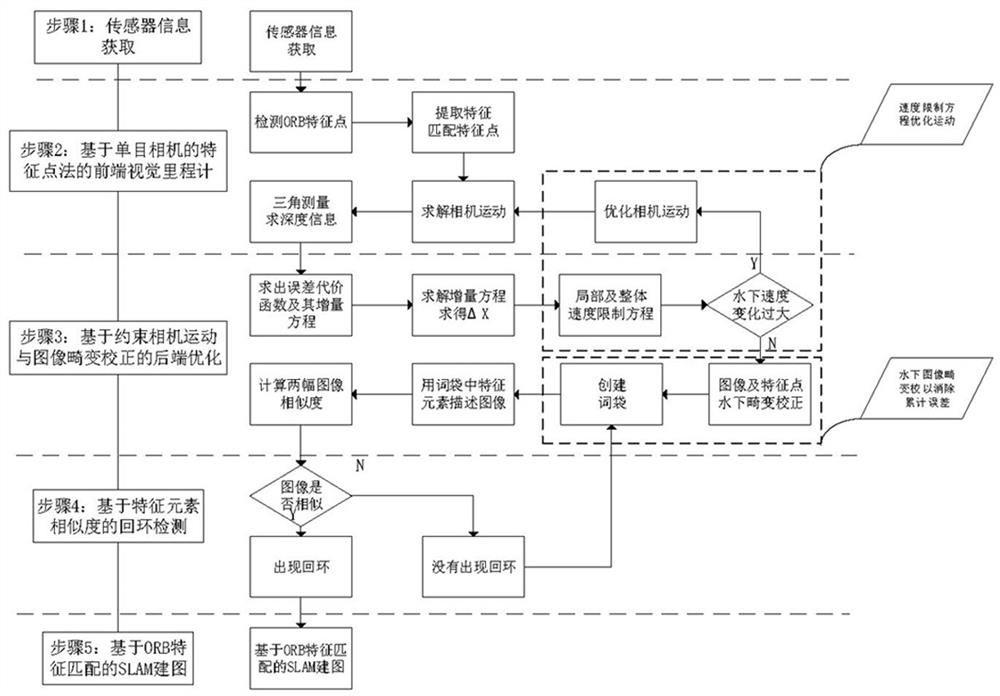

[0039] As shown in Figure 1, an underwater vision SLAM method based on constrained camera motion and distortion correction includes the following steps:

[0040] Step 1) Sensor information acquisition;

[0041] A camera captures visible light (or another part of the electromagnetic spectrum) as a picture and represents it as a digital image, that is, a two-dimensional matrix of finite numerical values called pixels. In SLAM, reading sensor information mainly means reading and preprocessing the camera images.
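To make the "image as a two-dimensional matrix of pixels" idea concrete, here is a minimal sketch of camera image preprocessing in plain Python. The source does not say which preprocessing steps are used, so the two shown here are assumptions: grayscale conversion with the ITU-R BT.601 luma weights (common before feature extraction) and a simple min-max contrast stretch. The function names `to_grayscale` and `contrast_stretch` are hypothetical.

```python
from typing import List, Tuple

# A digital image is a 2D matrix of pixels: img[row][col].
# Colour pixels are (R, G, B) tuples of 8-bit ints (0-255).
RGBImage = List[List[Tuple[int, int, int]]]
GrayImage = List[List[int]]


def to_grayscale(img: RGBImage) -> GrayImage:
    """Convert an RGB image to 8-bit grayscale with ITU-R BT.601 luma weights."""
    return [
        [min(255, max(0, round(0.299 * r + 0.587 * g + 0.114 * b))) for r, g, b in row]
        for row in img
    ]


def contrast_stretch(gray: GrayImage) -> GrayImage:
    """Linearly map the darkest pixel to 0 and the brightest to 255.

    Assumed preprocessing step (not stated in the source) for low-contrast
    images. Expects a non-empty image; a flat image (all pixels equal) is
    returned as all zeros.
    """
    lo = min(min(row) for row in gray)
    hi = max(max(row) for row in gray)
    if hi == lo:
        return [[0 for _ in row] for row in gray]
    scale = 255.0 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in gray]


if __name__ == "__main__":
    # 2x2 test image: red, green / blue, white
    img = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
    gray = to_grayscale(img)
    print(gray)
    print(contrast_stretch(gray))
```

Contrast stretching is only one illustrative choice for low-contrast underwater frames; a real pipeline would typically use an image library and may apply other corrections at this stage.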

[0042] Step 2) Feature-point-based front-end visual odometry for a monocular camera;

[0043] Feature-point-based front-end visual odometry is the mainstream approach to visual odometry. It runs stably, is relatively insensitive to illumination changes and dynamic objects, and is currently a fairly mature solution. Its work is divided into two main steps:

[0044] First, the feature points are extracted from the image, and the feature ...
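The source text is truncated after feature extraction, so the exact descriptor and matching scheme are not stated. As an illustration only, the sketch below assumes binary descriptors (ORB/BRIEF-style bit strings stored as Python ints) compared by Hamming distance, and applies a mutual-nearest-neighbour ("cross-check") filter, one standard way to reduce the feature point mismatches the summary mentions. The function names and the `max_dist` threshold are hypothetical, not the patent's method.

```python
from typing import List, Optional, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def nearest(query: int, train: List[int]) -> Optional[Tuple[int, int]]:
    """Return (index, distance) of the closest descriptor in `train`, or None if empty.

    Ties resolve to the lowest index.
    """
    if not train:
        return None
    dists = [hamming(query, d) for d in train]
    j = min(range(len(dists)), key=dists.__getitem__)
    return j, dists[j]


def match_cross_check(
    desc1: List[int], desc2: List[int], max_dist: int = 64
) -> List[Tuple[int, int, int]]:
    """Brute-force matching that keeps only mutual nearest neighbours.

    Returns (index_in_desc1, index_in_desc2, hamming_distance) triples.
    `max_dist` is a hypothetical rejection threshold.
    """
    matches = []
    for i, d1 in enumerate(desc1):
        fwd = nearest(d1, desc2)
        if fwd is None:
            break  # desc2 is empty: nothing can match
        j, dist = fwd
        if dist > max_dist:
            continue
        back = nearest(desc2[j], desc1)
        # Keep the pair only if desc2[j] also picks desc1[i] as its best match.
        if back is not None and back[0] == i:
            matches.append((i, j, dist))
    return matches


if __name__ == "__main__":
    frame1 = [0b11110000, 0b00001111, 0b10101010]
    frame2 = [0b00001110, 0b11110001]
    print(match_cross_check(frame1, frame2))
```

In this example the third descriptor of `frame1` is closest to `frame2[0]`, but `frame2[0]` prefers `frame1[1]`, so the cross-check discards that ambiguous pair instead of recording a likely mismatch.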

