Hardware accelerator of convolutional neural network based on parallel multiplexing and parallel multiplexing method

A convolutional neural network hardware accelerator technology applied in the field of artificial intelligence hardware design. It addresses problems such as high computing parallelism, low hardware complexity, and large waste of resources, with the aims of improving network multiplexing, improving flexibility, and shortening computation time.


Embodiment Construction

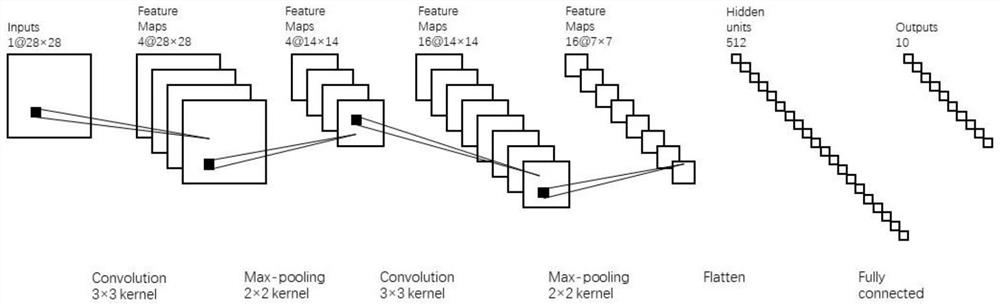

[0091] In this embodiment, the convolutional neural network comprises K convolutional layers, K+1 activation layers, K pooling layers, and 2 fully connected layers, and it is trained on the MNIST handwritten digit dataset to obtain the weight parameters.
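As a sanity check on the layer counts, the sketch below builds one plausible layer ordering for a K-stage network and tallies it. The ordering is an assumption, not taken from the patent: each stage is taken to be convolution, activation, pooling, and the extra (K+1)-th activation is placed between the two fully connected layers. The helper names `layer_sequence` and `layer_counts` are hypothetical.

```python
from collections import Counter


def layer_sequence(k: int) -> list[str]:
    """Return a hypothetical layer ordering for a K-stage CNN.

    Assumption (not from the source): each stage is conv -> act -> pool,
    and the (K+1)-th activation sits between the two fully connected layers.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    layers: list[str] = []
    for _ in range(k):
        layers += ["conv", "act", "pool"]
    layers += ["fc", "act", "fc"]
    return layers


def layer_counts(k: int) -> dict[str, int]:
    """Count how many layers of each type the assumed ordering contains."""
    return dict(Counter(layer_sequence(k)))


if __name__ == "__main__":
    print(layer_sequence(2))
    print(layer_counts(2))
```

With K = 2 this gives 2 convolutional, 3 activation, 2 pooling, and 2 fully connected layers, and 6 layers once the activations are set aside, which matches the counts given for the handwritten digit recognition network.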

[0092] The convolutional neural network used in this embodiment is a handwritten digit recognition network, as shown in Figure 1. Its structure comprises 2 convolutional layers, 3 activation layers, 2 pooling layers, and 2 fully connected layers, and it uses 3 × 3 convolution kernels. Setting aside the activation layers (for example, the one immediately following each convolutional layer), which do not change the feature map size, the remaining six layers are arranged as follows: the first layer is a convolutional layer with an input feature size of 28×28×1, a weight matrix size of 3×3×1×4, a bias vector length of 4, and an output feature size of ...
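The first-layer dimensions can be checked with a short calculation. The source truncates the output feature size, so this sketch does not assume one: it reports the output size, parameter count, and multiply-accumulate (MAC) count for both an unpadded, stride-1 convolution and a 1-pixel zero-padded one. It also includes a plain direct convolution that stores weights in height × width × input-channel × output-channel order, on the assumption that this is what the 3×3×1×4 notation means. All function names are hypothetical, and the loop nest is a generic reference convolution, not the accelerator's parallel multiplexing dataflow.

```python
def conv_output_size(n: int, k: int, pad: int = 0, stride: int = 1) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (n + 2 * pad - k) // stride + 1


def conv2d_hwio(x, w, b):
    """Direct stride-1, unpadded convolution (generic reference, hypothetical).

    x: H x W x Cin nested lists
    w: KH x KW x Cin x Cout nested lists (assumed layout for "3x3x1x4")
    b: bias list of length Cout
    Returns an OH x OW x Cout nested list.
    """
    h, wd, cin = len(x), len(x[0]), len(x[0][0])
    kh, kw, cout = len(w), len(w[0]), len(w[0][0][0])
    oh, ow = conv_output_size(h, kh), conv_output_size(wd, kw)
    out = [[[0.0] * cout for _ in range(ow)] for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            # Each output channel is computed independently of the others.
            for co in range(cout):
                acc = b[co]
                for di in range(kh):
                    for dj in range(kw):
                        for ci in range(cin):
                            acc += x[i + di][j + dj][ci] * w[di][dj][ci][co]
                out[i][j][co] = acc
    return out


def layer1_cost(pad: int) -> tuple[int, int, int]:
    """Output side length, parameter count, and MACs for the first layer.

    Input 28x28x1, weights 3x3x1x4, bias length 4 (from the text);
    stride 1 and the padding value are assumptions.
    """
    oh = conv_output_size(28, 3, pad)
    params = 3 * 3 * 1 * 4 + 4        # weights + biases
    macs = oh * oh * 4 * (3 * 3 * 1)  # one 3x3x1 dot product per output value
    return oh, params, macs


if __name__ == "__main__":
    print("no padding:", layer1_cost(0))
    print("1-pixel padding:", layer1_cost(1))
    x = [[[1.0] for _ in range(28)] for _ in range(28)]   # 28x28x1 of ones
    w = [[[[1.0] * 4] for _ in range(3)] for _ in range(3)]  # 3x3x1x4 of ones
    y = conv2d_hwio(x, w, [0.0] * 4)
    print(len(y), len(y[0]), len(y[0][0]), y[0][0][0])
```

Without padding the first layer has 40 parameters, produces a 26×26×4 output, and needs 24,336 MACs; with 1-pixel padding the output stays 28×28×4 and the MAC count rises to 28,224. The output-channel loop (`co`) has no dependencies between iterations, which is one kind of parallelism a hardware accelerator can exploit; how this patent actually schedules the computation is not described in this excerpt.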
