Multimodal medical image fusion method based on global information fusion

A medical image fusion technique based on global information, applied in the field of deep-learning-based medical image fusion. It addresses the problems of limited fusion performance, time-consuming dictionary learning, and insufficient use of multimodal image information, with the effect of enhancing fusion quality and reducing the mosaic phenomenon.

Embodiment Construction

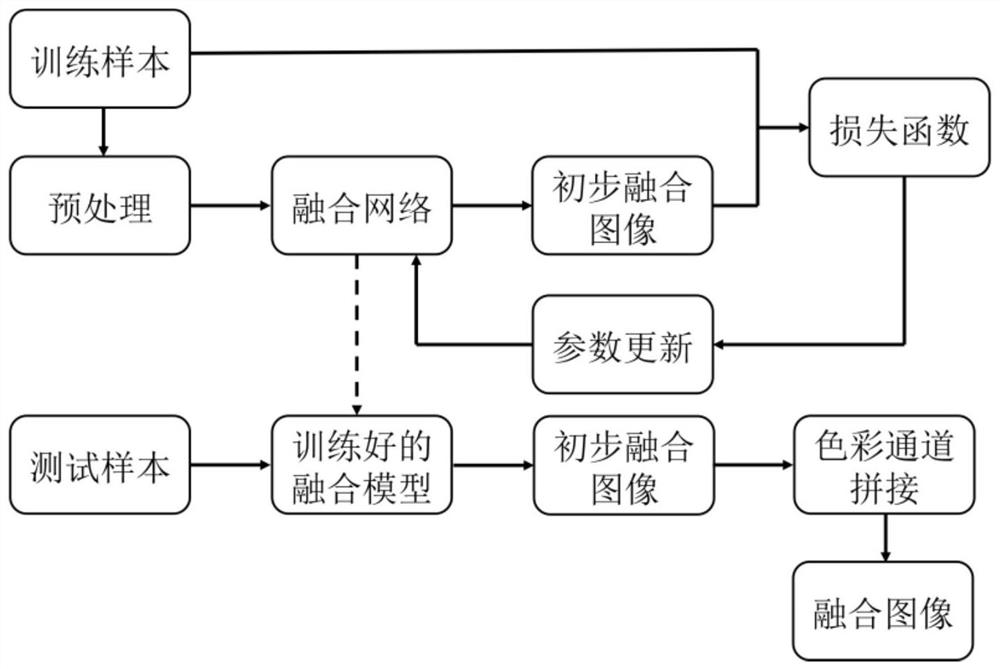

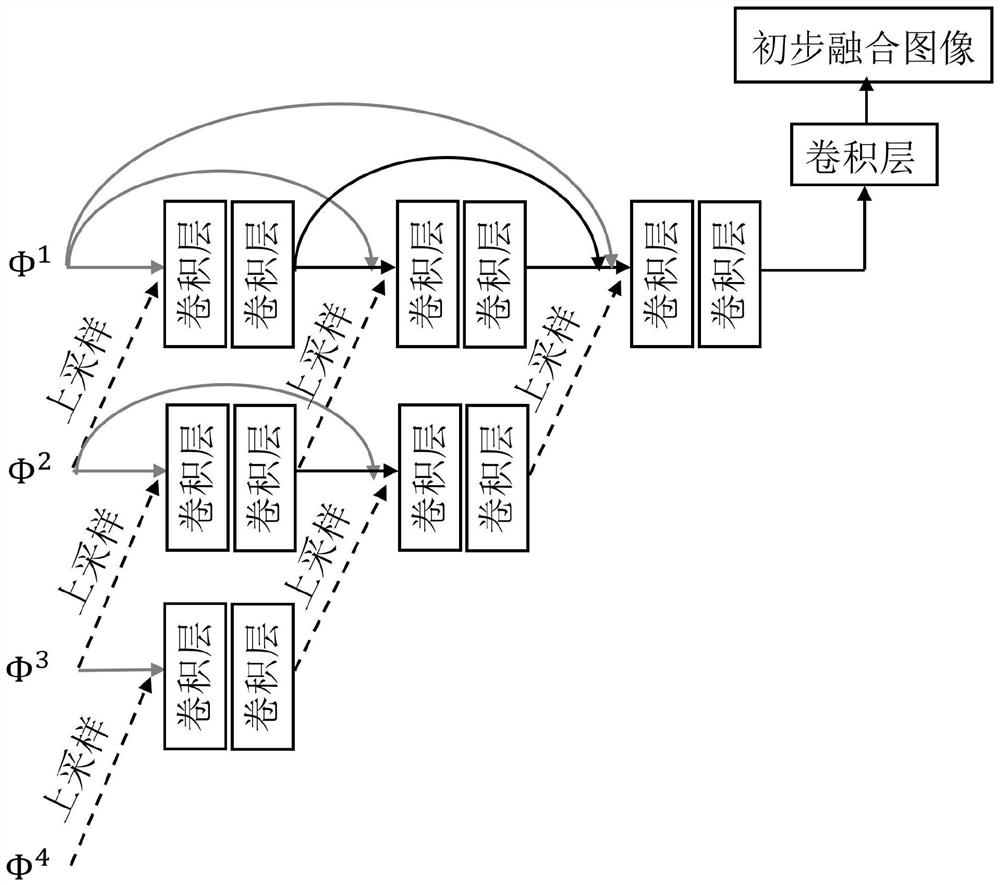

[0054] In this embodiment, a multimodal image fusion method based on global information fusion, as shown in Figure 1, includes the following steps:

[0055] Step 1. Obtain M original medical images of different modalities and preprocess them by color space conversion and image cropping to obtain the preprocessed image patch sets of all modalities {S_1, S_2, ..., S_M}, where S_m denotes the set of image patches for the m-th modality, m ∈ {1, 2, ..., M}:

[0056] Step 1.1. Obtain the original medical images of the modalities required for the experiment from the Harvard Medical Image Dataset website (http://www.med.harvard.edu/AANLIB/home.html). This embodiment collects medical images of M=2 modalities from this public dataset, including 279 pairs of MR-T1 and PET images and 318 pairs of MR-T2 and SPECT images, where MR-T1 and MR-T2 are grayscale anatomical images with 1 channel, PET and SPECT are functional images in RGB color space, and the ...
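The preprocessing named in Step 1 (color space conversion followed by cropping into patches) can be illustrated with a minimal sketch. The text above is truncated before it states the target color space or the patch geometry, so everything specific here is an assumption: the RGB-to-YUV conversion with BT.601 coefficients, the use of the Y channel only, the 64-pixel patch size, the 32-pixel stride, and the function names `rgb_to_yuv`, `crop_patches`, and `preprocess_pair` are hypothetical and are not taken from the patent.

```python
import numpy as np


def rgb_to_yuv(rgb):
    """Convert an H x W x 3 RGB array in [0, 1] to YUV.

    Assumption: BT.601 coefficients; the source does not name the color space.
    """
    m = np.array([[0.299, 0.587, 0.114],
                  [-0.14713, -0.28886, 0.436],
                  [0.615, -0.51499, -0.10001]])
    return rgb @ m.T


def crop_patches(img, size, stride):
    """Slide a size x size window over a 2-D image and stack the patches."""
    h, w = img.shape
    patches = [img[r:r + size, c:c + size]
               for r in range(0, h - size + 1, stride)
               for c in range(0, w - size + 1, stride)]
    return np.stack(patches)


def preprocess_pair(gray, rgb, size=64, stride=32):
    """Return (S_1, S_2): patch sets for the anatomical and functional image.

    Assumption: only the luminance (Y) channel of the functional image is
    cropped; patch size and stride are illustrative values.
    """
    y = rgb_to_yuv(rgb)[..., 0]
    return crop_patches(gray, size, stride), crop_patches(y, size, stride)


if __name__ == "__main__":
    # Synthetic stand-ins for one MR / PET pair (hypothetical 256 x 256 size).
    mr = np.zeros((256, 256))
    pet = np.ones((256, 256, 3))
    s1, s2 = preprocess_pair(mr, pet)
    print(s1.shape, s2.shape)
```

Under these assumptions, each modality yields one patch array S_m whose first axis indexes the patches, so corresponding entries of S_1 and S_2 cover the same image region.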
