Multimodal sentiment classification method based on an attention-guided bidirectional capsule network

A multimodal sentiment classification technology, applied in fields such as database clustering/classification, character and pattern recognition, and instruments, with the aim of enhancing bimodal homogeneity and improving learning efficiency.

Embodiment Construction

[0060] The method of the present invention will be described in detail below in conjunction with the accompanying drawings.

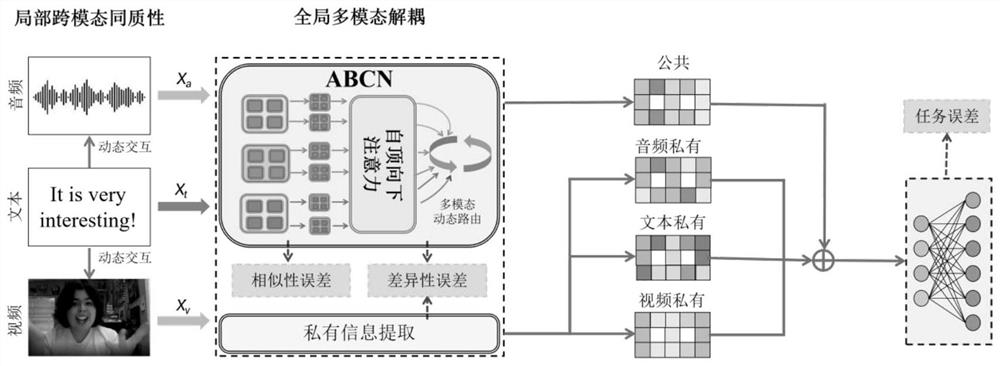

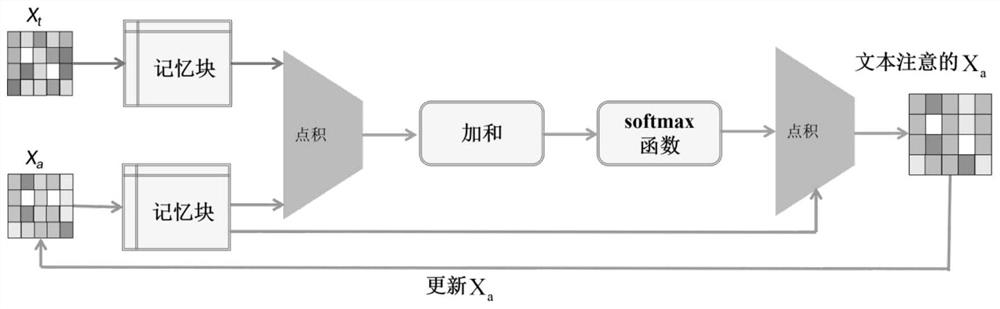

[0061] As shown in Figures 1 and 2, the multimodal sentiment classification method based on an attention-guided bidirectional capsule network proceeds as follows:

[0062] As shown in Figure 1, the attention-guided bidirectional capsule network adopted by this method consists of two important components: 1) a multimodal dynamic interaction enhancement module, which enhances cross-modal homogeneity at the feature level; 2) ABCN, which explores global multimodal common cues. The method includes the following steps:

[0063] Step 1. Acquire multimodal data

[0064] Multimodal data comprises several types of modal data, such as the audio modality, the video modality, and the text modality. The purpose of multimodal fusion is to capture the complementarity and consistency among the modalities under the same task. The two public sent...
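As an illustration of the data acquired in Step 1, a single sample could be held as one feature sequence per modality. The sketch below is only an assumption about layout: the class name `MultimodalSample`, the helper `feature_dims`, the feature widths, and the integer label are all hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import Dict, List

# One feature vector per time step (hypothetical representation).
Sequence = List[List[float]]


@dataclass
class MultimodalSample:
    """One utterance with features from three modalities (assumed layout)."""
    text: Sequence
    audio: Sequence
    video: Sequence
    label: int  # sentiment class index; the label scheme is an assumption

    def modalities(self) -> Dict[str, Sequence]:
        return {"text": self.text, "audio": self.audio, "video": self.video}


def feature_dims(sample: MultimodalSample) -> Dict[str, int]:
    """Return each modality's feature width, checking every sequence is rectangular."""
    dims = {}
    for name, seq in sample.modalities().items():
        if not seq:
            raise ValueError(f"{name} sequence is empty")
        width = len(seq[0])
        if any(len(step) != width for step in seq):
            raise ValueError(f"{name} has inconsistent feature widths")
        dims[name] = width
    return dims


# Toy values only; real features would come from modality-specific extractors.
sample = MultimodalSample(
    text=[[0.1, 0.2, 0.3], [0.0, 0.5, 0.1]],
    audio=[[0.7, 0.2], [0.4, 0.4], [0.1, 0.9]],
    video=[[0.3, 0.3, 0.3, 0.1]],
    label=1,
)
dims = feature_dims(sample)
```

Keeping the modalities as separate sequences, each with its own length and feature width, reflects that they are heterogeneous before any fusion step brings them together.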
