Gait Control Method of Humanoid Robot Based on Model-Dependent Reinforcement Learning

The method combines humanoid robotics with reinforcement learning and applies to humanoid robot gait control based on model-related reinforcement learning. It addresses three problems: reinforcement learning is inconvenient to apply, the humanoid robot system is complex, and a PID controller alone cannot fully meet the system's control needs.


Embodiment Construction

[0049] The present invention will be further described below in conjunction with specific embodiments.

[0050] The gait control method of a humanoid robot based on model-related reinforcement learning in this embodiment includes the following steps:

[0051] 1) Define a reinforcement learning framework for the stability control task of the humanoid robot before and after walking;

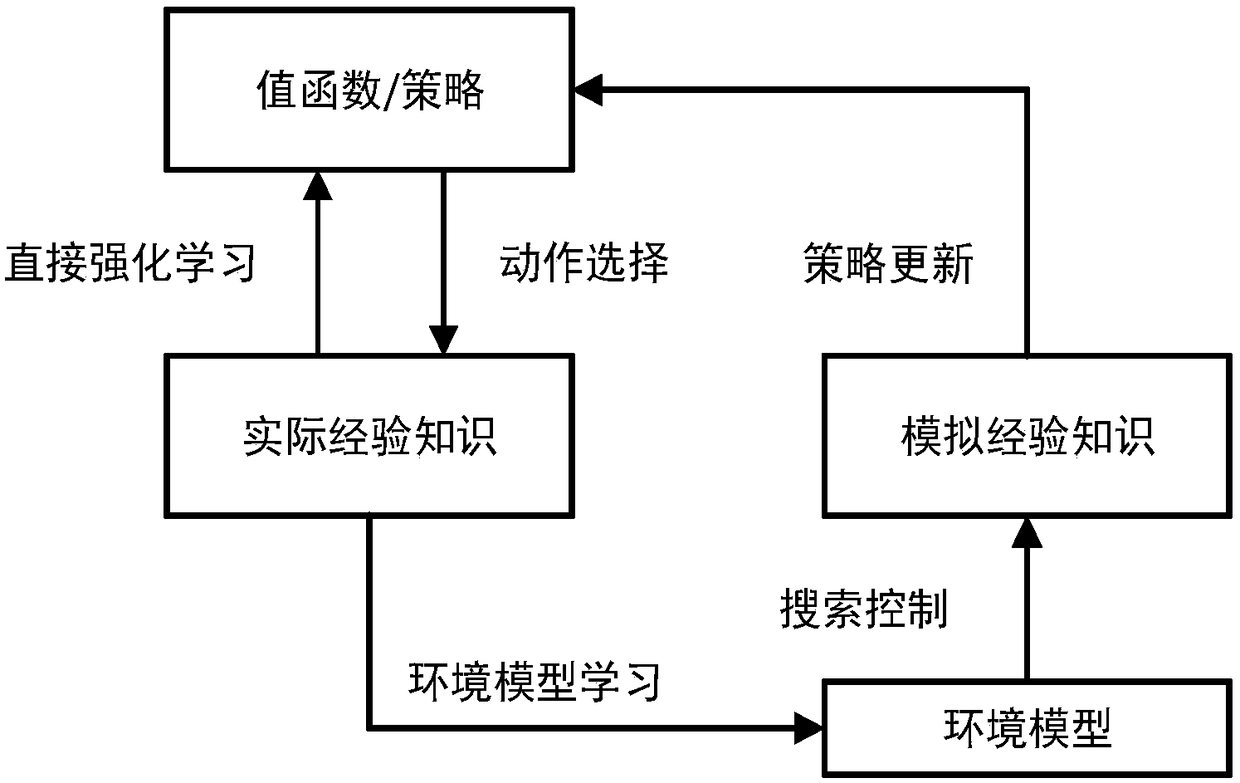

[0052] 2) Use a model-related reinforcement learning method based on sparse online Gaussian processes to control the gait of the humanoid robot;

[0053] 3) Use a PID controller to improve the action selection method of the reinforcement learning controller of the humanoid robot. Specifically, the PID controller is used to obtain the optimal initial point for the action selection operation of the reinforcement learning controller.
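
Step 3) can be illustrated with a minimal sketch, assuming a scalar action (for example, a single joint correction) and a placeholder action-value function `q`. The names `PID` and `select_action`, the gains, the action bounds, and the hill-climbing search are all illustrative assumptions and are not taken from the patent; the sketch only shows the idea of starting the action search from the PID output instead of from an arbitrary point.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PID:
    """Textbook discrete PID controller (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the derivative term.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def select_action(q: Callable[[float], float], a_init: float,
                  a_min: float, a_max: float,
                  step: float = 0.1, iters: int = 50) -> float:
    """Hill climbing on q(a), started from a_init (hypothetical search rule)."""
    # Clip the PID suggestion to the admissible action range.
    a = min(max(a_init, a_min), a_max)
    for _ in range(iters):
        candidates = [a, min(a + step, a_max), max(a - step, a_min)]
        best = max(candidates, key=q)
        if best == a:
            step *= 0.5  # no neighbour is better: refine the search step
        a = best
    return a


if __name__ == "__main__":
    pid = PID(kp=1.0, ki=0.0, kd=0.0)
    a0 = pid.step(error=0.25, dt=0.01)      # PID output seeds the search
    toy_q = lambda a: -(a - 0.3) ** 2       # placeholder value function
    print(select_action(toy_q, a0, a_min=-1.0, a_max=1.0))
```

In this toy setup the PID output already lies close to the maximizer of `toy_q`, so the local search needs only a few refinements. That is the motivation stated in step 3): a good initial point reduces the work of the action selection operation.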

[0054] The present invention uses reinforcement learning to control the stability of the humanoid robot before and after walking. First, a framework for reinforcement lea...

