Small-sample image classification method based on a memory mechanism and a graph neural network

This neural network and classification technology applies to small-sample (few-shot) image classification. It addresses the problem that learned conceptual knowledge is ignored during model reasoning and prediction, and it offers a simple and highly practical method.



Examples

Embodiment 1

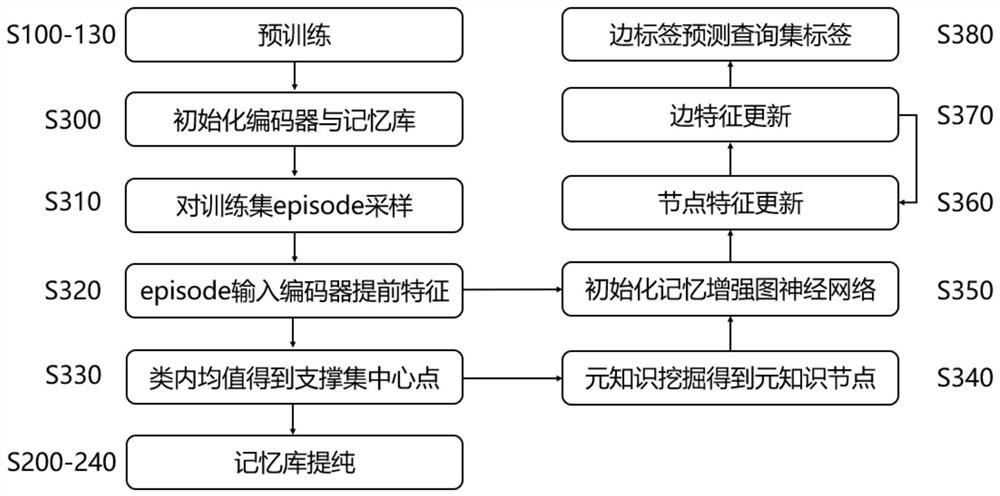

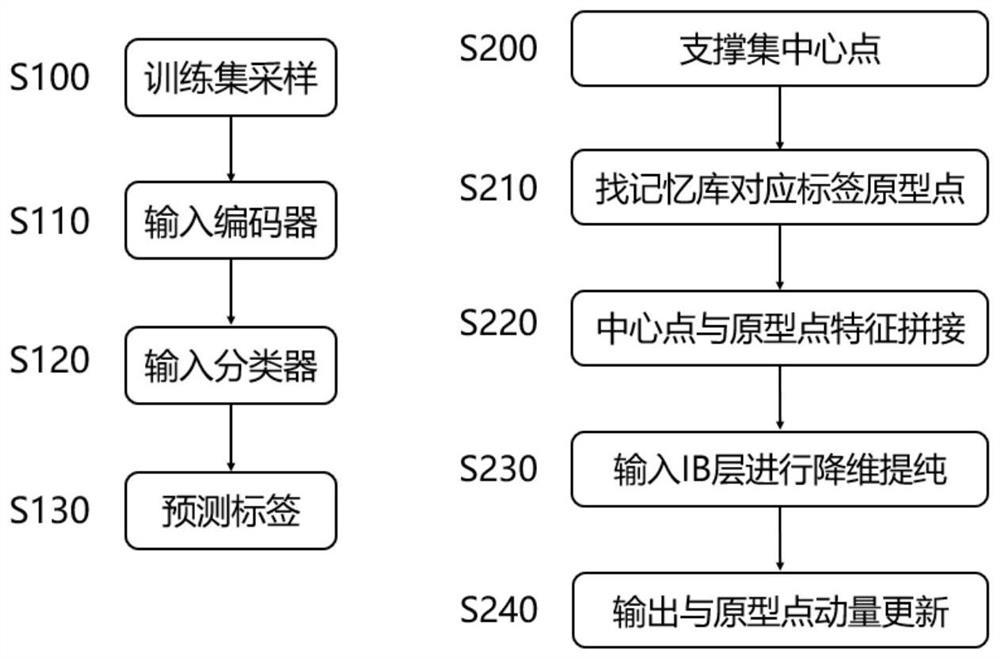

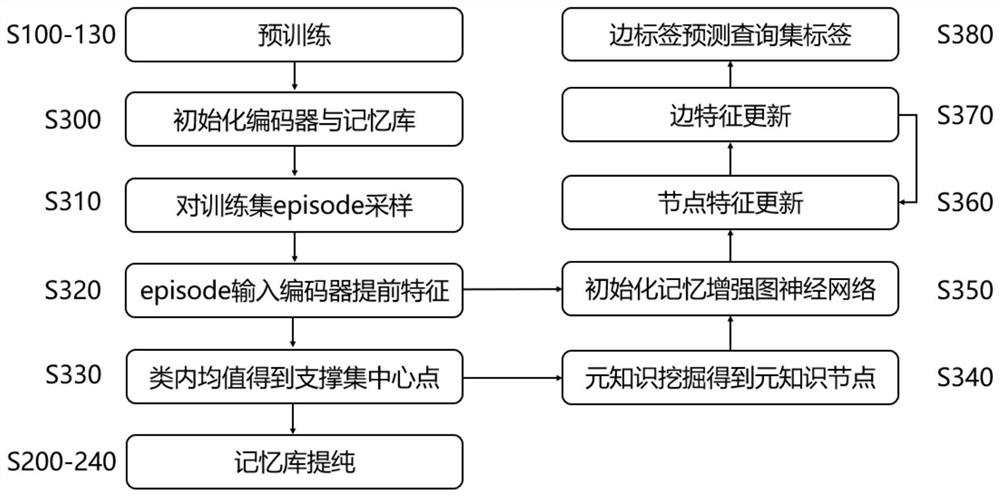

[0062] Referring to Figure 1, the present invention uses a graph neural network and a memory mechanism to help small-sample models reason and predict with the aid of learned conceptual knowledge. The specific steps are as follows:

[0063] S300: Obtain the trained encoder and classifier from the pre-training stage, and use them to initialize the feature extractor and the memory bank of the meta-training stage, respectively.
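The initialization in S300 can be sketched as follows. This is a minimal illustration, not the patented implementation: the container names (`PretrainedModel`, `MetaLearner`, `init_meta_learner`) are hypothetical, and it assumes that "using the classifier as the memory bank" means storing each row of the classifier's weight matrix as the memory slot (concept vector) of the corresponding pre-training class.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Vector = List[float]


@dataclass
class PretrainedModel:
    """Hypothetical container for the outputs of the pre-training stage."""
    encoder: Callable[[Vector], Vector]  # maps a raw input to a feature vector
    classifier_weights: List[Vector]     # one weight row per pre-training class


@dataclass
class MetaLearner:
    """Hypothetical state of the meta-training stage."""
    feature_extractor: Callable[[Vector], Vector]
    memory_bank: Dict[int, Vector] = field(default_factory=dict)


def init_meta_learner(pre: PretrainedModel) -> MetaLearner:
    # The pre-trained encoder is reused directly as the feature extractor.
    # Assumption: classifier weight row c becomes the memory slot of class c.
    # Rows are copied so later memory updates do not modify the pre-trained weights.
    memory = {c: list(w) for c, w in enumerate(pre.classifier_weights)}
    return MetaLearner(feature_extractor=pre.encoder, memory_bank=memory)
```

Copying the rows keeps the pre-trained classifier intact, so the memory bank can be refined during meta-training without side effects on the original weights.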

[0064] S310: Using an episodic sampling strategy, sample N classes from the training set and draw K samples from each class to form the support set; then draw 1 sample from each of the same N classes to form the query set.
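Step S310 is a standard N-way K-shot episode with one query per class. The sketch below assumes a hypothetical dataset format (a dict from class name to a list of samples), re-indexes labels to 0..N-1 within each episode, and assumes the query sample must differ from the support samples of its class; the source does not state these details.

```python
import random
from typing import Dict, List, Sequence, Tuple

Labeled = Tuple[object, int]


def sample_episode(dataset: Dict[str, Sequence[object]], n_way: int, k_shot: int,
                   rng: random.Random) -> Tuple[List[Labeled], List[Labeled]]:
    """Sample one N-way K-shot episode with exactly one query sample per class."""
    # Choose N distinct classes (sorted first so a seeded rng is reproducible).
    classes = rng.sample(sorted(dataset), n_way)
    support: List[Labeled] = []
    query: List[Labeled] = []
    for label, cls in enumerate(classes):
        # Draw K + 1 distinct samples: K for the support set, 1 for the query set.
        picks = rng.sample(list(dataset[cls]), k_shot + 1)
        support.extend((x, label) for x in picks[:k_shot])
        query.append((picks[k_shot], label))
    return support, query
```

For example, `sample_episode(data, n_way=5, k_shot=1, rng=random.Random(0))` would yield a 5-way 1-shot episode with 5 support and 5 query samples.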

[0065] S320: Input the support set and query set sampled in step S310 into the feature extractor to obtain the feature representation of each sample.

[0066] S330: Compute the intra-class mean of the support-set sample features to obtain the class center points of the N classes, a...
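Steps S320 and S330 together amount to extracting a feature for each support sample and averaging the features within each class. The sketch below assumes features are plain lists of floats and takes the feature extractor as a hypothetical callable `extract`; only the averaging part is stated in the source, and the truncated remainder of S330 is not reconstructed here.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]


def class_centers(support: Sequence[Tuple[object, int]],
                  extract: Callable[[object], Vector]) -> Dict[int, Vector]:
    """Return one center point (mean support feature) per class label."""
    sums: Dict[int, Vector] = {}
    counts: Dict[int, int] = defaultdict(int)
    for x, label in support:
        f = extract(x)  # S320: feature representation of one support sample
        if label not in sums:
            sums[label] = [0.0] * len(f)
        sums[label] = [s + v for s, v in zip(sums[label], f)]
        counts[label] += 1
    # S330: divide each per-class sum by its sample count (intra-class mean).
    return {c: [s / counts[c] for s in sums[c]] for c in sums}
```

With K samples per class, each center is simply the sum of that class's K feature vectors divided by K.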
