Intelligent compression storage method and system for neural network checkpoint data

The technology combines neural networks with intelligent compression and is applied in neural learning methods, biological neural network models, electrical components, and related fields. It addresses problems such as insufficient equipment lifespan, with the effects of efficient compression, reduced data writes, and improved storage capacity and lifespan.

Embodiment Construction

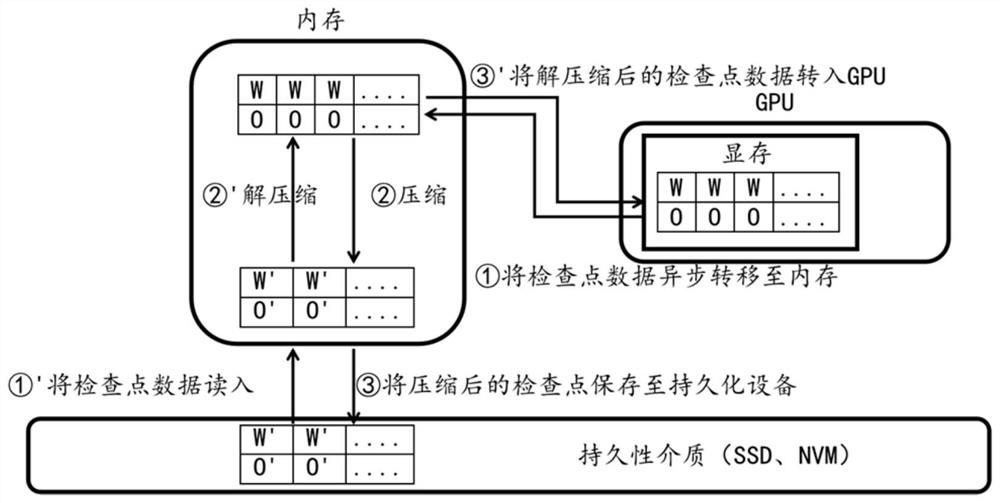

[0037] The present invention proposes an intelligent compression storage method for neural network checkpoint data, specifically:

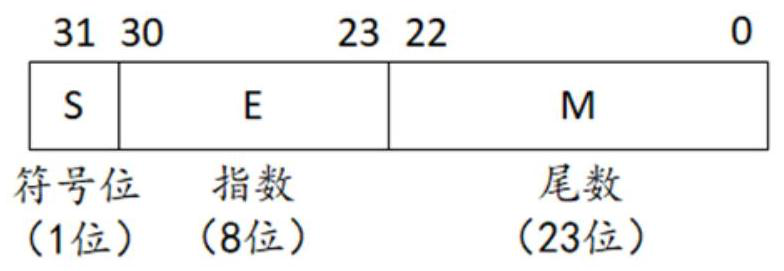

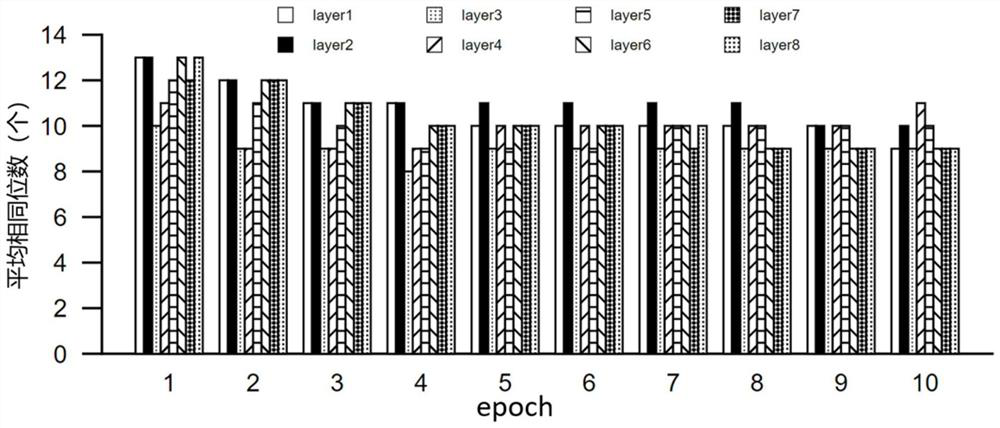

[0038] After each round of neural network training, the weight floating-point data is compressed with an incremental compression method and then stored; and/or part or all of the first n bits of the optimizer floating-point data is replaced with a mapped index value before storage. The index value has fewer than n bits.
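The two techniques in this paragraph can be illustrated with a minimal Python sketch. The source does not specify the incremental scheme or the table layout, so several choices here are assumptions: float32 data, XOR against the previous round followed by `zlib` as the incremental step, and a sorted table of distinct n-bit prefixes as the index mapping. All function names (`delta_compress`, `index_encode`, and so on) are hypothetical.

```python
import struct
import zlib


def float_bits(values):
    """Return the IEEE 754 float32 bit pattern of each value as an int."""
    return [struct.unpack("<I", struct.pack("<f", v))[0] for v in values]


def bits_to_floats(bits):
    """Inverse of float_bits: rebuild floats from 32-bit patterns."""
    return [struct.unpack("<f", struct.pack("<I", b))[0] for b in bits]


def delta_compress(prev_weights, curr_weights):
    """Assumed incremental scheme: XOR with the previous round, then deflate.

    Weights that changed little between rounds share their high bits, so the
    XOR result contains long runs of zeros that compress well.
    """
    xored = [p ^ c for p, c in zip(float_bits(prev_weights), float_bits(curr_weights))]
    return zlib.compress(struct.pack(f"<{len(xored)}I", *xored))


def delta_decompress(prev_weights, blob):
    """Recover the current weights from the previous round plus the delta."""
    raw = zlib.decompress(blob)
    xored = struct.unpack(f"<{len(raw) // 4}I", raw)
    return bits_to_floats([p ^ x for p, x in zip(float_bits(prev_weights), xored)])


def index_encode(values, n=16):
    """Replace the first n bits of each float32 with an index into a prefix table."""
    bits = float_bits(values)
    prefixes = [b >> (32 - n) for b in bits]
    table = sorted(set(prefixes))  # distinct n-bit prefixes seen in the data
    index_width = max(1, (len(table) - 1).bit_length())
    if index_width >= n:
        # The paragraph requires the index to have fewer than n bits.
        raise ValueError("too many distinct prefixes for an index shorter than n bits")
    lookup = {p: i for i, p in enumerate(table)}
    indices = [lookup[p] for p in prefixes]
    tails = [b & ((1 << (32 - n)) - 1) for b in bits]  # remaining low bits, kept as-is
    return table, index_width, indices, tails


def index_decode(table, indices, tails, n=16):
    """Rebuild the floats by substituting each index with its table prefix."""
    return bits_to_floats([(table[i] << (32 - n)) | t for i, t in zip(indices, tails)])
```

In this sketch both paths are lossless for float32 inputs. The delta path needs the previous round's weights at restore time, and the index path only saves space when the number of distinct prefixes is small relative to 2^n.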

[0039] The present invention is further described below with reference to specific embodiments and the accompanying drawings:

[0040] The intelligent compression method of the present invention comprises the following steps:

[0041] Step 1: After a round of deep learning training ends, the system allocates a new region in GPU memory and copies the weight floating-point data and the optimizer floating-point data into it.
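The snapshot in Step 1 can be sketched as follows. This is a host-memory stand-in only: the source describes a region in GPU memory, which would correspond to a device-side allocation and a device-to-device copy rather than the `array` buffers used here. The function name `snapshot_checkpoint` and the dictionary layout are hypothetical.

```python
from array import array


def snapshot_checkpoint(weights, optimizer_state):
    """Copy both buffers into freshly allocated float32 staging buffers.

    Assumption: host memory stands in for the GPU region described in Step 1.
    """
    return {
        "weights": array("f", weights),            # new buffer, independent of the source
        "optimizer": array("f", optimizer_state),  # new buffer, independent of the source
    }


# Usage: the snapshot is unaffected by later updates to the live buffers.
live_weights = array("f", [0.5, -1.25, 3.0])
live_optimizer = array("f", [0.125, 0.25, 0.5])
staged = snapshot_checkpoint(live_weights, live_optimizer)
live_weights[0] = 9.0  # simulated update from the next training round
```

Because the staged copy is a separate buffer, later steps can read it while the live buffers keep changing. The source does not state this motivation explicitly, so treat it as a plausible reading.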

[0042] Step 2:...
