RGB-D saliency detection method based on dynamic filtering decoupling convolutional network

RGB-D and dynamic filtering technology, applied in the field of computer vision

Embodiment Construction

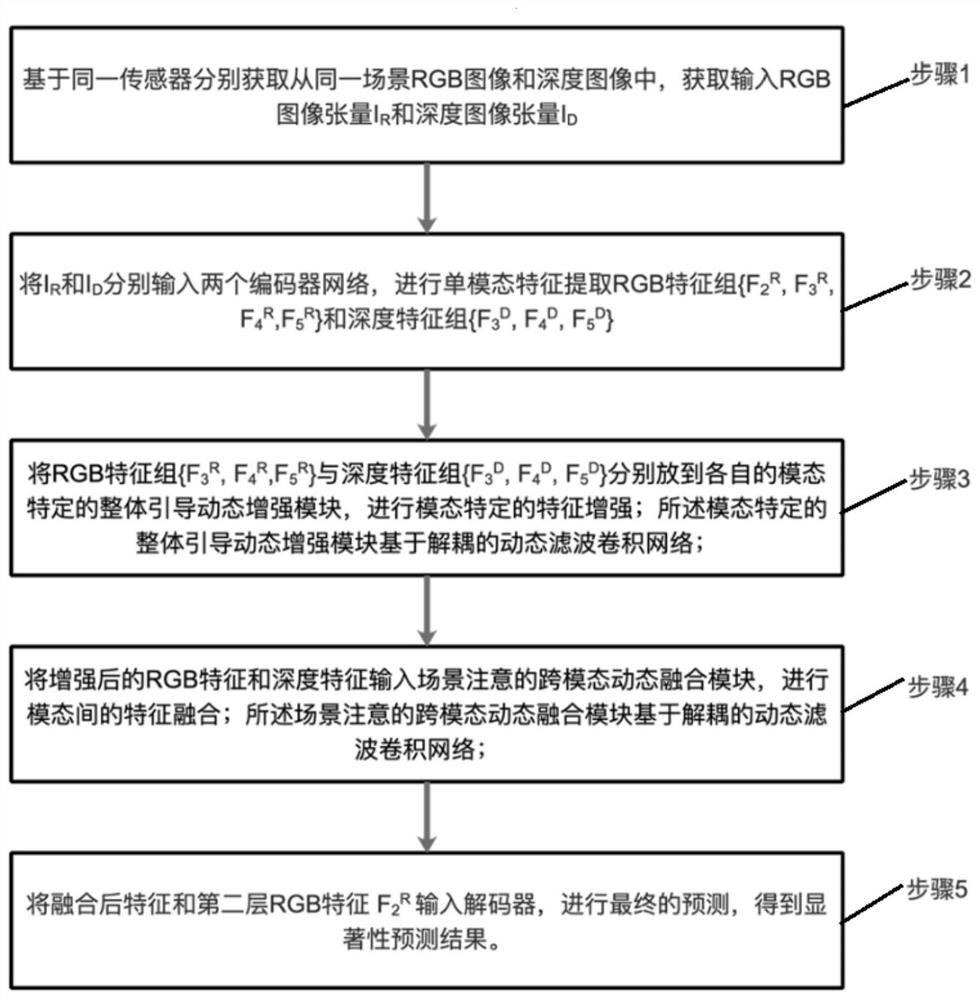

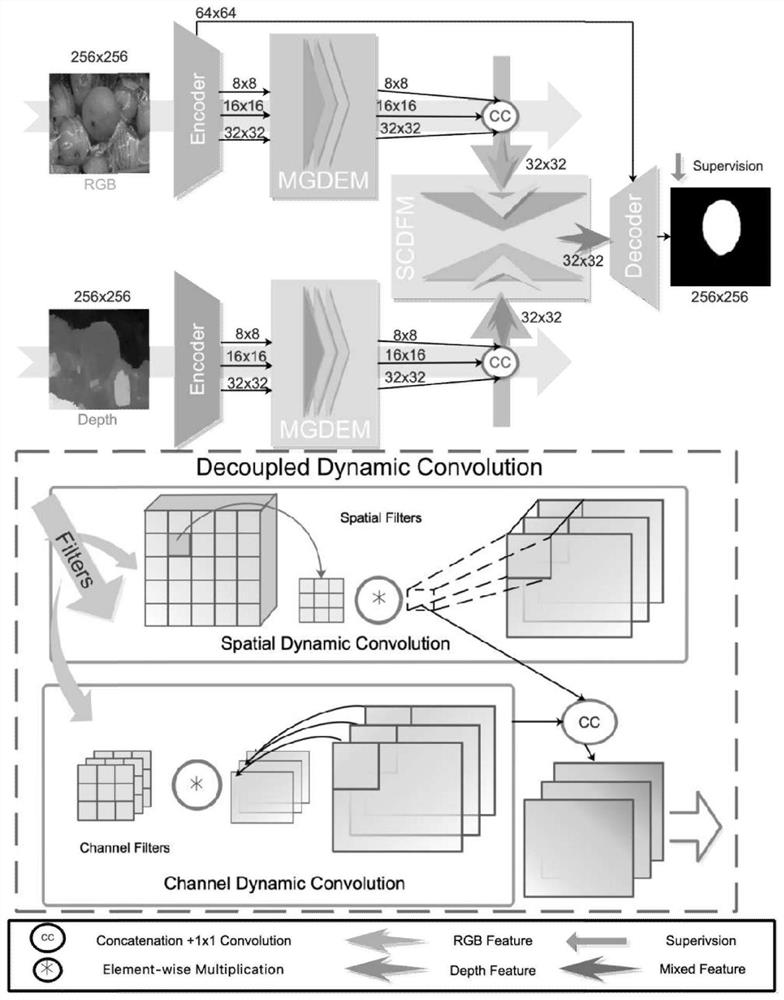

[0055] The object of the present invention is to provide an RGB-D saliency detection method based on a dynamic filtering decoupling convolutional network that effectively fuses the information of the two modalities, RGB and depth, to achieve high-quality saliency detection results in complex scenes. Achieving this object poses two challenges: the first is to design a module that dynamically adapts to the information specific to each modality, and the second is to dynamically build complementary interactions for inter-modal fusion.

[0056] The core idea of the present invention is to design a decoupled dynamic filtering saliency detection network that dynamically promotes feature interaction by decoupling dynamic convolution into a spatial dimension and a channel dimension, thereby addressing both the intra-modal and the inter-modal problems.
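The spatial/channel decoupling described in [0056] can be illustrated with a small NumPy sketch. It assumes the formulation used by decoupled dynamic filter networks in general: a spatial branch predicts one k×k kernel per pixel (shared by all channels), a channel branch predicts one k×k kernel per channel (shared by all pixels), and the effective depthwise kernel at each channel and position is the elementwise product of the two. The branch designs (`spatial_filter_branch`, `channel_filter_branch`), the random weights, the omission of any kernel normalization, and the additive fusion of an intra-modal and an inter-modal path are hypothetical illustrations, not the network claimed in this patent.

```python
import numpy as np


def decoupled_dynamic_filter(x, spatial_kernels, channel_kernels):
    """Apply a decoupled dynamic (depthwise) filter to a feature map.

    x:               (C, H, W) input features
    spatial_kernels: (k*k, H, W) one k x k kernel per pixel, shared across channels
    channel_kernels: (C, k*k)    one k x k kernel per channel, shared across pixels
    The effective kernel at (c, i, j) is spatial_kernels[:, i, j] * channel_kernels[c, :].
    """
    C, H, W = x.shape
    k = int(round(np.sqrt(spatial_kernels.shape[0])))
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    # Gather the k x k neighbourhood of every pixel; tap index t = dy * k + dx.
    patches = np.stack(
        [xp[:, dy:dy + H, dx:dx + W] for dy in range(k) for dx in range(k)],
        axis=1,
    )  # (C, k*k, H, W)
    # Combine the two decoupled kernels into a per-(channel, pixel) kernel.
    kernel = spatial_kernels[None, :, :, :] * channel_kernels[:, :, None, None]
    return (patches * kernel).sum(axis=1)  # (C, H, W)


def spatial_filter_branch(guide, w_s):
    """Hypothetical spatial branch: a 1x1 convolution mapping C channels to k*k taps."""
    return np.einsum("tc,chw->thw", w_s, guide)  # (k*k, H, W)


def channel_filter_branch(guide, w_c):
    """Hypothetical channel branch: global average pooling followed by a linear layer."""
    pooled = guide.mean(axis=(1, 2))  # (C,)
    return (w_c @ pooled).reshape(guide.shape[0], -1)  # (C, k*k)


rng = np.random.default_rng(0)
C, H, W, k = 8, 16, 16, 3
rgb = rng.standard_normal((C, H, W))    # stand-in for RGB features
depth = rng.standard_normal((C, H, W))  # stand-in for depth features
w_s = 0.1 * rng.standard_normal((k * k, C))      # hypothetical branch weights
w_c = 0.1 * rng.standard_normal((C * k * k, C))

# Intra-modal path: RGB features filtered with kernels predicted from RGB itself.
intra = decoupled_dynamic_filter(
    rgb, spatial_filter_branch(rgb, w_s), channel_filter_branch(rgb, w_c)
)
# Inter-modal path (hypothetical): kernels predicted from depth are applied to RGB.
inter = decoupled_dynamic_filter(
    rgb, spatial_filter_branch(depth, w_s), channel_filter_branch(depth, w_c)
)
fused = intra + inter
print(fused.shape)  # (8, 16, 16)
```

One practical reason for decoupling is cost: a fully dynamic depthwise filter needs C·k²·H·W predicted values, whereas the decoupled form needs only k²·H·W + C·k², which keeps per-pixel adaptivity affordable for dense prediction such as saliency maps.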

[0057] In order to enable those skilled in the art to better understand the solutions of the present invention, the following will clearly and comp...
