Text-to-image generation method based on a fusion compensation generative adversarial network

A technique for generating and synthesizing images, applicable to 2D image generation, biological neural network models, and image data processing. It reduces space and time complexity, lowers training difficulty and training time, and avoids expensive computation.

Embodiment Construction

[0028] To help those skilled in the art understand the technical content of the present invention, the invention is further explained below in conjunction with the accompanying drawings.

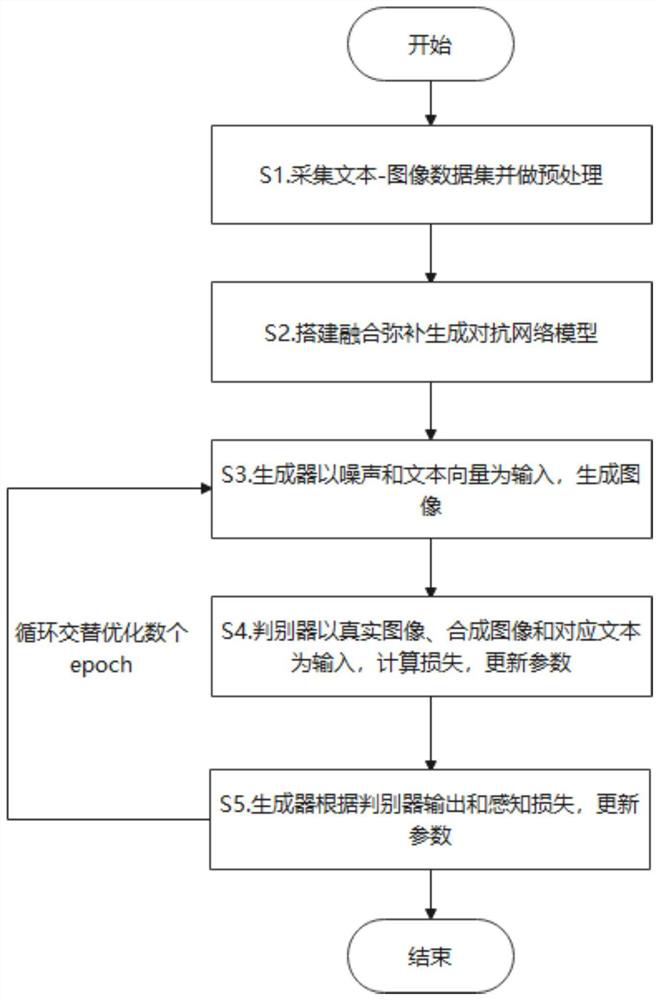

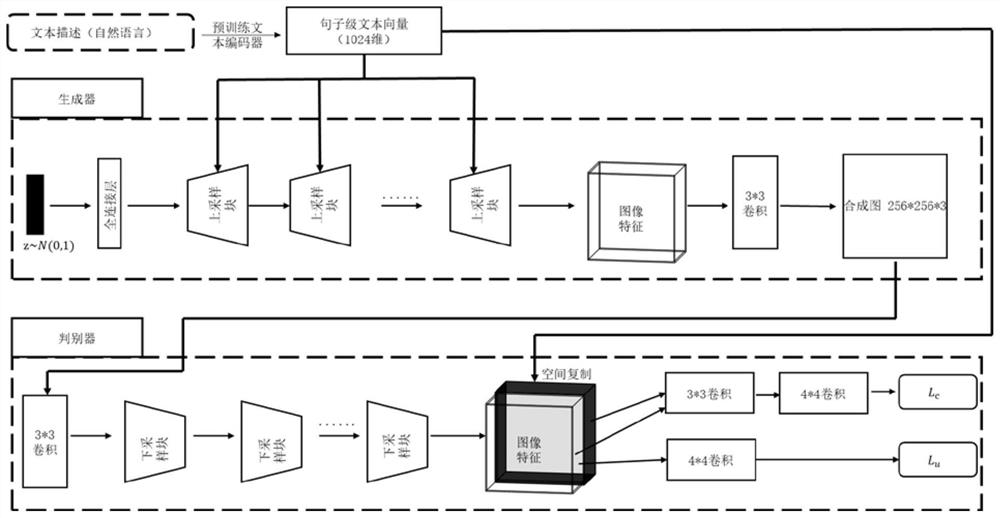

[0029] As shown in Figure 1, the text-to-image generation method of the present invention, based on a fusion compensation generative adversarial network, comprises the following steps:

[0030] S1. Establish a data set and perform preprocessing;

[0031] The dataset used for the text-to-image task consists of multiple text-image pairs, where the text is a natural language description of the subject of the image. An image can correspond to more than ten different text descriptions, and each sentence uses different words to describe the image from a different angle. For example, the image shown in Figure 2 corresponds to the following 10 text descriptions from different angles:

[0032] 1. The medium sized bird has a dark gray color, a black downward...
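
The dataset layout described in [0031], where one image is paired with several text descriptions, can be sketched roughly as follows. This is only an illustrative sketch and is not taken from the patent: the names `ImageCaptions`, `group_pairs`, and `sample_pair`, as well as the idea of drawing one caption at random per training step, are assumptions made for the example.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Tuple


@dataclass
class ImageCaptions:
    """One image and all text descriptions paired with it (hypothetical container)."""
    image_path: str
    captions: List[str] = field(default_factory=list)


def group_pairs(pairs: Iterable[Tuple[str, str]]) -> List[ImageCaptions]:
    """Group flat (image_path, caption) pairs by image, keeping first-seen order."""
    grouped: Dict[str, ImageCaptions] = {}
    for image_path, caption in pairs:
        entry = grouped.setdefault(image_path, ImageCaptions(image_path))
        entry.captions.append(caption)
    return list(grouped.values())


def sample_pair(entry: ImageCaptions, rng: random.Random) -> Tuple[str, str]:
    """Pick one caption at random for this image (an assumed sampling strategy)."""
    return entry.image_path, rng.choice(entry.captions)
```

A loader built this way would call `group_pairs` once during preprocessing and `sample_pair` each time an image is drawn, so that different descriptions of the same image are seen over the course of training.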
