Incremental remote sensing image three-dimensional reconstruction method based on space occupancy probability fusion

A space occupancy and remote sensing image technology, applied in image analysis, image enhancement, image data processing and related fields. It addresses problems such as the inability to optimize building models and the low precision of 3D building structure features, and saves manpower and material resources.

Examples

Specific Embodiment 1

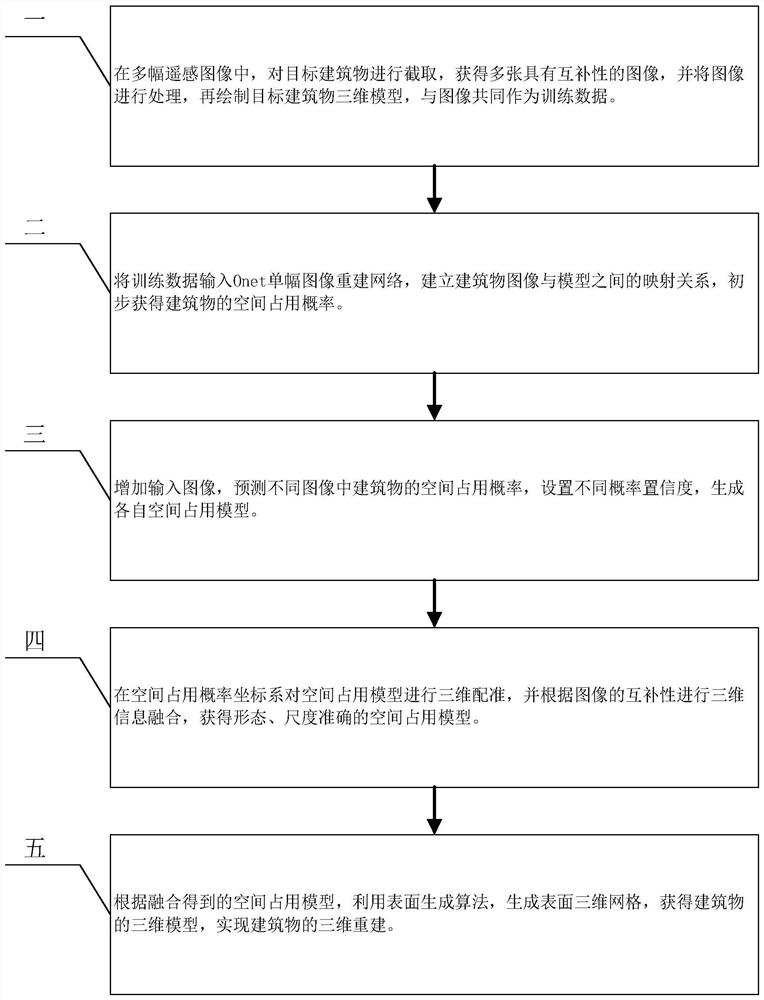

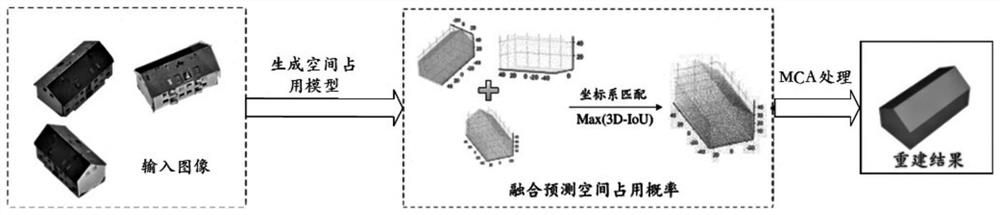

[0017] Embodiment 1: this embodiment is described with reference to Figure 1. The incremental three-dimensional reconstruction method for oblique remote sensing images based on spatial occupancy probability feature fusion described in this embodiment includes:

[0018] Step 1. From multiple remote sensing images containing multiple buildings, select all target buildings to be reconstructed and crop each one as a separate target, yielding remote sensing images of a single building from multiple different angles. Based on existing model data, manually model each target building with modeling tools to obtain a building model that corresponds one-to-one with each building, and use all building remote sensing images together with their corresponding building models as the training data. The shape center of the building should be at the center of the image, and the proportion of the building in the image should be mor...
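The centering requirement in Step 1 can be illustrated with a small cropping routine. This is a minimal sketch under stated assumptions, not the patent's procedure: the building footprint is assumed to be available as a binary mask, the "shape center" is approximated by the pixel centroid of that mask, and `crop_centered_on_building` and its `min_ratio` parameter are hypothetical names. Because the required building proportion is truncated in the source, `min_ratio` is left to the caller.

```python
from typing import List, Optional, Sequence


def crop_centered_on_building(
    image: Sequence[Sequence[int]],
    mask: Sequence[Sequence[bool]],
    min_ratio: float,
) -> Optional[List[List[int]]]:
    """Crop a square window centered on the building's shape center.

    Hypothetical helper: the shape center is approximated by the centroid of
    the building mask, and min_ratio is a caller-supplied threshold because
    the required building proportion is truncated in the source text.
    Returns None if the mask is empty, the window leaves the image, or the
    building covers less than min_ratio of the window.
    """
    # Pixel coordinates (row, col) belonging to the building.
    pts = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        return None
    # Centroid of the building pixels, rounded down to a pixel index.
    cy = sum(y for y, _ in pts) // len(pts)
    cx = sum(x for _, x in pts) // len(pts)
    # Smallest half-width whose window [c - half, c + half) holds every pixel.
    half = max(max(abs(y - cy), abs(x - cx)) for y, x in pts) + 1
    y0, y1, x0, x1 = cy - half, cy + half, cx - half, cx + half
    if y0 < 0 or x0 < 0 or y1 > len(mask) or x1 > len(mask[0]):
        return None  # a centered window would extend past the image border
    # Every building pixel lies inside the window, so coverage is a simple ratio.
    ratio = len(pts) / ((y1 - y0) * (x1 - x0))
    if ratio < min_ratio:
        return None
    return [list(row[x0:x1]) for row in image[y0:y1]]


img = [[10 * r + c for c in range(10)] for r in range(10)]
m = [[3 <= r <= 5 and 3 <= c <= 5 for c in range(10)] for r in range(10)]
crop = crop_centered_on_building(img, m, 0.5)  # 4 x 4 window starting at img[2][2]
```

Views in which a centered window would leave the image are skipped rather than padded; padding is an equally reasonable choice if every view must be kept.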

Specific Embodiment 2

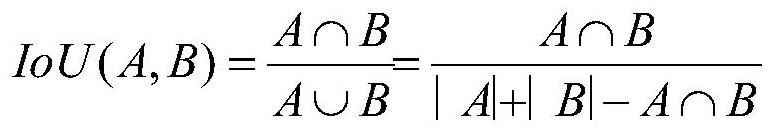

[0023] Embodiment 2: this embodiment differs from Embodiment 1 in that the 3D-IoU in step 4 is calculated as follows:

[0024] $$\text{3D-IoU} = \frac{|A \cap B|}{|A \cup B|}$$

[0025] where A and B are space occupancy models of the building.
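3D-IoU is conventionally defined as |A ∩ B| / |A ∪ B|, and it can be computed directly when each space occupancy model is represented as a set of occupied voxels. A minimal sketch, assuming a voxel-set representation and a 0.5 threshold for turning occupancy probabilities into occupied voxels (both are assumptions, not taken from the source); `to_voxel_set` and `iou_3d` are hypothetical helper names.

```python
from typing import Sequence, Set, Tuple

Voxel = Tuple[int, int, int]


def to_voxel_set(grid: Sequence[Sequence[Sequence[float]]], threshold: float = 0.5) -> Set[Voxel]:
    """Indices (i, j, k) whose occupancy probability is at least `threshold`.

    The 0.5 default is an assumption, not a value from the source.
    """
    return {
        (i, j, k)
        for i, plane in enumerate(grid)
        for j, row in enumerate(plane)
        for k, p in enumerate(row)
        if p >= threshold
    }


def iou_3d(a: Set[Voxel], b: Set[Voxel]) -> float:
    """3D-IoU = |A ∩ B| / |A ∪ B| for two occupancy models given as voxel sets."""
    union = len(a | b)
    if union == 0:
        return 1.0  # convention chosen here: two empty models count as identical
    return len(a & b) / union


a = to_voxel_set([[[0.9, 0.2], [0.6, 0.5]]])  # {(0,0,0), (0,1,0), (0,1,1)}
b = {(0, 0, 0), (0, 1, 0), (1, 1, 1)}
print(iou_3d(a, b))  # 2 shared voxels / 4 in the union = 0.5
```

Using Python sets keeps intersection and union exact and memory-proportional to the occupied voxels; a dense boolean grid would work equally well for small volumes.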

[0026] Other steps and parameters are the same as those in Embodiment 1.

Specific Embodiment 3

[0027] Embodiment 3: this embodiment differs from Embodiment 1 or 2 in that, in step 4, all space occupancy models are fused using three-dimensional information. The fusion weights are set optimally during fusion: based on the angle and position information of the building obtained from each image, the space occupancy probability of each sampling point is assigned a corresponding weight μ, and the fused space occupancy probability of each sampling point is calculated as:

[0028] $$P_b = \mu_1 P_1 + \mu_2 P_2 + \cdots + \mu_n P_n$$

[0029] where $P_b$ is the space occupancy probability after model fusion, $P_n$ is the space occupancy probability of the $n$-th model, $\mu_n$ is the weight corresponding to the $n$-th model's space occupancy probability, and $\mu_1 + \mu_2 + \cdots + \mu_n = 1$.
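The weighted fusion P_b = μ_1 P_1 + ... + μ_n P_n can be sketched as follows, assuming each model's occupancy probabilities have been evaluated at the same list of sampling points. `fuse_occupancy` is a hypothetical name, and the weights are renormalized so that they sum to 1 as the constraint on μ requires. How μ is derived from angle and position information is not specified in this section, so the weights are simply passed in.

```python
from typing import List, Sequence


def fuse_occupancy(probs: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted fusion P_b = mu_1*P_1 + ... + mu_n*P_n at every sampling point.

    probs[i][j] is the occupancy probability of sampling point j in model i;
    weights[i] is the (unnormalized) weight of model i. Weights are rescaled
    to sum to 1, matching the constraint mu_1 + ... + mu_n = 1.
    """
    if not probs or len(probs) != len(weights):
        raise ValueError("need one weight per model and at least one model")
    n_points = len(probs[0])
    if any(len(p) != n_points for p in probs):
        raise ValueError("all models must be sampled at the same points")
    total = sum(weights)
    if total <= 0 or any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative with a positive sum")
    mu = [w / total for w in weights]
    # Convex combination per point, so fused values stay in [0, 1] when inputs do.
    return [sum(m * p[j] for m, p in zip(mu, probs)) for j in range(n_points)]


# Two models sampled at three points; the first model is trusted three times as much.
fused = fuse_occupancy([[1.0, 0.0, 0.4], [0.0, 1.0, 0.8]], [3.0, 1.0])
```

If the weights are meant to vary per sampling point, as the description of angle- and position-dependent weights suggests, the same normalization can be applied separately at each point.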

[0030] Other steps and parameters are the same as those in Embodiment 1 or Embodiment 2.
