Federated learning privacy protection method based on homomorphic encryption and secret sharing

A technology combining secret sharing and homomorphic encryption, applied in fields such as homomorphic encryption communication, neural learning methods, and digital data protection, with the technical effect of preventing easy recovery.

Embodiment Construction

[0024] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

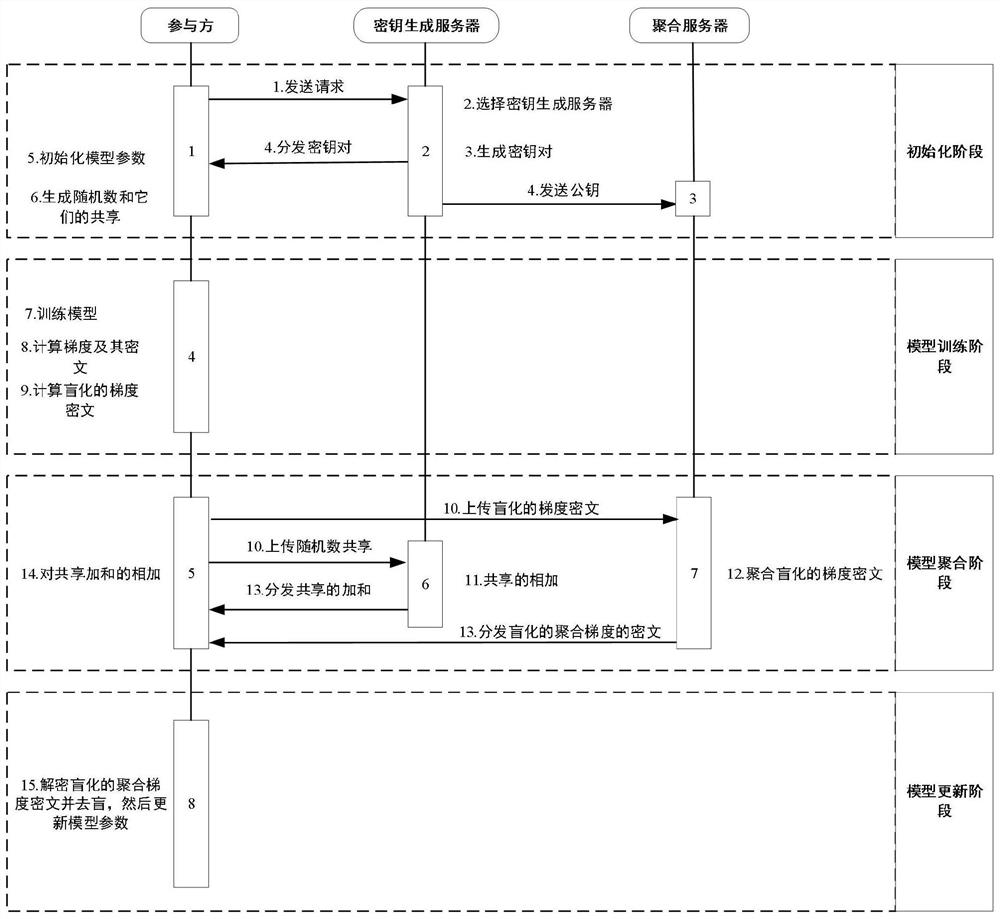

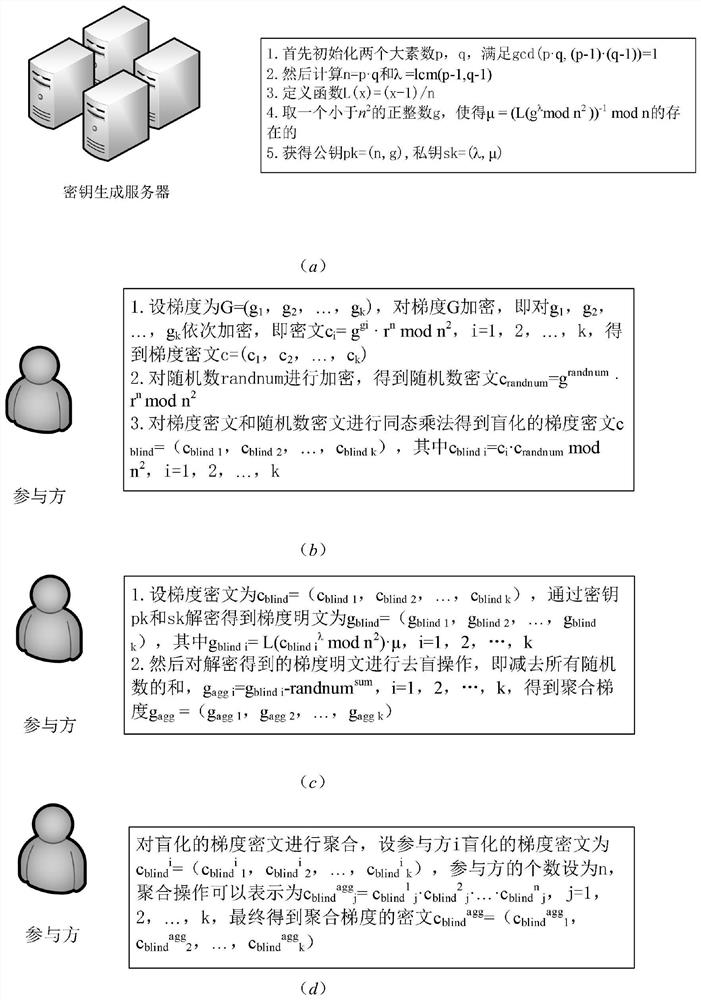

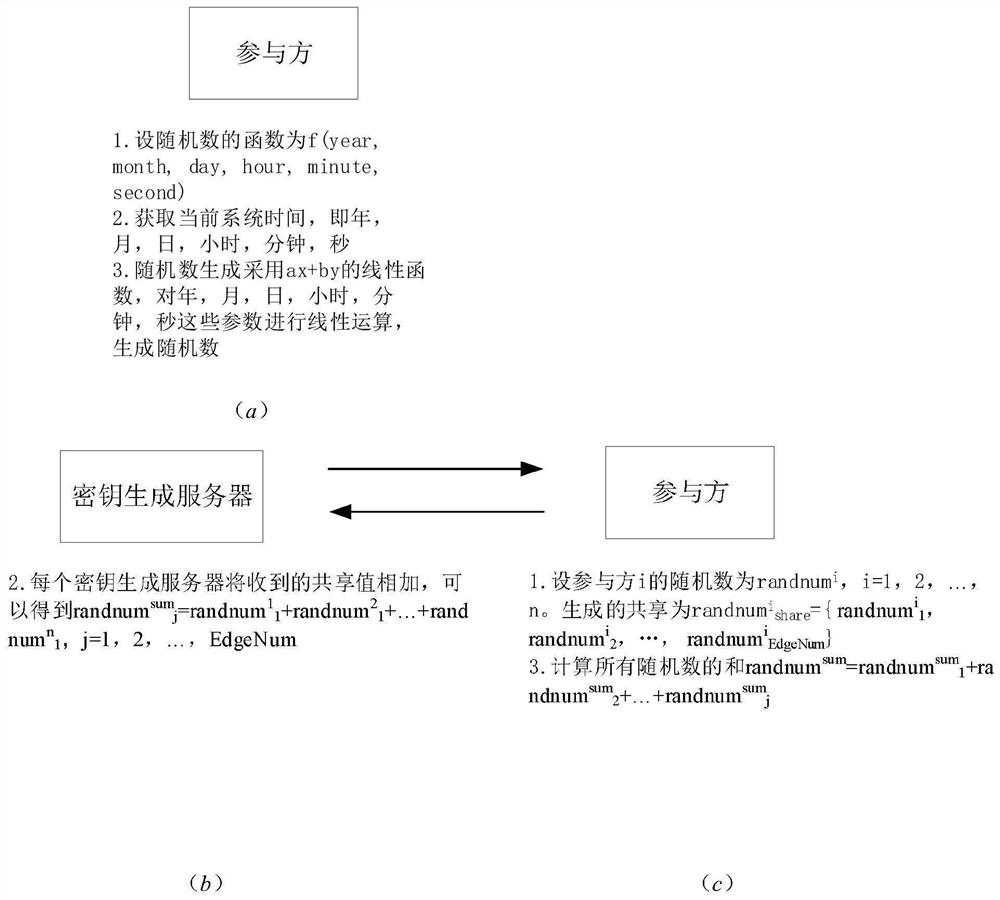

[0025] As shown in Figure 1, the specific implementation process of the federated learning privacy protection method based on homomorphic encryption and secret sharing in the present invention includes the following steps:

[0026] Step 1: Initialization phase.

[0027] Each participant initializes the required parameters locally, including the model parameters, the key pair, random numbers, and shares.
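The text does not specify how the random numbers and shares are formed. As an assumption, the minimal sketch below uses additive secret sharing over a prime field, one common construction: a value is split into n random-looking shares whose sum modulo a prime equals the value, so any n-1 shares alone reveal nothing about it. The modulus `PRIME` and the helper names `share` and `reconstruct` are hypothetical and not taken from the source.

```python
import secrets

# Assumption: a large prime modulus for additive sharing; the source names none.
PRIME = 2**61 - 1  # a Mersenne prime


def share(value: int, n_parties: int, prime: int = PRIME) -> list[int]:
    """Split `value` into `n_parties` additive shares modulo `prime` (hypothetical helper)."""
    if n_parties < 2:
        raise ValueError("need at least two parties")
    # The first n-1 shares are uniformly random field elements.
    shares = [secrets.randbelow(prime) for _ in range(n_parties - 1)]
    # The last share is chosen so that all shares sum to `value` mod `prime`.
    shares.append((value - sum(shares)) % prime)
    return shares


def reconstruct(shares: list[int], prime: int = PRIME) -> int:
    """Recover the secret by summing every share modulo `prime`."""
    return sum(shares) % prime


if __name__ == "__main__":
    parts = share(42, 3)
    print(parts)               # three random-looking field elements
    print(reconstruct(parts))  # 42
```

Because each share except the last is drawn with `secrets.randbelow`, the shares differ on every run; only their sum is fixed.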

[0028] Step 1.1: Initialization of model parameters.

[0029] (1) Each participant locally initializes the neural network model nn, the learning rate α, and the number of training rounds epoch; nn, α, and epoch are identical across all participants.
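The text requires nn, α, and epoch to be identical for every participant but does not say how this is ensured. One possible approach, assumed here and not stated in the source, is for all participants to build the model from the same configuration and a shared random seed. The sketch below is illustrative only: `TrainingConfig`, `init_model`, the `seed` field, and the Xavier/Glorot uniform initialization are hypothetical choices.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    # Hypothetical shared configuration; field names are illustrative only.
    layer_sizes: tuple[int, ...]  # e.g. (4, 3, 2): input, hidden, output widths
    learning_rate: float          # alpha in the text (carried, not used at init)
    epochs: int                   # number of training rounds ("epoch")
    seed: int                     # assumed shared seed so every nn starts identical


def init_model(cfg: TrainingConfig) -> list[list[list[float]]]:
    """Return one fan_in x fan_out weight matrix per layer from a seeded RNG."""
    rng = random.Random(cfg.seed)  # private RNG: same seed -> same weights
    weights = []
    for fan_in, fan_out in zip(cfg.layer_sizes, cfg.layer_sizes[1:]):
        bound = (6.0 / (fan_in + fan_out)) ** 0.5  # Xavier/Glorot uniform bound
        weights.append([[rng.uniform(-bound, bound) for _ in range(fan_out)]
                        for _ in range(fan_in)])
    return weights


if __name__ == "__main__":
    cfg = TrainingConfig(layer_sizes=(4, 3, 2), learning_rate=0.01, epochs=10, seed=2024)
    # Two participants initializing independently obtain the same model.
    print(init_model(cfg) == init_model(cfg))  # True
```

Using a dedicated `random.Random` instance rather than the module-level generator keeps the initialization reproducible even if other code consumes random numbers.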

[0030] Step 1.2: Initialization of the key pair.

[0031] (1) The key generation server generates the public key pk and the private key sk and distributes them to e...
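The source does not name the homomorphic encryption scheme behind pk and sk. As an assumption, the sketch below uses textbook Paillier, an additively homomorphic cryptosystem: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The function names are hypothetical, and the tiny primes in the usage example give no real security; they only make the arithmetic easy to follow.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation from two distinct primes (NOT secure sizes)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1  # common simplification of the generator
    n_sq = n * n
    # mu = L(g^lam mod n^2)^(-1) mod n, where L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n_sq) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)  # (public key pk, private key sk)


def encrypt(pk, m: int) -> int:
    """Encrypt plaintext 0 <= m < n with a fresh random r coprime to n."""
    n, g = pk
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(sk, c: int) -> int:
    """Recover m = L(c^lam mod n^2) * mu mod n."""
    lam, mu, n = sk
    n_sq = n * n
    return ((pow(c, lam, n_sq) - 1) // n * mu) % n


def add_encrypted(pk, c1: int, c2: int) -> int:
    """Homomorphic addition: the product of ciphertexts decrypts to m1 + m2 mod n."""
    n, _ = pk
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    pk, sk = keygen(293, 433)  # toy primes for illustration only
    c = add_encrypted(pk, encrypt(pk, 15), encrypt(pk, 27))
    print(decrypt(sk, c))  # 42
```

Since a server holding only pk can combine ciphertexts with `add_encrypted` without learning the plaintexts, additive schemes of this kind suit aggregation in federated learning; whether the patented method uses Paillier specifically is not stated in the text.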
