Distributed training gradient compression acceleration method based on AllReduce

A distributed gradient technique applied in the field of deep learning

Detailed Description of the Embodiments

[0018] The principles and features of the present invention are described below with reference to the accompanying drawings. The examples given serve only to explain the invention and are not intended to limit its scope.

[0019] An embodiment of the present invention provides an AllReduce-based gradient compression acceleration method for distributed training, which is explained in detail below.

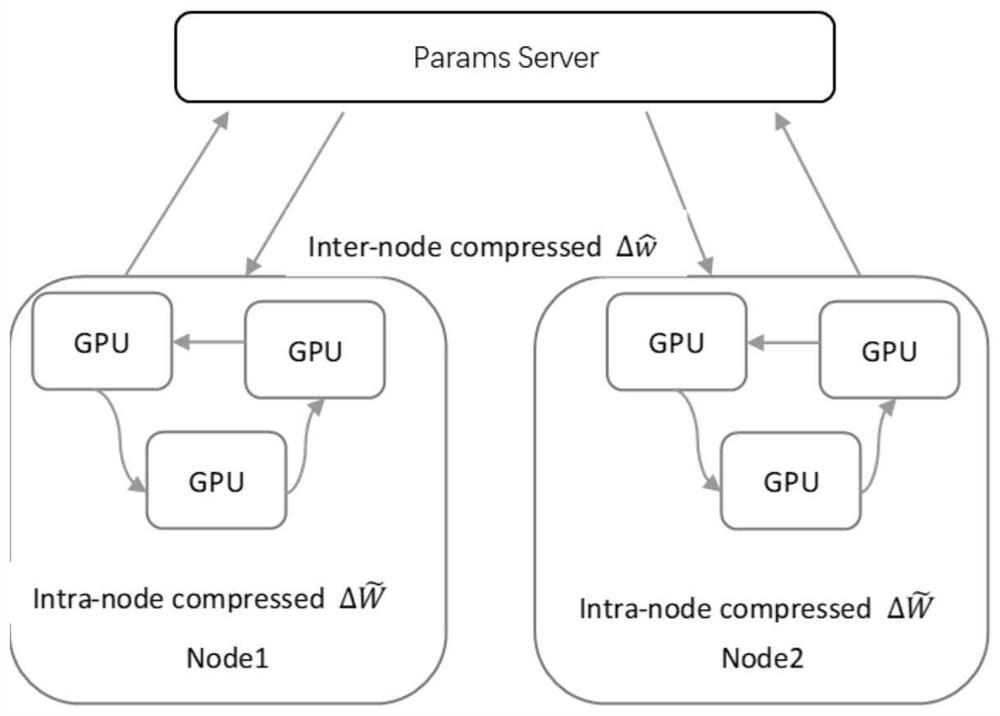

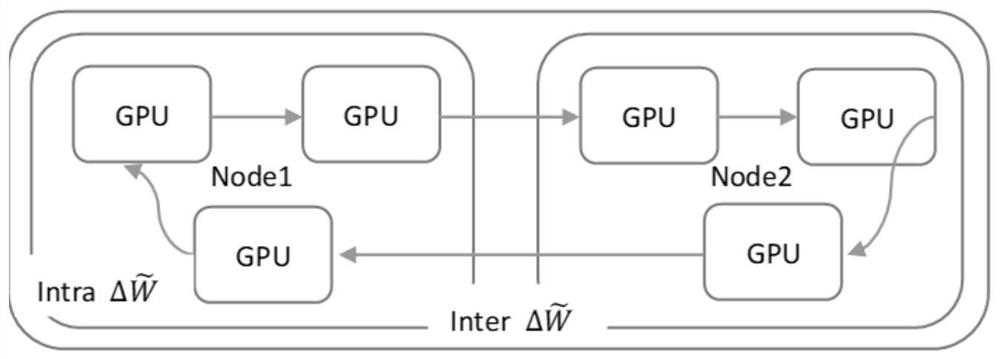

[0020] Figure 1 shows a distributed deep gradient compression training architecture with a Parameter Server (PS) structure, and Figure 2 shows an AllReduce-based distributed deep gradient compression training framework according to an embodiment of the present invention. In the PS architecture, the GPUs within each machine form a closed loop to transmit gradients after intra-node compression; there is no communication connection between the worker machines, and the gradients after inter-node compression are transmitted between the w...
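The ring-style aggregation of compressed gradients can be illustrated with a small single-process simulation. This is a sketch under stated assumptions, not the patented method: the text does not name the compressor, so top-k sparsification with local residual accumulation (the scheme used in Deep Gradient Compression) is assumed here, and the functions `top_k_sparsify`, `compress_with_residual`, and `ring_allreduce` are hypothetical names introduced for illustration. Each worker compresses its own gradient, then the compressed buffers are summed by a ring AllReduce (reduce-scatter followed by all-gather) in which every worker communicates only with its ring neighbour.

```python
from typing import List, Tuple


def top_k_sparsify(grad: List[float], k: int) -> List[float]:
    """Keep the k largest-magnitude entries and zero the rest (assumed compressor)."""
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    keep = set(order[:k])
    return [g if i in keep else 0.0 for i, g in enumerate(grad)]


def compress_with_residual(
    grad: List[float], residual: List[float], k: int
) -> Tuple[List[float], List[float]]:
    """Add the locally accumulated residual, sparsify, and keep the unsent part.

    Assumption: error feedback as in Deep Gradient Compression; the source
    text does not specify how unsent gradient values are handled.
    """
    acc = [g + r for g, r in zip(grad, residual)]
    sent = top_k_sparsify(acc, k)
    new_residual = [a - s for a, s in zip(acc, sent)]
    return sent, new_residual


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring AllReduce: reduce-scatter, then all-gather, over n workers.

    Each buffer is split into n chunks. In every step, worker r sends one
    chunk to its ring neighbour (r + 1) % n, so after 2 * (n - 1) steps
    every worker holds the element-wise sum of all input buffers.
    """
    n = len(buffers)
    length = len(buffers[0])
    bufs = [list(b) for b in buffers]  # copy so the inputs are not modified
    bounds = [(c * length // n, (c + 1) * length // n) for c in range(n)]

    # Reduce-scatter: worker r ends up owning the full sum of chunk (r + 1) % n.
    for step in range(n - 1):
        # Snapshot all outgoing chunks first so every send in a step is simultaneous.
        sends = [(r, (r - step) % n) for r in range(n)]
        payloads = [bufs[r][bounds[c][0]:bounds[c][1]] for r, c in sends]
        for (r, c), data in zip(sends, payloads):
            lo = bounds[c][0]
            dst = bufs[(r + 1) % n]
            for i, v in enumerate(data):
                dst[lo + i] += v

    # All-gather: pass the fully reduced chunks around the ring.
    for step in range(n - 1):
        sends = [(r, (r + 1 - step) % n) for r in range(n)]
        payloads = [bufs[r][bounds[c][0]:bounds[c][1]] for r, c in sends]
        for (r, c), data in zip(sends, payloads):
            lo, hi = bounds[c]
            bufs[(r + 1) % n][lo:hi] = data

    return bufs


# Usage: three hypothetical workers, four-element gradients, top-2 sparsification.
grads = [[0.9, -0.1, 0.05, 0.4], [0.2, -0.8, 0.3, 0.0], [0.1, 0.0, -0.6, 0.7]]
residuals = [[0.0] * 4 for _ in grads]
sent = []
for w, g in enumerate(grads):
    s, residuals[w] = compress_with_residual(g, residuals[w], k=2)
    sent.append(s)

reduced = ring_allreduce(sent)
for w, buf in enumerate(reduced):
    print(f"worker {w}: {buf}")
```

For simplicity the sparsified gradients are carried as dense lists padded with zeros, so the simulation shows the data flow rather than the bandwidth savings. In practice the top-k indices usually differ from worker to worker, so the sum of sparse gradients is denser than any single input; this is one reason compressed AllReduce is harder to implement than compressed push/pull to a parameter server. The same ring routine could, under these assumptions, be run first among the GPUs of one machine and then across machines.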