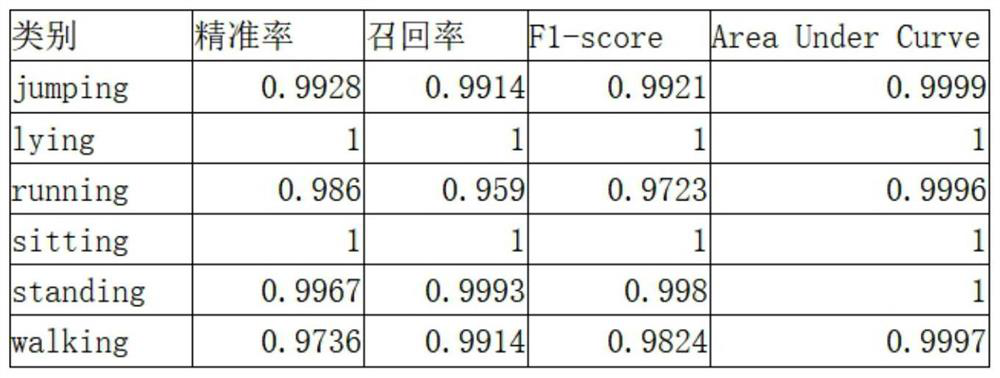

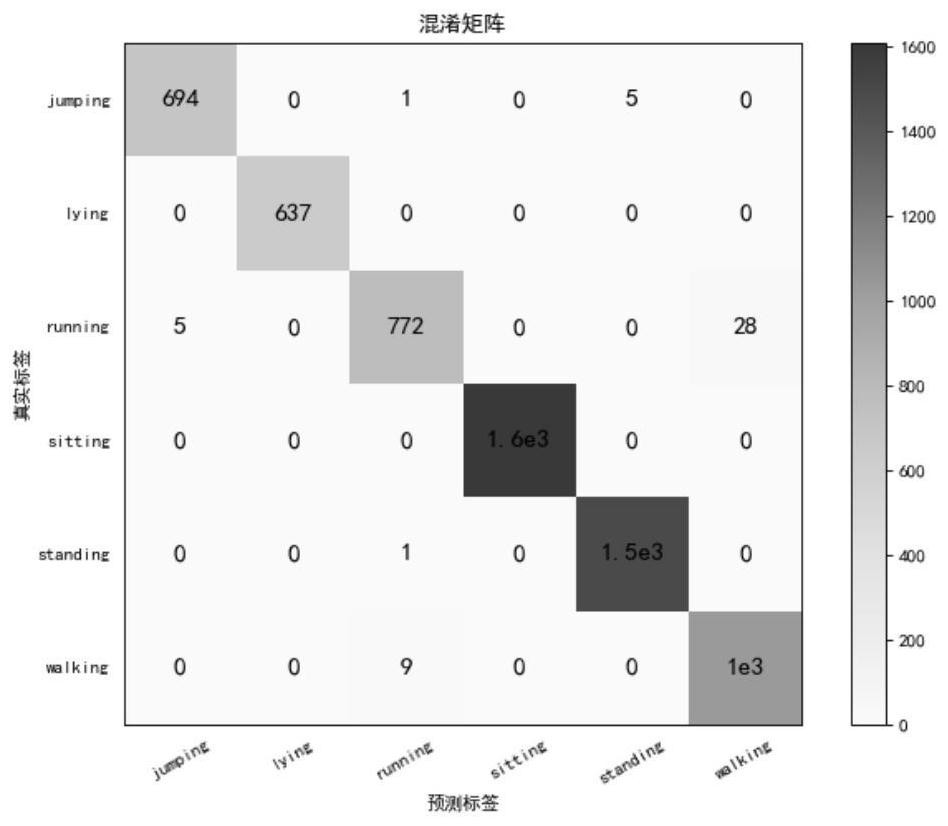

Infrared human body behavior recognition method based on MobileNetV3 network model

A network model and recognition method technology, applied in the field of deep learning, can solve problems such as waste of computing resources, overfitting, insufficient training data, etc., and achieve the effect of improving accuracy, reducing parameters and calculation amount

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0027] In order to make the purpose, technical solutions and advantages of the embodiments of the present invention clearer, the technical solutions in the embodiments of the present invention will be clearly and completely described below in conjunction with the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments It is some embodiments of the present invention, but not all of them. Based on the implementation manners in the present invention, all other implementation manners obtained by persons of ordinary skill in the art without creative efforts fall within the protection scope of the present invention. Accordingly, the following detailed description of the embodiments of the invention provided in the accompanying drawings is not intended to limit the scope of the claimed invention, but merely represents selected embodiments of the invention. Based on the implementation manners in the present invention, all other implement...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D Engineer

- R&D Manager

- IP Professional

- Industry Leading Data Capabilities

- Powerful AI technology

- Patent DNA Extraction

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2024 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com