Adaptive network suitable for high-reflection bright spot segmentation in retinal optical coherence tomography image

An adaptive network technology for optical coherence tomography, applied in image analysis, image enhancement, image data processing and related areas. It addresses poor segmentation performance on small targets and the inability to segment objects adaptively. It achieves good segmentation performance, optimizes the model design, and overcomes the effects of data inconsistency.


Embodiment

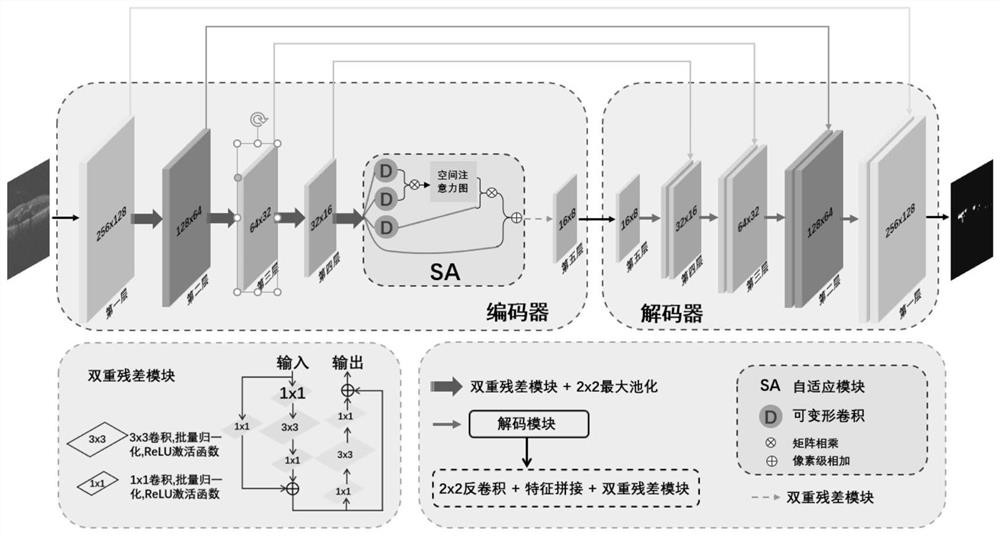

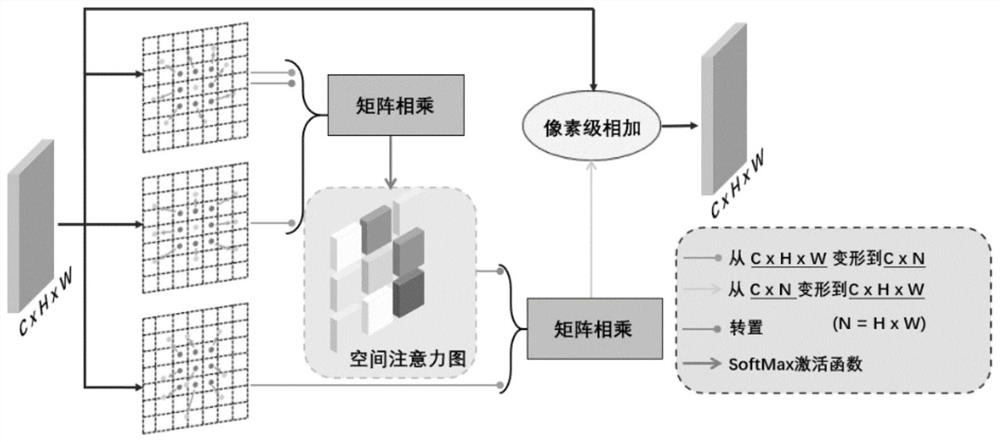

[0022] Example: Referring to Figure 1, an adaptive network suitable for segmenting highly reflective bright spots in retinal optical coherence tomography images is shown. It includes a feature encoding module, an adaptive SA module applied to the deep layer of the encoder, and multiple feature decoding modules arranged in the decoder path.

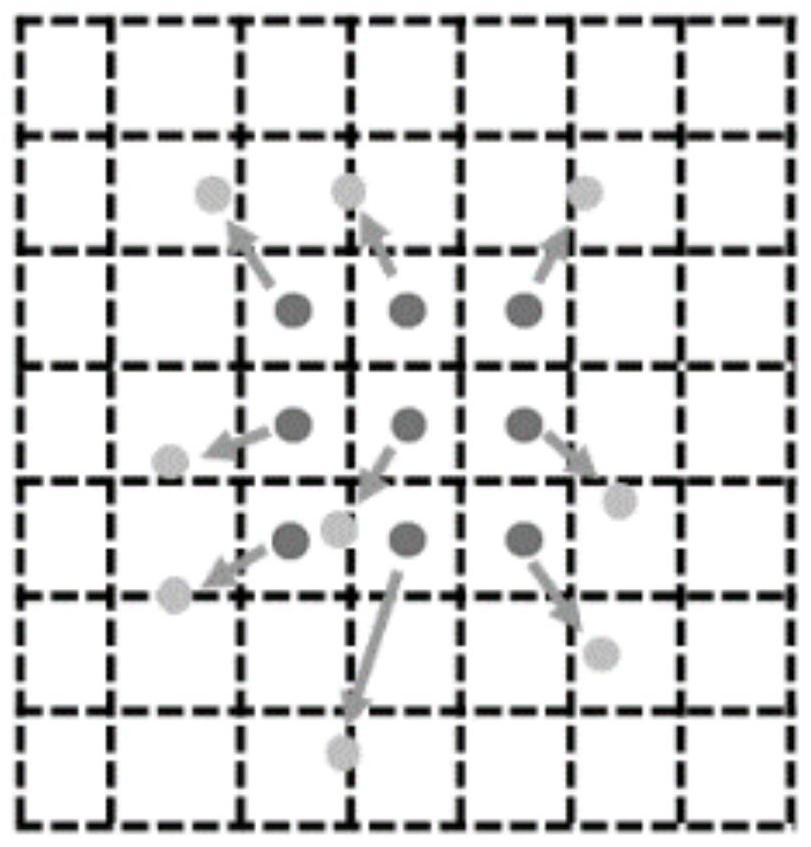

[0023] The feature encoding module includes a feature extraction unit and a dual residual DR module embedded at the downsampling position of the feature extraction unit. The dual residual DR module includes two residual blocks. Each residual block includes a 1×1 convolutional layer, a 3×3 convolutional layer, a 1×1 convolutional layer, a batch normalization layer, and a ReLU activation function. In this embodiment, the feature extraction unit is a U-Net encoder layer, and the output of the fourth layer of the feature extraction unit is connected to the feature input terminal. In order to obtain representative feature maps, the e...
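The layer sequence described in [0023] can be illustrated with a minimal, framework-free NumPy sketch. It is an illustration under stated assumptions, not the patented implementation. The text gives only the layer order (1×1 conv, 3×3 conv, 1×1 conv, batch normalization, ReLU); the placement of the skip connection before the ReLU, the bottleneck width `mid`, the random weight initialization, and the per-sample normalization without learned scale and shift are all assumptions. All function names are hypothetical.

```python
import numpy as np

def conv1x1(x, w):
    # x: (C_in, H, W), w: (C_out, C_in); a 1x1 conv is a per-pixel channel mix
    return np.einsum("oc,chw->ohw", w, x)

def conv3x3(x, w):
    # x: (C_in, H, W), w: (C_out, C_in, 3, 3); zero padding keeps H and W
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx],
                             xp[:, dy:dy + h, dx:dx + wd])
    return out

def batch_norm(x, eps=1e-5):
    # Simplification: per-channel statistics from a single sample,
    # with no learned scale or shift
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def residual_block(x, params):
    # Layer order from [0023]: 1x1 conv, 3x3 conv, 1x1 conv, BN, ReLU
    w1, w2, w3 = params
    y = conv1x1(x, w1)
    y = conv3x3(y, w2)
    y = conv1x1(y, w3)
    y = batch_norm(y)
    # Assumption: the skip connection is added before the ReLU
    return np.maximum(y + x, 0.0)

def dual_residual(x, params_a, params_b):
    # The DR module: two residual blocks applied in sequence
    return residual_block(residual_block(x, params_a), params_b)

def init_block(rng, channels, mid):
    # Hypothetical initialization; `mid` is an assumed bottleneck width
    return (rng.normal(0, 0.1, (mid, channels)),
            rng.normal(0, 0.1, (mid, mid, 3, 3)),
            rng.normal(0, 0.1, (channels, mid)))

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16, 16))  # (channels, height, width)
out = dual_residual(x, init_block(rng, 8, 4), init_block(rng, 8, 4))
print(out.shape)  # (8, 16, 16)
```

Because each block preserves the channel count and spatial size, a module of this shape could sit at a downsampling position of an encoder without changing the surrounding tensor dimensions.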
