Method and device for pruning neural network model

A neural network model pruning technology, applied in the field of deep learning, that addresses problems such as existing pruning schemes being difficult to adopt widely.

Examples

Embodiment 1

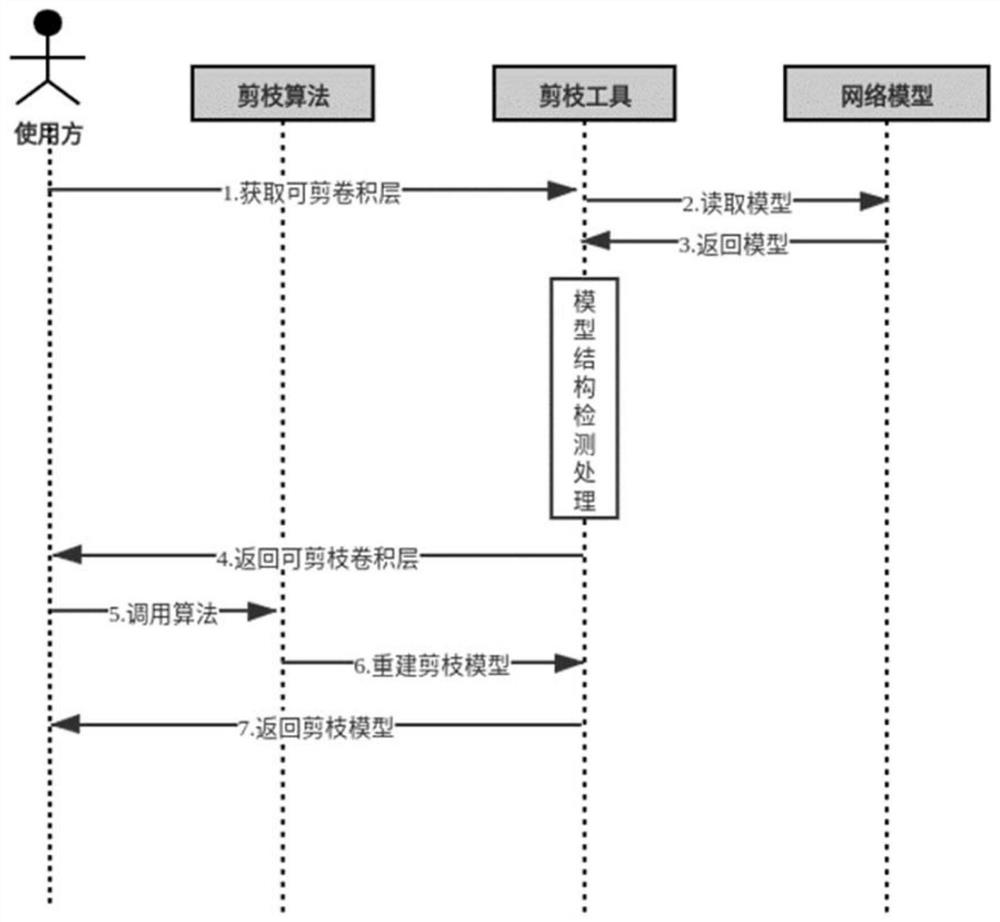

[0031] Figure 1 is a flowchart of an embodiment of a method for pruning a neural network model provided in Embodiment 1 of the present application. In general, neural network pruning comprises four stages: 1. find the channels that can be pruned; 2. compute the pruning result with the upper-layer pruning algorithm; 3. obtain the pruned model; 4. fine-tune or retrain the pruned model. This embodiment optimizes stage 1 ("find channels that can be pruned") and stage 3 ("obtain the pruned model"), closing the gap between pruning algorithms and their engineering implementation and enabling fully automatic pruning.
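The four stages above can be sketched end to end on a toy two-layer network. This is a minimal sketch, not the application's method: weights are plain nested lists, `find_prunable_channels`, `l1_norm_pruning`, and `prune_model` are hypothetical names, the stage 1 rule (every layer except the last is prunable) is a placeholder, and ranking filters by L1 norm stands in for whatever upper-layer pruning algorithm is actually used. Stage 4 is only marked by a comment.

```python
from typing import Dict, List

# Toy model: layer name -> list of filters (output channels); each filter
# holds one weight per input channel. Layers are assumed to form a simple chain.
Model = Dict[str, List[List[float]]]


def find_prunable_channels(model: Model) -> Dict[str, int]:
    """Stage 1 (placeholder rule): every layer except the last may be pruned."""
    names = list(model)
    return {name: len(model[name]) for name in names[:-1]}


def l1_norm_pruning(model: Model, prunable: Dict[str, int], ratio: float) -> Dict[str, List[int]]:
    """Stage 2 (stand-in algorithm): keep the filters with the largest L1 norm."""
    result = {}
    for name, n_out in prunable.items():
        n_keep = max(1, round(n_out * (1 - ratio)))
        norms = [sum(abs(w) for w in f) for f in model[name]]
        ranked = sorted(range(n_out), key=lambda i: norms[i], reverse=True)
        result[name] = sorted(ranked[:n_keep])
    return result


def prune_model(model: Model, keep: Dict[str, List[int]]) -> Model:
    """Stage 3: drop pruned filters and the matching input weights of the next layer."""
    names = list(model)
    pruned = {n: [list(f) for f in model[n]] for n in names}
    for idx, name in enumerate(names):
        if name not in keep:
            continue
        pruned[name] = [pruned[name][i] for i in keep[name]]
        if idx + 1 < len(names):  # chain assumption: layer idx feeds layer idx + 1
            nxt = names[idx + 1]
            pruned[nxt] = [[f[i] for i in keep[name]] for f in pruned[nxt]]
    return pruned


model = {
    "conv1": [[1.0, 1.0], [0.1, 0.0], [2.0, -2.0], [0.0, 0.2]],  # 4 filters, 2 inputs
    "fc": [[1.0, 2.0, 3.0, 4.0]],                                 # 1 output, 4 inputs
}
prunable = find_prunable_channels(model)            # {'conv1': 4}
keep = l1_norm_pruning(model, prunable, ratio=0.5)  # {'conv1': [0, 2]}
small = prune_model(model, keep)
# Stage 4: fine-tune or retrain `small` (omitted).
print(small)  # {'conv1': [[1.0, 1.0], [2.0, -2.0]], 'fc': [[1.0, 3.0]]}
```

Removing a filter in one layer forces the next layer to drop the matching input weights; automating that bookkeeping is what stage 3 is about.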

[0032] The method for pruning a neural network model in the embodiment of the present application may be performed by a pruning tool or a pruning device, and may specifically include the following steps:

[0033] Step 110, acquire model structure data of the target neural network model, where the model structure dat...

Embodiment 2

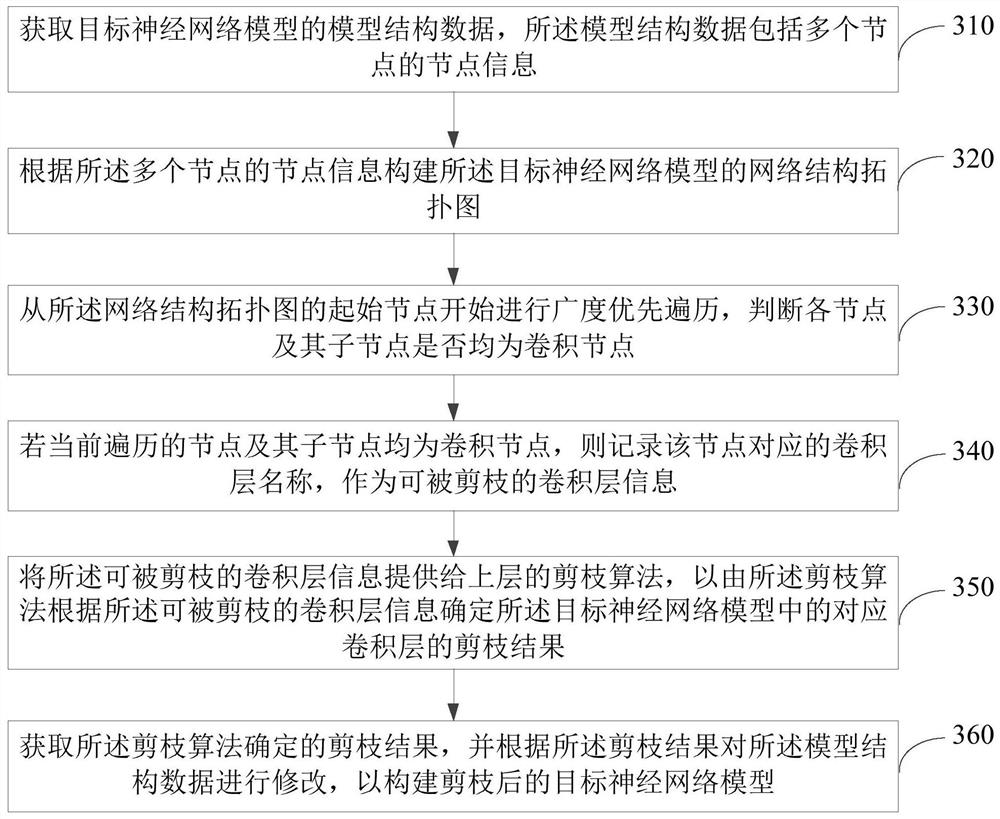

[0059] Figure 3 is a flowchart of another embodiment of a method for pruning a neural network model provided in Embodiment 2 of the present application.

[0060] Step 310, acquire model structure data of the target neural network model, where the model structure data includes node information of multiple nodes.

[0061] As an example, the model structure data of the target neural network model may include node information of multiple nodes. The node information may include, but is not limited to, the name of the convolutional layer where the node is located, operation attribute information, parent node index information, and channel data.
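Parent node index information is enough to decide which convolutions can be pruned, because inverting the parent links yields each node's consumers. The sketch below shows one possible rule; the excerpt does not state the application's actual criteria. A convolution is treated as prunable when every path from its output passes only through per-channel operators (`BatchNorm`, `Activation`, `Pooling`) before reaching another `Convolution` or `FullyConnected` node, which can absorb the removal by dropping input channels. Paths that reach an element-wise sum (as in residual blocks), a concatenation, or a graph output make the layer non-prunable. The operator names follow MXNet conventions; the graph encoding and `find_prunable_convs` are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical graph encoding: node name -> (operator type, parent node names).
Graph = Dict[str, Tuple[str, List[str]]]

PASS_THROUGH = {"BatchNorm", "Activation", "Pooling"}  # per-channel operators
ABSORBERS = {"Convolution", "FullyConnected"}          # can drop input channels


def children_of(graph: Graph) -> Dict[str, List[str]]:
    """Invert parent links into child lists."""
    kids: Dict[str, List[str]] = {name: [] for name in graph}
    for name, (_, parents) in graph.items():
        for p in parents:
            kids[p].append(name)
    return kids


def find_prunable_convs(graph: Graph, outputs: Set[str]) -> List[str]:
    kids = children_of(graph)

    def absorbed(node: str) -> bool:
        # True if every downstream path from `node` ends at an absorbing layer.
        if node in outputs or not kids[node]:
            return False
        for child in kids[node]:
            op = graph[child][0]
            if op in ABSORBERS:
                continue
            if op in PASS_THROUGH and absorbed(child):
                continue
            return False  # e.g. elemwise_add, Concat, or an unknown operator
        return True

    return [n for n, (op, _) in graph.items() if op == "Convolution" and absorbed(n)]


# A residual block: conv2 feeds an element-wise add, so only conv1 is prunable.
graph: Graph = {
    "data": ("null", []),
    "conv1": ("Convolution", ["data"]),
    "bn1": ("BatchNorm", ["conv1"]),
    "relu1": ("Activation", ["bn1"]),
    "conv2": ("Convolution", ["relu1"]),
    "add": ("elemwise_add", ["conv2", "data"]),
    "fc": ("FullyConnected", ["add"]),
}
print(find_prunable_convs(graph, outputs={"fc"}))  # ['conv1']
```

Pass-through layers such as `BatchNorm` still need their per-channel parameters sliced when the pruned model is actually built.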

[0062] In one embodiment, the front-end framework of the target neural network model may be MXNet, the model structure data may be the JSON data describing the model structure, and MXNet's model structure function may be called to obtain the JSON of the model structure of the target neural network...
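To make this step concrete, the sketch below parses a hand-written graph laid out like MXNet's symbol JSON (for example, the string returned by `Symbol.tojson()`: a `nodes` list whose entries carry `op`, `name`, `attrs`, and `inputs` given as `[node_index, output_index, version]` triples) into per-node records. The `NodeInfo` fields mirror the node information listed in [0061], but the class, the sample graph, and reading the channel count from the `num_filter` attribute are illustrative assumptions; field names in exported files can differ across MXNet versions.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class NodeInfo:
    index: int
    name: str              # e.g. the name of the convolutional layer
    op: str                # operation type; "null" marks inputs and weights
    attrs: Dict[str, str]  # operation attribute information
    parents: List[int] = field(default_factory=list)  # parent node indices
    channels: Optional[int] = None  # output channels, if known


def parse_symbol_json(text: str) -> List[NodeInfo]:
    graph = json.loads(text)
    nodes = []
    for i, raw in enumerate(graph["nodes"]):
        attrs = raw.get("attrs", {})
        channels = int(attrs["num_filter"]) if "num_filter" in attrs else None
        parents = [inp[0] for inp in raw.get("inputs", [])]  # first entry = node index
        nodes.append(NodeInfo(i, raw["name"], raw["op"], attrs, parents, channels))
    return nodes


# Hand-written sample in an MXNet-like layout (not exported from a real model).
SAMPLE = json.dumps({
    "nodes": [
        {"op": "null", "name": "data", "inputs": []},
        {"op": "null", "name": "conv0_weight", "inputs": []},
        {"op": "Convolution", "name": "conv0",
         "attrs": {"kernel": "(3, 3)", "num_filter": "16"},
         "inputs": [[0, 0, 0], [1, 0, 0]]},
        {"op": "Activation", "name": "relu0",
         "attrs": {"act_type": "relu"}, "inputs": [[2, 0, 0]]},
    ],
    "arg_nodes": [0, 1],
    "heads": [[3, 0, 0]],
})

for node in parse_symbol_json(SAMPLE):
    if node.op != "null":
        print(node.name, node.op, node.parents, node.channels)
# conv0 Convolution [0, 1] 16
# relu0 Activation [2] None
```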

Embodiment 3

[0092] Figure 4 is a structural block diagram of an embodiment of a device for pruning a neural network model provided in Embodiment 3 of the present application. The device, which may also be called a pruning device, may include:

[0093] A model structure data acquisition module 410, configured to acquire model structure data of the target neural network model, the model structure data including node information of multiple nodes;

[0094] A prunable convolutional layer information determination module 420, configured to determine the prunable convolutional layer information in the target neural network model according to the node information of the plurality of nodes;

[0095] A prunable convolutional layer information sending module 430, configured to provide the prunable convolutional layer information to the upper-layer pruning algorithm, so that the pruning algorithm can determine the pruning result according to the prunable convolutional layer information...
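The split between modules 420 and 430 and the upper-layer algorithm (the tool reports prunable layers, the algorithm only returns a decision) might be expressed as a narrow interface like the one below. Everything in it is hypothetical: `PrunableLayer`, `PruningAlgorithm`, `KeepFirstFraction`, and `send_prunable_info` are illustrative names, and the toy algorithm keeps the first fraction of channels instead of ranking them. The algorithm never touches the graph; the tool checks that the returned pruning result only refers to layers and channels it reported.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass(frozen=True)
class PrunableLayer:
    name: str
    channels: int  # number of output channels that may be pruned


class PruningAlgorithm(Protocol):
    def decide(self, layers: List[PrunableLayer]) -> Dict[str, List[int]]:
        """Return, per layer name, the indices of the channels to keep."""
        ...


class KeepFirstFraction:
    """Toy upper-layer algorithm: keeps the first `ratio` of each layer's channels."""

    def __init__(self, ratio: float) -> None:
        self.ratio = ratio

    def decide(self, layers: List[PrunableLayer]) -> Dict[str, List[int]]:
        return {
            layer.name: list(range(max(1, int(layer.channels * self.ratio))))
            for layer in layers
        }


def send_prunable_info(layers: List[PrunableLayer], algo: PruningAlgorithm) -> Dict[str, List[int]]:
    """Provide prunable layer info to the algorithm and validate its pruning result."""
    reported = {layer.name: layer.channels for layer in layers}
    result = algo.decide(layers)
    for name, keep in result.items():
        if name not in reported:
            raise ValueError(f"algorithm returned unknown layer {name!r}")
        if not keep or any(not 0 <= i < reported[name] for i in keep):
            raise ValueError(f"invalid channel indices for layer {name!r}")
    return result


layers = [PrunableLayer("conv1", 16), PrunableLayer("conv3", 8)]
print(send_prunable_info(layers, KeepFirstFraction(0.25)))
# {'conv1': [0, 1, 2, 3], 'conv3': [0, 1]}
```

Because the contract is only "layer names and channel counts in, kept indices out", a different pruning algorithm can be swapped in without changing the graph-handling code.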
