Pruning method applied to target detection and terminal

Target detection algorithm and target detection technology, applied in the field of computer vision model compression. The technology addresses problems such as loss of precision and achieves the effects of reducing precision loss, consuming less time, and simplifying the pruning process.

Examples

Embodiment 1

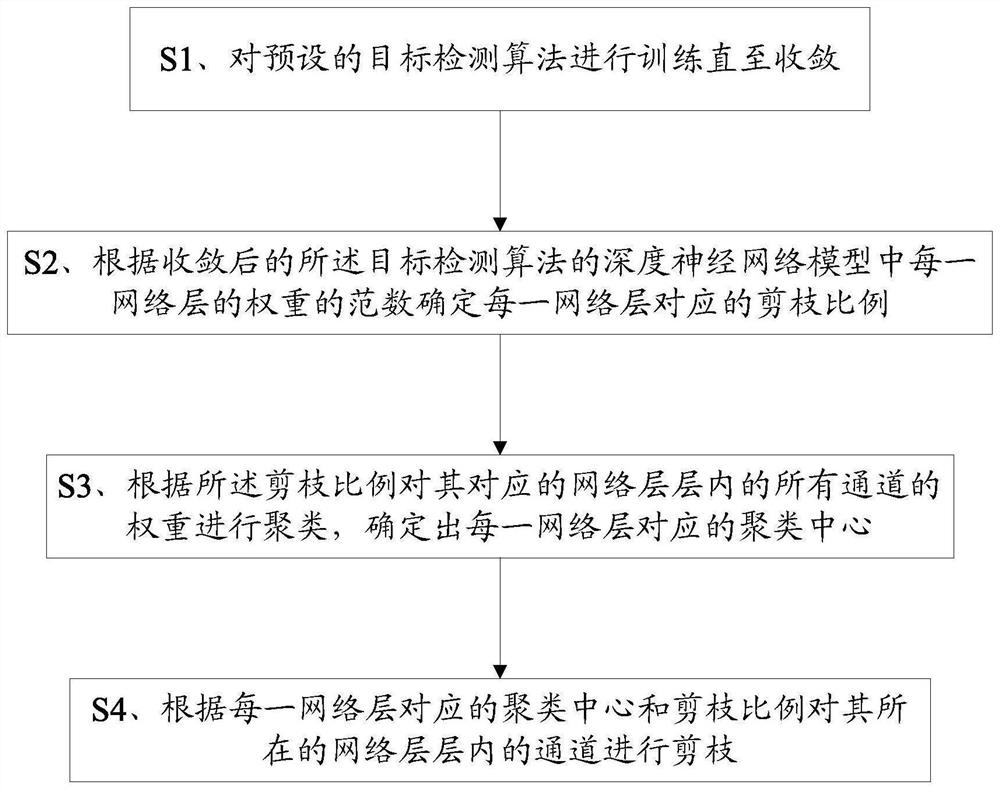

[0074] Referring to Figure 1, a pruning method applied to target detection includes the following steps:

[0075] S1. Train the preset target detection algorithm until it converges;

[0076] The preset target detection algorithm includes, but is not limited to, currently popular deep-learning-based target detection algorithms such as Yolov3, SSD, Faster R-CNN, and RetinaNet;

[0077] Specifically, an existing dataset can be used to train the preset target detection algorithm to convergence, after which the model is evaluated with the PASCAL VOC mAP (mean average precision) metric to obtain objective evaluation data;
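The PASCAL VOC evaluation in S1 reduces to a per-class average precision (AP), averaged over classes to give mAP. The sketch below assumes the all-point interpolated AP used from VOC2010 onward (the source does not say whether the 11-point VOC2007 variant was used) and assumes the recall/precision arrays have already been produced by matching detections to ground truth at IoU ≥ 0.5. `voc_ap` and `mean_ap` are illustrative names, not part of any official devkit.

```python
import numpy as np


def voc_ap(recall, precision):
    """All-point interpolated average precision for one class.

    recall, precision: 1-D sequences ordered by descending detection
    confidence, so recall is non-decreasing.
    """
    # Add sentinel points at both ends of the precision/recall curve.
    mrec = np.concatenate(([0.0], np.asarray(recall, dtype=float), [1.0]))
    mpre = np.concatenate(([0.0], np.asarray(precision, dtype=float), [0.0]))
    # Replace each precision with the maximum precision to its right
    # (the precision envelope), making the curve non-increasing.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas at the points where recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_ap(per_class_ap):
    """mAP: the unweighted mean of per-class AP values."""
    return float(np.mean(per_class_ap))
```

For example, `voc_ap([0.5, 1.0], [1.0, 0.5])` gives 0.75, and a detector that reaches full recall at full precision gives 1.0.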

[0078] S2. Determine the pruning ratio of each network layer according to the sum of the weight norms of that layer in the deep neural network model of the converged target detection algorithm;
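Step S2 starts from a per-layer sum of weight norms. A minimal sketch follows, assuming each convolutional layer's weights are stored as an array shaped (out_channels, in_channels, kh, kw) and that the norm is taken over each output filter. The source does not state the norm order, so the L1 default here is an assumption and `p` is exposed as a parameter; `layer_norm_sums` is a hypothetical helper.

```python
import numpy as np


def layer_norm_sums(conv_weights, p=1):
    """Sum of per-filter L_p norms for each convolutional layer.

    conv_weights: list of arrays shaped (out_channels, in_channels, kh, kw).
    Returns one float per layer.
    """
    sums = []
    for w in conv_weights:
        # Flatten each output filter into a row, then take its L_p norm.
        filter_norms = np.linalg.norm(w.reshape(w.shape[0], -1), ord=p, axis=1)
        sums.append(float(filter_norms.sum()))
    return sums
```

Computing norms per output filter keeps the result directly usable for channel pruning, since each filter corresponds to one output channel.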

[0079] Determine the weight norm mean value corresponding to each network laye...
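The rule that turns the weight norm means into pruning ratios is truncated in the source. Purely as an illustration, the hypothetical mapping below takes a layer's mean norm as its norm sum divided by its filter count, prunes layers with smaller means more heavily, and rescales so the ratios average to a target value. `layer_pruning_ratios`, `target_ratio`, and `max_ratio` are assumptions, not the patent's formula.

```python
import numpy as np


def layer_pruning_ratios(norm_sums, filter_counts, target_ratio=0.2, max_ratio=0.9):
    """HYPOTHETICAL mapping from per-layer weight-norm means to pruning ratios.

    Assumes every mean is positive. Ratios are scaled so their average equals
    target_ratio before clipping; clipping to max_ratio can lower that average.
    """
    # Mean norm per filter in each layer (assumed definition of the "mean value").
    means = np.asarray(norm_sums, dtype=float) / np.asarray(filter_counts, dtype=float)
    # Inverse mean: layers with weaker filters receive larger scores.
    scores = 1.0 / means
    ratios = target_ratio * scores / scores.mean()
    return np.clip(ratios, 0.0, max_ratio), means
```

With norm sums `[6.0, 36.0]` over `[2, 4]` filters, the means are 3 and 9, and the ratios come out as 0.3 and 0.1, averaging the 0.2 target.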

Embodiment 2

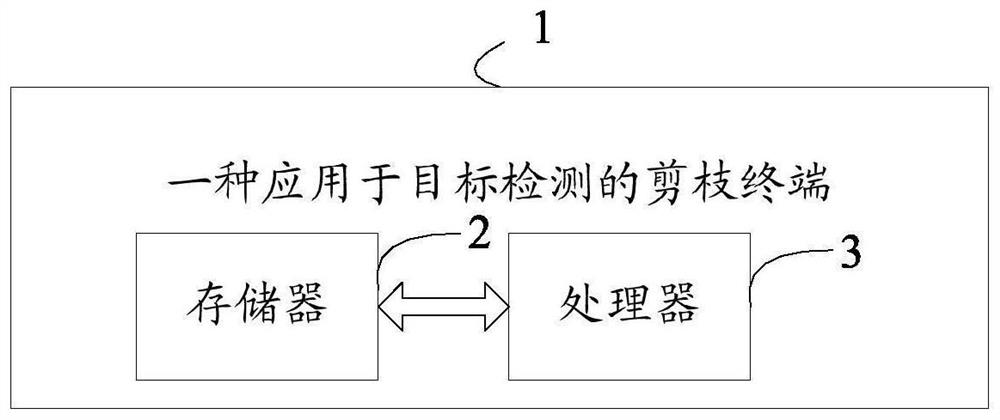

[0118] Referring to Figure 2, a pruning terminal 1 applied to target detection comprises a memory 2, a processor 3, and a computer program stored in the memory 2 and executable on the processor 3. When executing the computer program, the processor 3 implements each step of Embodiment 1.

Embodiment 3

[0120] The above pruning method applied to target detection is tested as follows:

[0121] Because different algorithms compress with different strengths, this embodiment compares the loss of precision after compression at the same compression strength. To this end, it reproduces the second and third methods described in the background art to prune Yolov3 (a single pruning pass, without iterative pruning), applying a uniform compression ratio of 0.2 to every layer of darknet53, the backbone network of the Yolov3 algorithm; that is, each layer retains 0.8 times its original channels. The original and pruned channel counts are then compared layer by layer in darknet53 order, as shown in Table 1. Only the channels of convolutional layers are compared. Because layers joined by a shortcut must have the same dimension to be added, the channels of shortcut-connected layers are not pruned, which reduces complexity:
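The uniform-compression baseline can be sketched as follows. The code lists darknet53's 52 convolutional layers in order and keeps 0.8 times the channels of every layer whose output does not enter a shortcut addition (assumed here to be the stem conv and the 1x1 conv of each residual block); layers feeding a shortcut keep their width. The truncating rounding rule and the helper names `darknet53_conv_channels` and `uniform_prune` are assumptions, and the printed output is not a reproduction of Table 1.

```python
def darknet53_conv_channels():
    """(out_channels, prunable) for darknet53's 52 conv layers, in order."""
    layers = [(32, True)]  # stem 3x3 conv; its output does not enter a shortcut
    # (stage width, number of residual blocks) for the five stages
    for width, blocks in [(64, 1), (128, 2), (256, 8), (512, 8), (1024, 4)]:
        layers.append((width, False))          # stride-2 conv; output feeds a shortcut
        for _ in range(blocks):
            layers.append((width // 2, True))  # 1x1 reduce conv; output goes to the 3x3 conv only
            layers.append((width, False))      # 3x3 conv; output is added to the shortcut
    return layers


def uniform_prune(layers, keep=0.8):
    """Return (index, original, pruned) channel counts under a uniform keep ratio."""
    rows = []
    for idx, (channels, prunable) in enumerate(layers):
        # Truncate toward zero (assumed rounding rule), keeping at least one channel.
        new_channels = max(1, int(channels * keep)) if prunable else channels
        rows.append((idx, channels, new_channels))
    return rows


if __name__ == "__main__":
    for idx, original, pruned in uniform_prune(darknet53_conv_channels()):
        print(f"conv {idx:2d}: {original:4d} -> {pruned:4d}")
```

Leaving shortcut-connected layers at full width avoids having to prune every layer in a residual chain with one shared channel mask.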

[...
