Neural network inference method based on software and hardware cooperative acceleration

A technology combining software-hardware collaboration with neural networks, applied in the computer field. It addresses the lack of collaborative software-hardware acceleration in existing systems and improves resource utilization and computing performance.

Embodiment Construction

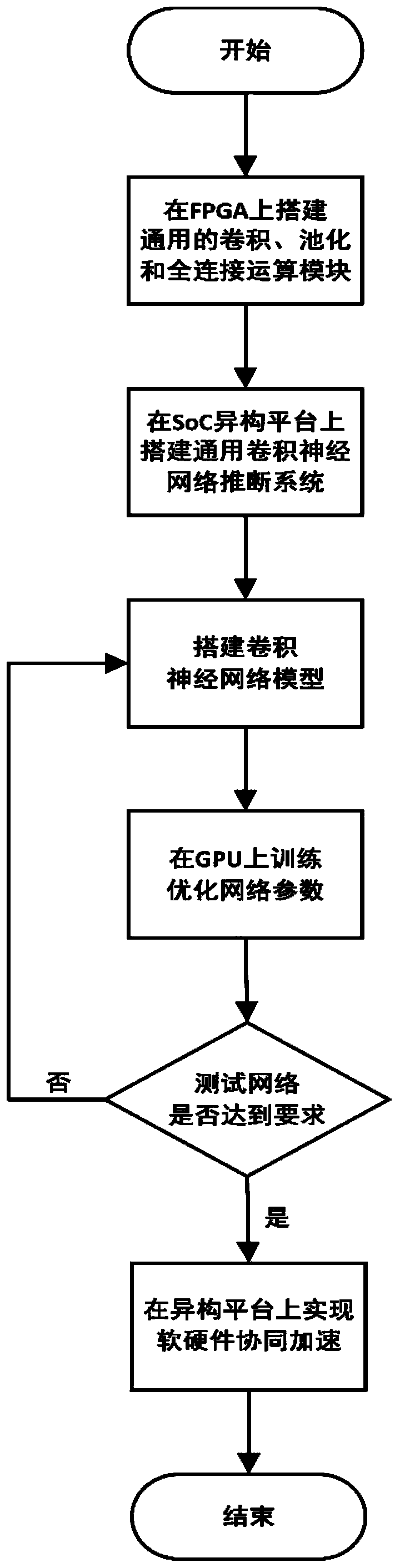

[0026] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

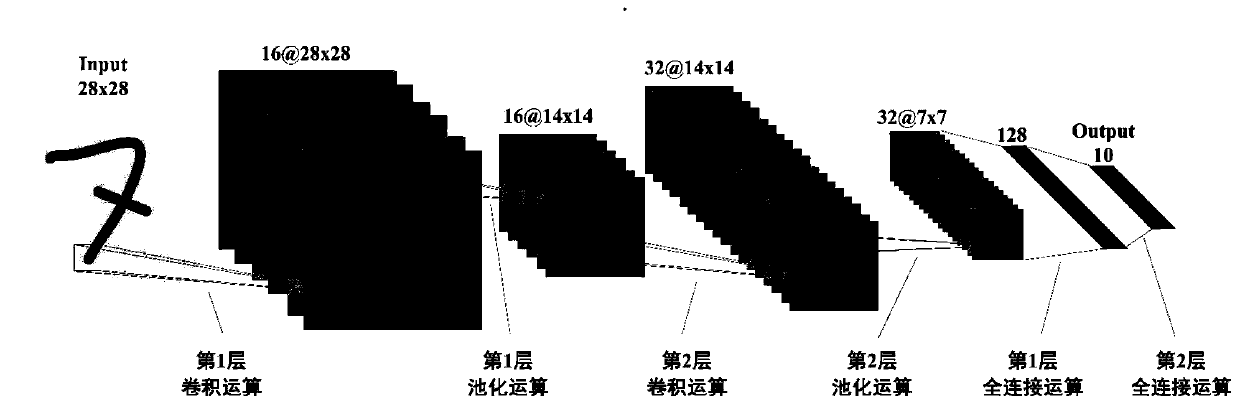

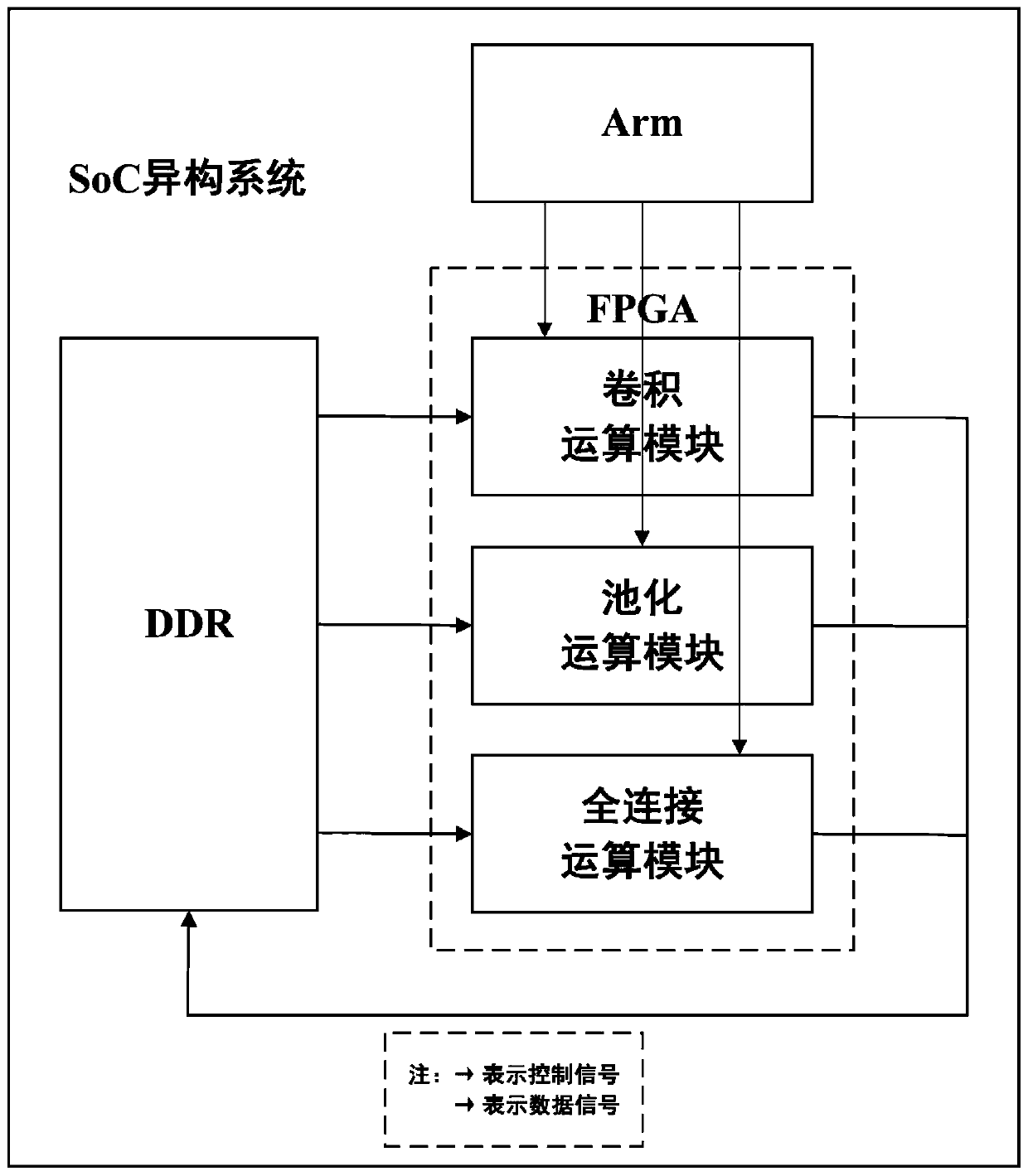

[0027] The neural network inference method proposed in the present invention is suitable for convolutional neural networks, and for other neural networks that likewise have alternately connected convolutional and pooling layers followed by pipelined fully connected layers. The neural network includes an input layer, N convolutional layers, N pooling layers, K fully connected layers, and an output layer, where N and K are both positive integers and N≥K. The input signal of the i-th pooling layer is the output signal of the i-th convolutional layer, and its output signal is the input signal of the (i+1)-th convolutional layer; the input signal of the first convolutional layer is the output signal of the input layer, i∈[1,N]. The input signal of the j-th fully connected layer is the output signal of the (j-1)-th fully connected layer, j∈[2,K]; the in...
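The layer connectivity described in [0027] can be illustrated with a minimal sketch that builds the data-flow order of the layers and answers "which layer produces this layer's input signal". The function names `build_layer_chain` and `producer_of` are hypothetical. Because the paragraph is truncated, the sketch also *assumes* that the N-th pooling layer feeds the first fully connected layer and that the K-th fully connected layer feeds the output layer; the source does not confirm either link.

```python
from typing import List


def build_layer_chain(n: int, k: int) -> List[str]:
    """Return layer names in data-flow order for a network with N
    alternating convolution/pooling pairs and K fully connected layers."""
    if n < 1 or k < 1:
        raise ValueError("N and K must be positive integers")
    if n < k:
        raise ValueError("the method requires N >= K")
    chain = ["input"]
    for i in range(1, n + 1):
        # conv_i consumes the input layer (i = 1) or pool_{i-1} (i > 1)
        chain.append(f"conv{i}")
        # pool_i consumes the output of conv_i
        chain.append(f"pool{i}")
    # ASSUMPTION: pool_N feeds fc1 (the source text is truncated here)
    for j in range(1, k + 1):
        # fc_j consumes fc_{j-1} for j >= 2
        chain.append(f"fc{j}")
    # ASSUMPTION: fc_K feeds the output layer
    chain.append("output")
    return chain


def producer_of(chain: List[str], layer: str) -> str:
    """Return the layer whose output signal is the input signal of `layer`."""
    idx = chain.index(layer)
    if idx == 0:
        raise ValueError("the input layer has no producer")
    return chain[idx - 1]


if __name__ == "__main__":
    chain = build_layer_chain(3, 2)
    print(" -> ".join(chain))
    print(producer_of(chain, "conv2"))
```

With N = 3 and K = 2 this prints the chain `input -> conv1 -> pool1 -> conv2 -> pool2 -> conv3 -> pool3 -> fc1 -> fc2 -> output`, and `producer_of(chain, "conv2")` returns `pool1`, matching the rule that the i-th pooling layer feeds the (i+1)-th convolutional layer.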
