Data processing method and device based on hybrid memory

A data processing method and device based on hybrid memory, applied in the computer field. The technology addresses problems such as low data processing efficiency, and it improves both processing efficiency and storage capacity.

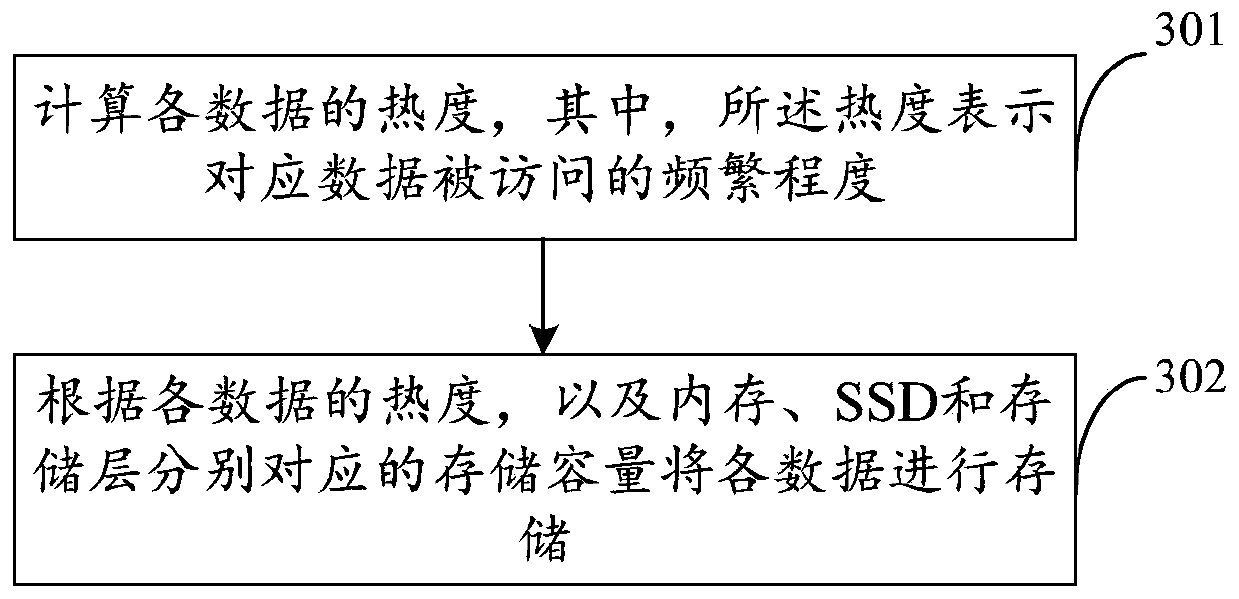

Detailed Description of the Embodiments

[0029] The technical solutions in the embodiments of the present application will be described below with reference to the drawings in the embodiments of the present application.

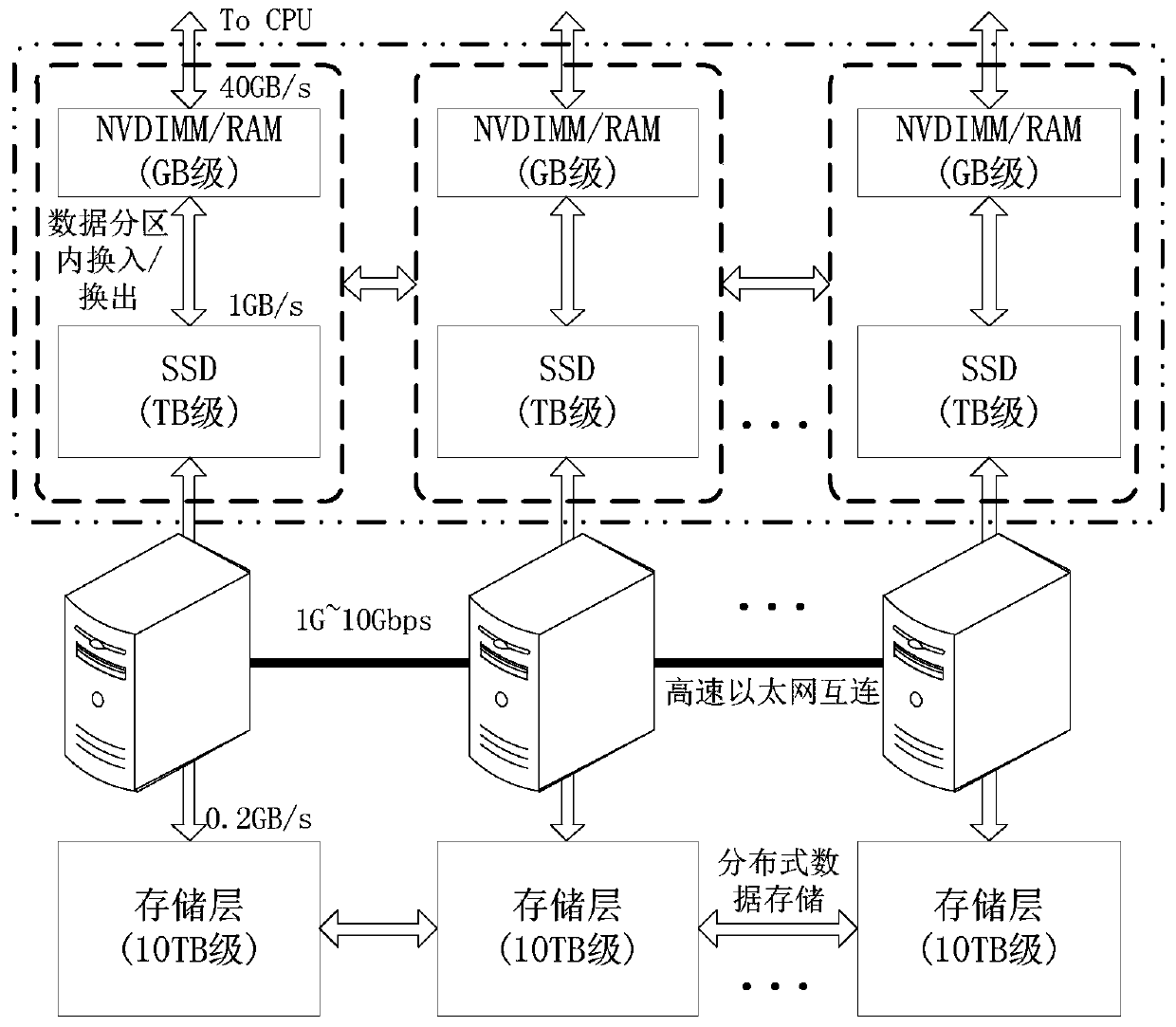

[0030] Because single-node in-memory computing is limited by the hardware resources of one machine, it runs into hardware scalability problems when processing larger-scale data. With the rapid development of large-scale distributed data processing technologies, represented by MapReduce, in-memory computing has also been implemented on distributed systems. This kind of in-memory computing uses a cluster of multiple computers to build a large distributed memory. Through unified resource scheduling, the data to be processed is stored in the distributed memory so that large-scale data can be accessed and processed quickly.
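
The idea of pooling the memory of several machines behind one scheduler can be illustrated with a toy, single-process model. This is only a sketch under stated assumptions, not the method of this application: the names `MemoryNode` and `DistributedMemory`, the item-count capacity model, and the hash-then-probe placement rule are all hypothetical and chosen for illustration.

```python
import hashlib
from typing import Any, Dict, List


class MemoryNode:
    """One machine's memory, modeled as a capacity-limited dict (hypothetical)."""

    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self.capacity = capacity          # maximum number of items on this node
        self.items: Dict[str, Any] = {}

    def has_room(self) -> bool:
        return len(self.items) < self.capacity


class DistributedMemory:
    """Presents several MemoryNode objects as a single key-value memory."""

    def __init__(self, nodes: List[MemoryNode]) -> None:
        self.nodes = nodes
        self.placement: Dict[str, MemoryNode] = {}   # key -> node that holds it

    def _preferred_index(self, key: str) -> int:
        # Stable hash, so the same key always prefers the same node.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.nodes)

    def put(self, key: str, value: Any) -> str:
        """Store a value and return the name of the node that holds it."""
        node = self.placement.get(key)
        if node is None:
            # Assumed scheduling rule: start at the hashed node and probe
            # the following nodes until one has free capacity.
            start = self._preferred_index(key)
            for offset in range(len(self.nodes)):
                candidate = self.nodes[(start + offset) % len(self.nodes)]
                if candidate.has_room():
                    node = candidate
                    break
            else:
                raise MemoryError("all nodes are full")
            self.placement[key] = node
        node.items[key] = value
        return node.name

    def get(self, key: str) -> Any:
        return self.placement[key].items[key]

    def total_capacity(self) -> int:
        return sum(n.capacity for n in self.nodes)
```

A caller sees one memory whose capacity is the sum of the node capacities. For example, three nodes of capacity 2 accept six distinct keys, and a seventh `put` raises `MemoryError`. A real cluster would add networking, replication, and failure handling, none of which is modeled here.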

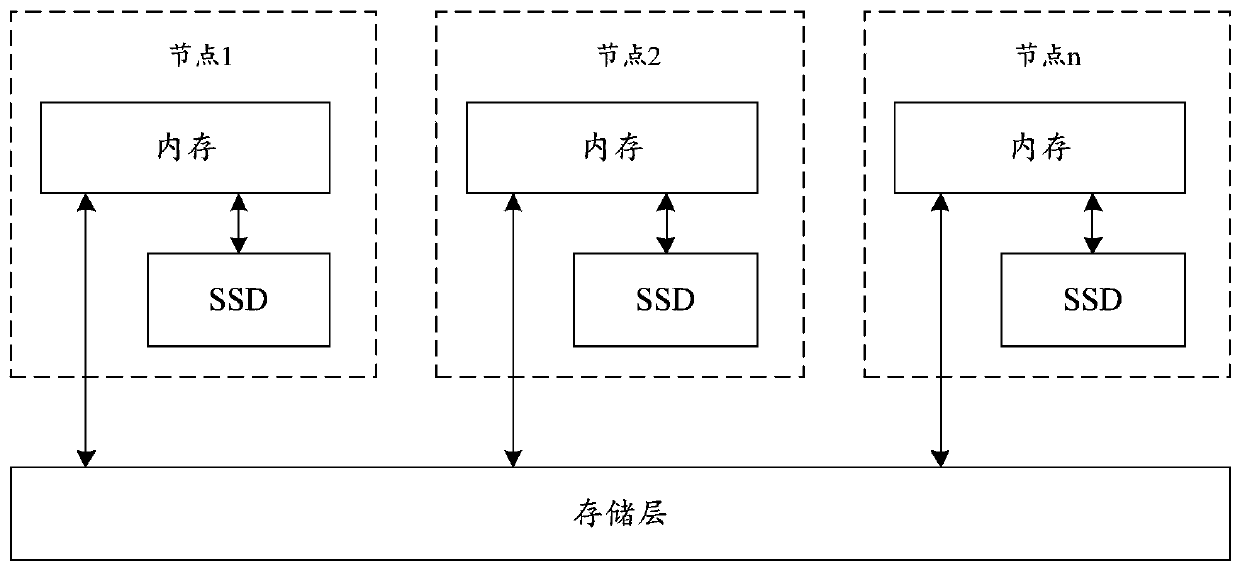

[0031] Figure 1 is a schematic structural diagram of a distributed storage system provided by an embodiment of this application. As shown in Figure 1, the system includes ...
