Augmented reality navigation method based on indoor natural scene image deep learning

The invention concerns natural scene image technology, applied in the field of augmented reality navigation based on deep learning of indoor natural scene images. It addresses three problems: markers such as QR codes are easily damaged by people, augmented reality navigation is difficult to popularize, and positioning accuracy is low. The stated effects are improved planning efficiency, being difficult to find, and a strong sense of realism.

Embodiment Construction

[0082] Specific embodiments of the present invention are described in detail below with reference to the accompanying drawings.

[0083] In this embodiment, a smartphone with an eight-core processor and 6 GB of memory is used. The camera resolution is 1920×1080, and its intrinsic parameters are calibrated in advance and assumed to remain unchanged. The feature points of the indoor natural scene that appear in the phone camera's view are identified, tracked, and registered.
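To illustrate why the intrinsic parameters are calibrated in advance, the following is a minimal sketch of the standard pinhole projection that registration commonly relies on: a 3D scene feature point expressed in the camera frame is mapped to a pixel in the 1920×1080 image. The patent text shown here does not give the calibration values or the projection model, so the `Intrinsics` class, the `project` function, and the numeric focal length and principal point are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole camera intrinsics (hypothetical container, not from the patent)."""
    fx: float      # focal length along x, in pixels
    fy: float      # focal length along y, in pixels
    cx: float      # principal point x, in pixels
    cy: float      # principal point y, in pixels
    width: int     # image width in pixels
    height: int    # image height in pixels


def project(point_cam: Tuple[float, float, float],
            k: Intrinsics) -> Optional[Tuple[float, float]]:
    """Project a 3D point in the camera frame (x right, y down, z forward)
    to pixel coordinates. Returns None if the point is behind the camera
    or falls outside the image bounds."""
    x, y, z = point_cam
    if z <= 0:
        return None  # point is not in front of the camera
    u = k.fx * x / z + k.cx
    v = k.fy * y / z + k.cy
    if 0 <= u < k.width and 0 <= v < k.height:
        return (u, v)
    return None


# Assumed values: only the 1920x1080 resolution comes from the text;
# the focal lengths and principal point are placeholders.
K = Intrinsics(fx=1500.0, fy=1500.0, cx=960.0, cy=540.0,
               width=1920, height=1080)

if __name__ == "__main__":
    # A feature point 2 m straight ahead lands on the principal point.
    print(project((0.0, 0.0, 2.0), K))
    # A point 1 m to the right at 2 m depth shifts toward the right edge.
    print(project((1.0, 0.0, 2.0), K))
```

Because the intrinsics are treated as fixed, only the camera pose would need to be estimated per frame when overlaying navigation content on tracked feature points; that pose estimation step is outside the scope of this sketch.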

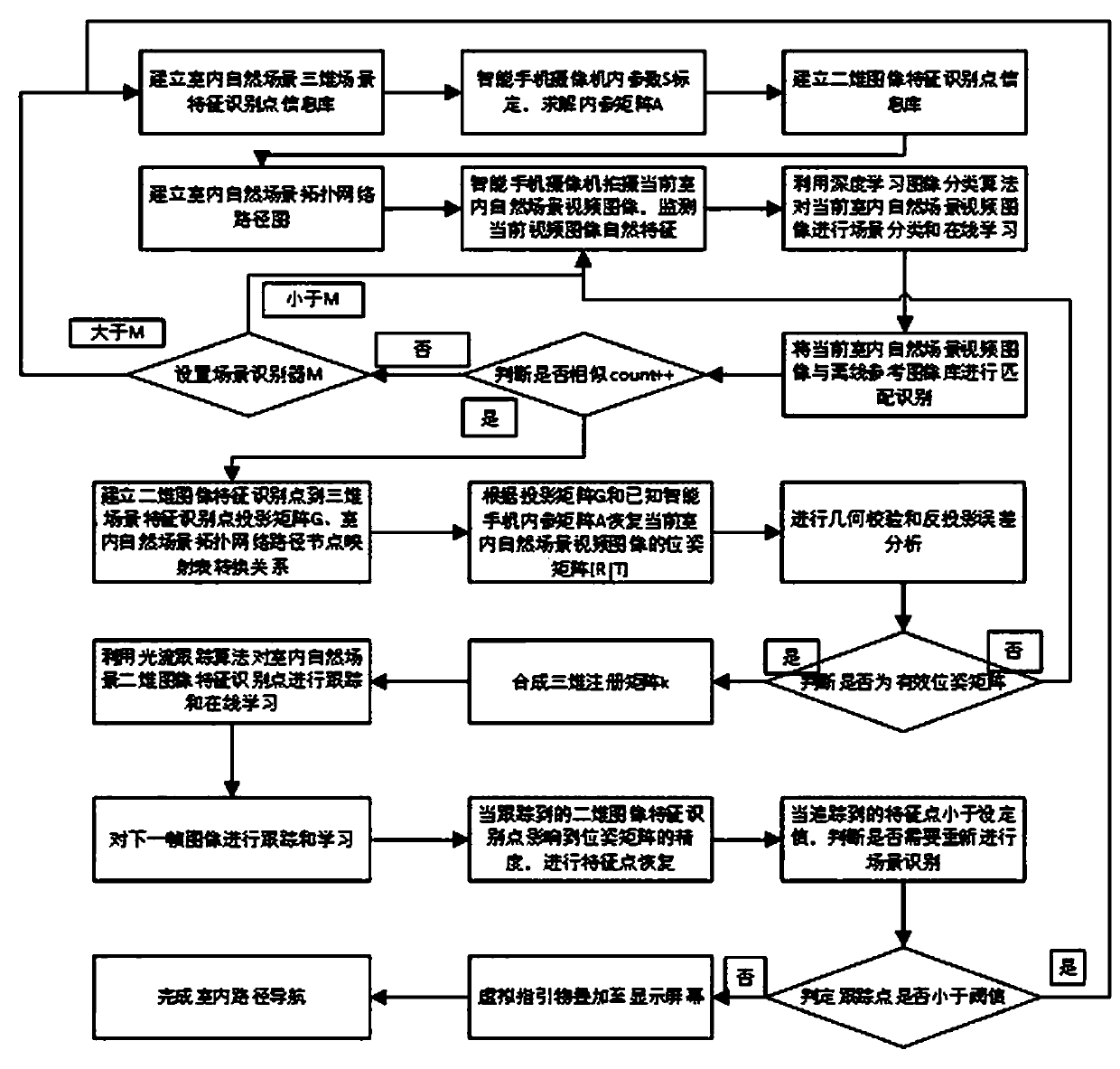

[0084] As shown in Figure 1, an augmented reality navigation method based on deep learning of indoor natural scene images includes the following steps:

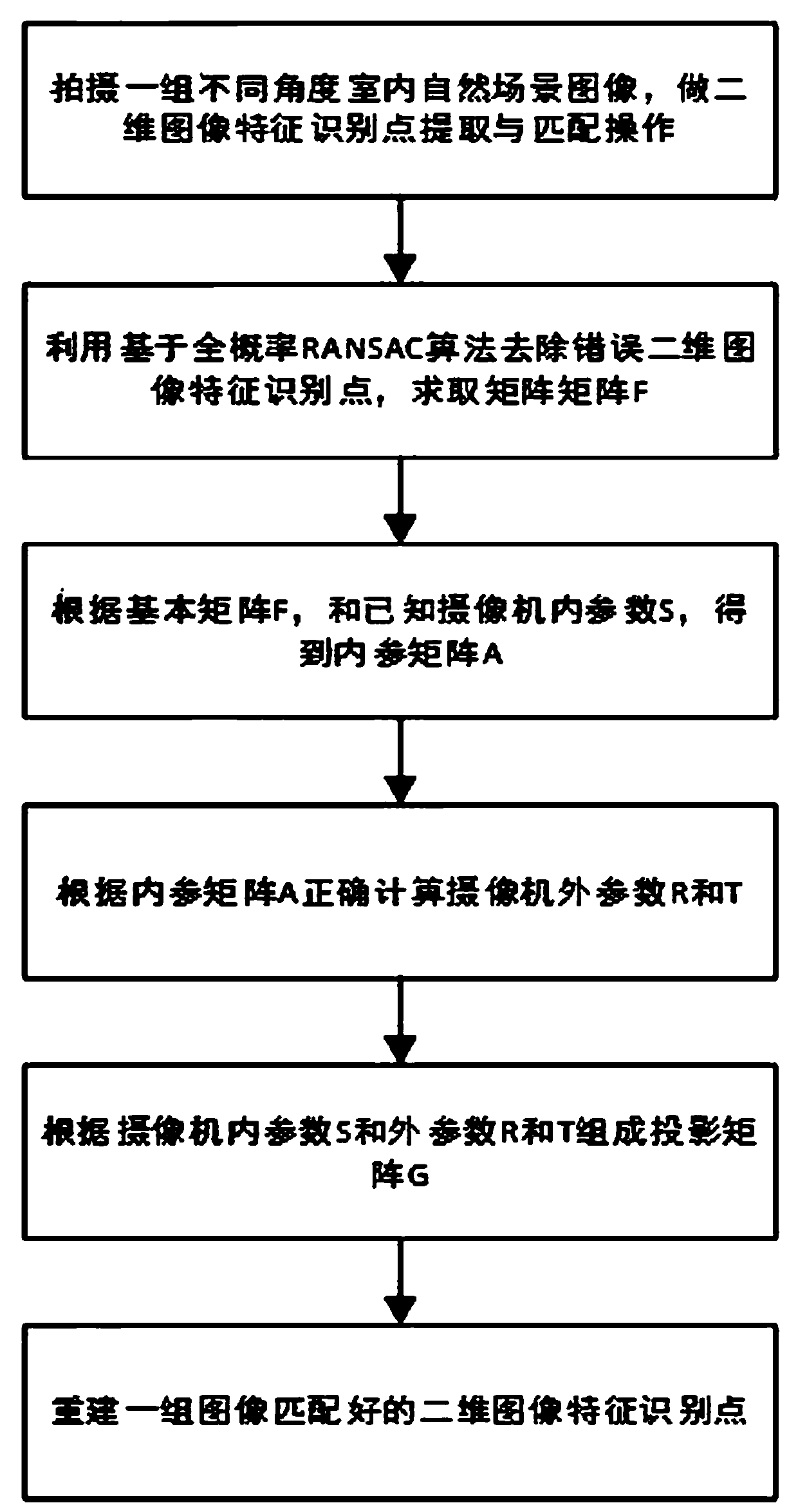

[0085] Step 1: Figure 2 is the flow chart for establishing the 3D scene feature recognition point information library of indoor natural scenes. As shown in Figure 2, following the basic principle of 3D reconstruction of indoor natural scenes based on 3D scene feature recognition points, a 3D laser scanner is used to scan indoor...
