Image multi-scale feature extraction method based on cellular neural network

A multi-scale feature extraction method based on neural network technology, applied in the field of image processing. It addresses the problems of high-dimensional extracted features, heavy consumption of computing resources, and complex network structure, and achieves low feature dimensionality, fast feature extraction, and strongly robust features.

Embodiment

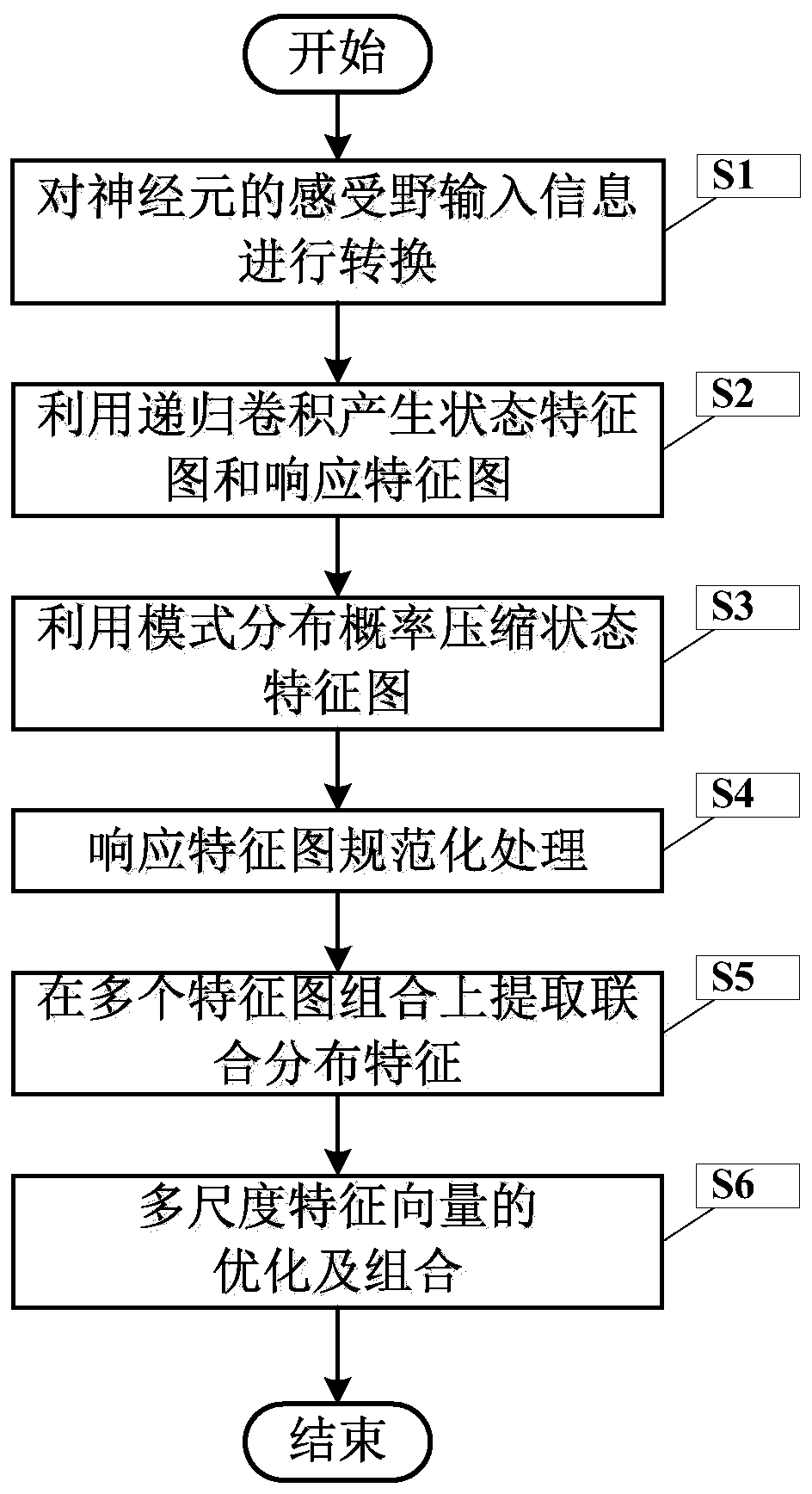

[0057] Figure 1 is a flow chart of the method for extracting multi-scale image features based on a cellular neural network according to the present invention.

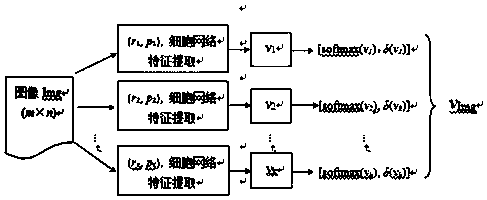

[0058] In this example, as shown in Figure 1, the image multi-scale feature extraction method based on a cellular neural network of the present invention comprises the following steps:

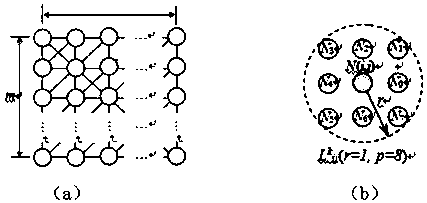

[0059] S1. Convert the input information of the neuron's receptive field

[0060] In this example, as shown in Figure 2(a), the cellular neural network is a two-dimensional network structure with m rows and n columns of evenly arranged neurons, and the neurons are locally connected. In image processing, the size of the network matches the size of the image to be processed, so neurons and pixels correspond one to one. In addition, Figure 2(b) shows a central neuron N(i,j) and its k-th neighborhood receptive field L_k, whose radius is r and whose total number of neighborhood neurons is p. The 8 neuro...

Specific example

[0103] The following takes the image sample "000000.ras" in the texture data set Outex_TC_00010 as an example to further describe the technical implementation process of the present invention in detail.

[0104] The Outex_TC_00010 data set has a total of 24 types of texture samples, and the illumination condition is inca. Each type of texture includes 9 different angles, and each angle includes 20 texture images, so the entire database contains 24 × 9 × 20 = 4320 texture images. The dimensions of each image are 128×128 pixels. In this example, the first 20 samples of each texture type, taken in ascending order, are used for training, and the remaining texture images are used to test the accuracy of texture recognition.
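The sample counts above can be checked with a short calculation. The following is a minimal sketch in Python. The constant names and the `split_class` helper are hypothetical, and the assumption that samples are ordered by ascending file name is an illustration rather than something the patent text states.

```python
# Bookkeeping for the Outex_TC_00010 split described in [0104].
# All names below are illustrative assumptions, not taken from the patent.
NUM_CLASSES = 24        # texture types
NUM_ANGLES = 9          # rotation angles per texture type
IMAGES_PER_ANGLE = 20   # images per angle
TRAIN_PER_CLASS = 20    # first 20 samples of each type are used for training

total_images = NUM_CLASSES * NUM_ANGLES * IMAGES_PER_ANGLE
train_images = NUM_CLASSES * TRAIN_PER_CLASS
test_images = total_images - train_images


def split_class(samples, n_train=TRAIN_PER_CLASS):
    """Split one class into (train, test), taking the first n_train
    samples in ascending order (assumed here to mean sorted by name)."""
    ordered = sorted(samples)
    return ordered[:n_train], ordered[n_train:]


print(total_images, train_images, test_images)  # 4320 480 3840
```

Under these assumptions each texture type contributes 20 training images and 160 test images.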

[0105] 1) Initialization of the algorithm: construct a cellular neural network with a neuron array of 128×128, and set the initial values C = R_x = 1, I = 0, X(t=0) = 0, and then set L_1(3,8), L_2(5,16) and L_3(7,24) respectively as the neighborhood sampling scales of t...
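The initialization values listed in this step can be written down as a small configuration. The following is a minimal sketch in Python with NumPy. The container names (`params`, `scales`, `init_state`) are hypothetical. Reading C, R_x and I as the cell capacitance, cell resistance and bias of the standard cellular neural network state equation is an assumption, since the visible text does not define them. The pairs (3,8), (5,16) and (7,24) are stored exactly as written, without interpreting their two components.

```python
import numpy as np

# Network size matches the 128x128 image, one neuron per pixel.
ROWS, COLS = 128, 128

# Scalar parameters from [0105]; their physical meaning is assumed, not stated.
params = {"C": 1.0, "R_x": 1.0, "I": 0.0}

# Neighborhood sampling scales L_1, L_2, L_3, kept as the pairs given in the text.
scales = {"L1": (3, 8), "L2": (5, 16), "L3": (7, 24)}


def init_state(rows=ROWS, cols=COLS):
    """Return the initial state X(t=0) = 0 for every neuron."""
    return np.zeros((rows, cols), dtype=np.float64)


X0 = init_state()
print(X0.shape)  # (128, 128)
```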
