Distributed stochastic gradient descent method

A distributed stochastic gradient descent method, applied in the field of machine learning, that addresses problems such as long waiting time for recycling and poor model parameters. Its stated effects are a shorter overall training time, faster convergence, and a more efficient training process.



Embodiment

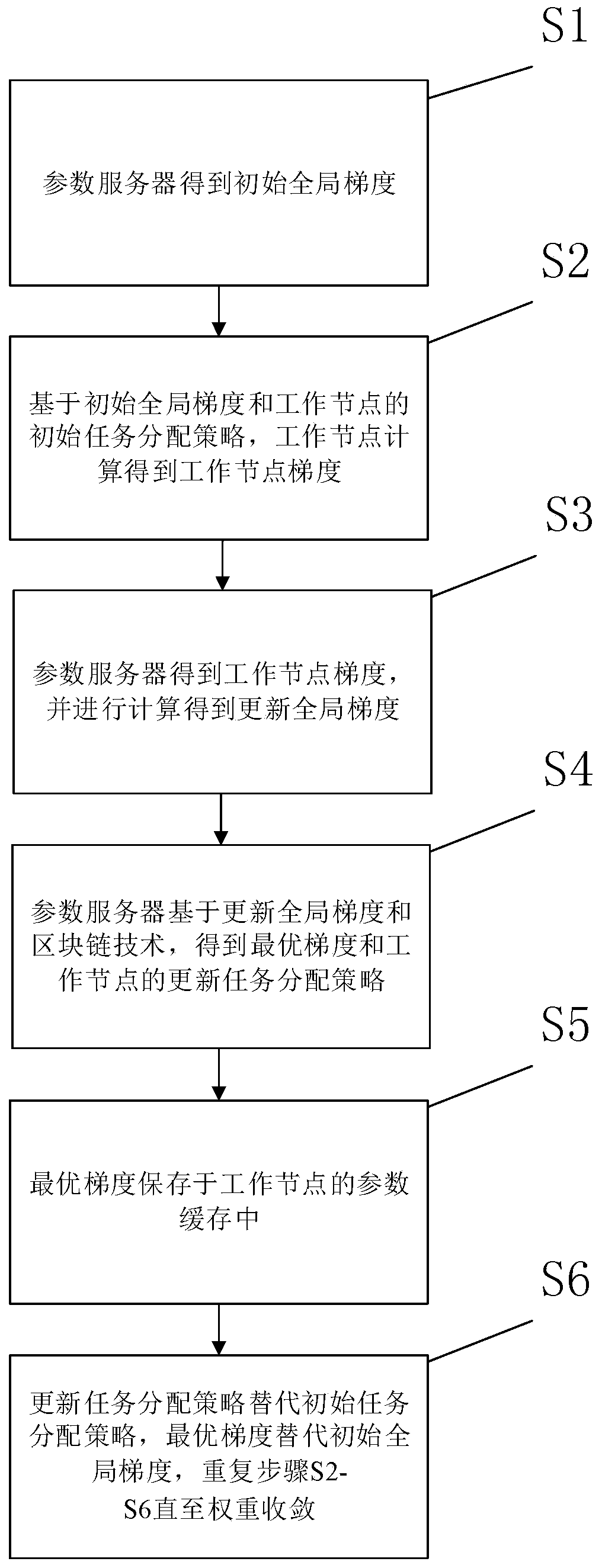

[0054] This embodiment provides a distributed stochastic gradient descent method which, as shown in Figure 1, includes the following steps:

[0055] Step S1: The parameter server obtains the initial global gradient;

[0056] Step S2: Based on the initial global gradient and its initial task allocation strategy, each working node calculates its own gradient;

[0057] Step S3: The parameter server collects the gradients of the working nodes and computes an updated global gradient from them;

[0058] Step S4: Based on the updated global gradient and blockchain technology, the parameter server obtains the optimal gradient and the updated task allocation strategy of the working nodes;

[0059] Step S5: The optimal gradient is saved in the parameter cache of the working node;

[0060] Step S6: The updated task allocation strategy replaces the initial task allocation strategy, the optimal gradient replaces the initial global gradient, a...
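The visible part of steps S1 to S6 can be sketched roughly as the loop below. This is only an illustrative sketch under several assumptions that are not in the source: a toy one-parameter model y ≈ w·x with squared-error loss, an initial global gradient of zero, a shard-size-weighted average as the aggregation rule in S3, and a hypothetical `select_optimal` placeholder that stands in for the blockchain-based step S4 by returning its inputs unchanged. All function and variable names are invented for illustration, and the truncated remainder of step S6 is not modelled.

```python
from typing import Dict, List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for the assumed toy model y ≈ w * x


def worker_gradient(w: float, shard: List[Sample]) -> float:
    """S2 (assumed form): mean gradient of 0.5 * (w*x - y)**2 over one shard."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)


def aggregate(grads: Dict[str, float], sizes: Dict[str, int]) -> float:
    """S3 (assumed rule): shard-size-weighted average of the worker gradients."""
    total = sum(sizes.values())
    return sum(grads[k] * sizes[k] for k in grads) / total


def select_optimal(global_grad: float, allocation: Dict[str, List[int]]):
    """S4 placeholder: the source uses blockchain technology here; this
    hypothetical stand-in returns the gradient and allocation unchanged."""
    return global_grad, allocation


def train(data: List[Sample], allocation: Dict[str, List[int]],
          lr: float = 0.1, rounds: int = 50) -> Dict[str, float]:
    global_grad = 0.0                                # S1: assumed initial value
    cache = {k: global_grad for k in allocation}     # per-node parameter cache
    weights = {k: 0.0 for k in allocation}           # each node's model copy
    for _ in range(rounds):
        grads, sizes = {}, {}
        for k, idx in allocation.items():
            weights[k] -= lr * cache[k]              # apply the cached gradient
            shard = [data[i] for i in idx]           # samples assigned to node k
            grads[k] = worker_gradient(weights[k], shard)   # S2
            sizes[k] = len(shard)
        updated = aggregate(grads, sizes)                    # S3
        optimal, allocation = select_optimal(updated, allocation)  # S4
        cache = {k: optimal for k in allocation}             # S5
        global_grad = optimal                                # S6 (visible part)
    return weights


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]   # generated by y = 2x
allocation = {"node0": [0, 1], "node1": [2, 3]}
final_weights = train(data, allocation)
```

In this toy setting every node should end up with a weight close to 2, since the data were generated with that slope. A real task allocation strategy would presumably resize the shards between rounds, which the placeholder here does not attempt.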
