All-weather unmanned autonomous working platform in an unknown environment

This unmanned autonomous technology for unknown environments is applied in the fields of artificial intelligence and visual navigation. It addresses poor accuracy, insufficient autonomy, and poor environmental adaptability. Its effects are improved obstacle-avoidance accuracy, reduced computing time, and high accuracy.


Embodiment Construction

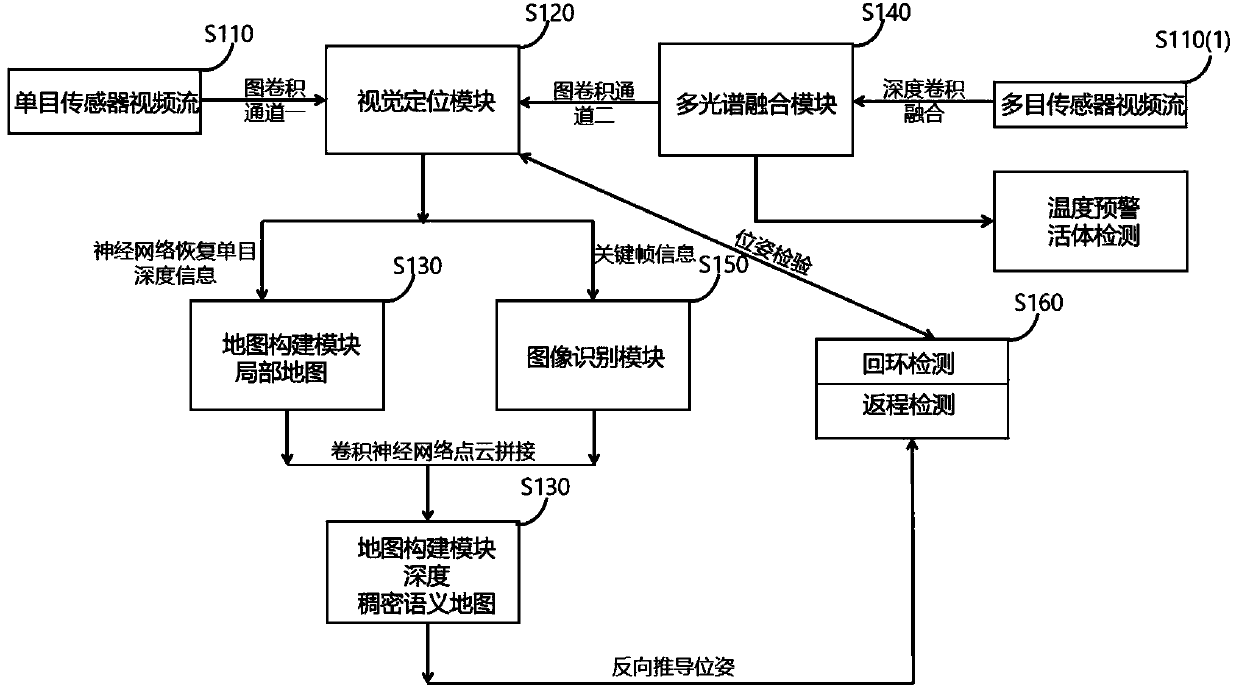

[0042] The technical solution of the present invention is described in detail below with reference to the accompanying drawing:

[0043] An all-weather unmanned autonomous working platform for unknown environments includes five modules: a visual positioning module, a multi-spectral image fusion module, an image recognition module, a map construction module, and a loop-closure and return detection module. The visual positioning module uses a GCN (graph convolutional network) to select key frames from the video stream, generates binary feature-point descriptors, and solves for the pose. The map construction module receives sparse feature-point cloud data from the positioning module and builds a local map. The multi-spectral image fusion module fuses the key frames and passes the fused image to the image recognition module. The image recognition module classifies the fused multi-spectral image, finds target objects, and constructs a semantic map. After the ...
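Two steps in the paragraph above are binary-descriptor matching and multi-spectral fusion. The text does not say how either is done. The following Python sketch is illustrative only and rests on several assumptions. It assumes ORB-style binary descriptors stored as integers. It assumes matching by mutual nearest neighbour under Hamming distance. It assumes fusion is a simple pixel-wise weighted average of co-registered visible and infrared frames. The names `hamming`, `match_descriptors`, `fuse_weighted`, `max_dist`, and `alpha` are hypothetical. This is not the GCN-based method of the invention.

```python
from typing import List, Tuple

Image = List[List[float]]  # hypothetical grayscale image: list of rows


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors (assumed int-encoded)."""
    return bin(a ^ b).count("1")


def match_descriptors(
    query: List[int], train: List[int], max_dist: int = 64
) -> List[Tuple[int, int, int]]:
    """Mutual nearest-neighbour matching under Hamming distance (assumed rule).

    Returns (query_index, train_index, distance) for pairs that are each
    other's nearest neighbour and whose distance is at most max_dist.
    Ties are broken by the lowest index.
    """
    if not query or not train:
        return []
    # Nearest train descriptor for each query descriptor.
    fwd = [min(range(len(train)), key=lambda j: hamming(q, train[j])) for q in query]
    # Nearest query descriptor for each train descriptor.
    bwd = [min(range(len(query)), key=lambda i: hamming(query[i], t)) for t in train]
    matches = []
    for i, j in enumerate(fwd):
        d = hamming(query[i], train[j])
        # Keep only mutual matches within the distance threshold.
        if bwd[j] == i and d <= max_dist:
            matches.append((i, j, d))
    return matches


def fuse_weighted(visible: Image, infrared: Image, alpha: float = 0.5) -> Image:
    """Pixel-wise weighted average of two co-registered images (assumed fusion rule)."""
    if len(visible) != len(infrared) or any(
        len(rv) != len(rr) for rv, rr in zip(visible, infrared)
    ):
        raise ValueError("images must have the same shape")
    return [
        [alpha * v + (1 - alpha) * r for v, r in zip(rv, rr)]
        for rv, rr in zip(visible, infrared)
    ]


if __name__ == "__main__":
    print(match_descriptors([0b1010, 0b1111], [0b1011, 0b0000, 0b1111]))
    print(fuse_weighted([[0, 100]], [[200, 100]], alpha=0.5))
```

Running it prints `[(0, 0, 1), (1, 2, 0)]` and `[[100.0, 100.0]]`. The mutual check drops one-sided matches, which is a common way to reject ambiguous correspondences before pose solving. A real system would use 256-bit descriptors and a learned or multi-scale fusion rule rather than a fixed `alpha`.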
