Target detection method based on deep learning

A target detection and deep learning technology, applied in the field of computer vision. It addresses problems such as poor robustness, low detection efficiency, and long processing time, and achieves strong robustness, improved detection accuracy, and fewer missed and false detections.

Embodiment Construction

[0038] The specific embodiments provided by the present invention will be described in detail below in conjunction with the accompanying drawings.

[0039] A method for object detection based on deep learning, comprising:

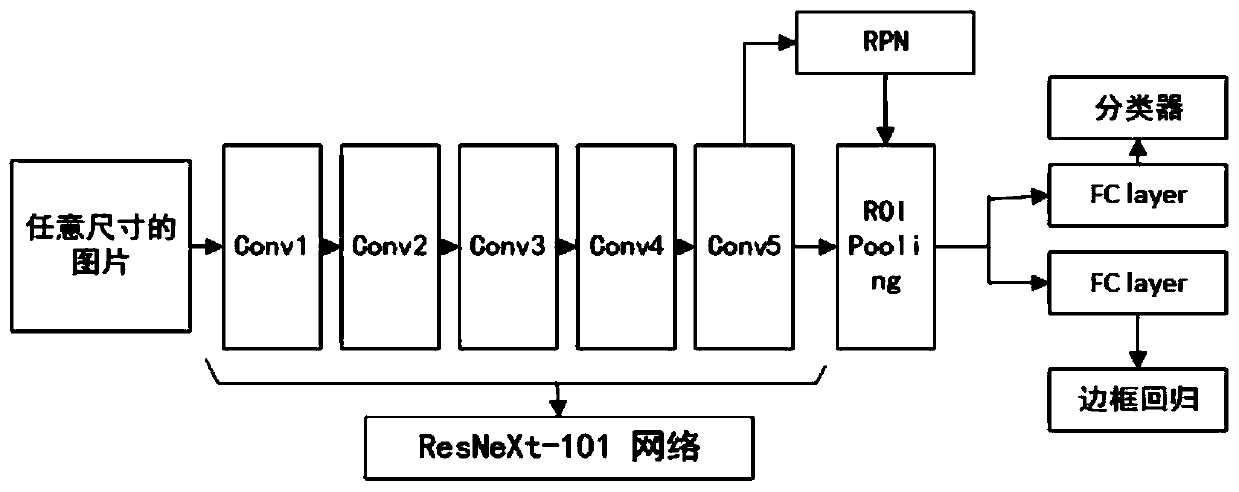

[0040] 1) In the Faster RCNN method, the VGG16 network used to extract image features is replaced by a 101-layer residual network, which is deeper and has stronger expressive ability;

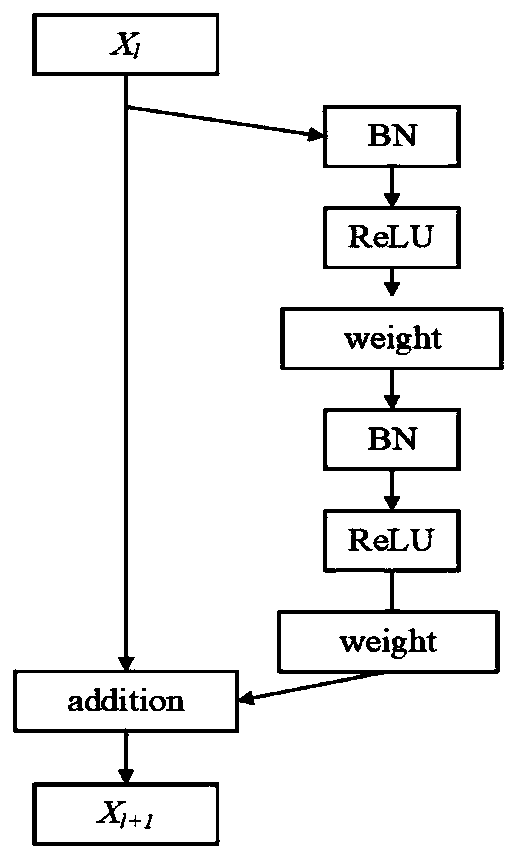

[0041] A ResNeXt network with a 101-layer structure is used to learn the target features. ResNeXt is an upgraded version of ResNet that retains ResNet's basic stacking method: it is built by stacking parallel blocks that share the same topology. The single path of ResNet is split into 32 independent paths (the number of paths is called the "cardinality"). These 32 paths perform convolution operations on the input at the same time, and their outputs are summed to form the final result. This operation makes the division of labor in the netwo...
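The split-and-sum behavior described in [0041] can be illustrated with a minimal sketch. This is not the patent's implementation: it assumes each path is a small reduce-then-expand linear transform applied to a single feature vector (spatial convolution is omitted), and it adds the ResNet-style identity shortcut. The names `make_path`, `run_path`, `aggregated_block`, `IN_DIM`, and `WIDTH` are hypothetical, and the sizes are chosen only to keep the example small. Only the count of 32 paths and the summation of their outputs come from the text.

```python
import random

CARDINALITY = 32  # number of parallel paths, as stated in the description


def make_path(in_dim, width, rng):
    """Build one path: a reduce matrix (width x in_dim) and an expand matrix (in_dim x width)."""
    reduce = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(width)]
    expand = [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(in_dim)]
    return reduce, expand


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def run_path(path, x):
    """Apply one path: reduce, ReLU, expand. Every path has the same topology."""
    reduce, expand = path
    return matvec(expand, relu(matvec(reduce, x)))


def aggregated_block(paths, x):
    """Feed the same input to every path, sum the path outputs, then add the shortcut."""
    total = [0.0] * len(x)
    for path in paths:
        y = run_path(path, x)
        total = [t + v for t, v in zip(total, y)]
    # Identity shortcut in the style of ResNet (an assumption of this sketch).
    return [xi + ti for xi, ti in zip(x, total)]


rng = random.Random(0)
IN_DIM, WIDTH = 8, 2  # hypothetical sizes, far smaller than a real network
paths = [make_path(IN_DIM, WIDTH, rng) for _ in range(CARDINALITY)]
x = [rng.uniform(-1.0, 1.0) for _ in range(IN_DIM)]
out = aggregated_block(paths, x)
print(len(paths), len(out))
```

Because every path shares one topology, the block is controlled by a single extra hyperparameter (the number of paths) rather than by per-branch design choices.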
