Edge computing platform container deployment method and system based on load prediction

A load prediction and edge computing technology, applied in the fields of computing, neural learning methods, and instruments, that avoids resource consumption, reduces container migration, and avoids feedback lag.

Embodiment Construction

[0035] Because edge computing nodes are often non-dedicated, a long-running task is typically already executing on a node before any computing task is dispatched to it. This pre-existing task is called the original task, and the resources it occupies are called the original load.
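To make the distinction between original load and the load added by dispatched computing tasks concrete, here is a minimal Python sketch. It assumes a single resource dimension (CPU, measured in cores); the class `NodeLoad` and its fields are hypothetical illustrations, not structures defined in the patent.

```python
from dataclasses import dataclass


@dataclass
class NodeLoad:
    """Hypothetical resource model of one non-dedicated edge node (CPU only)."""
    cpu_capacity: float    # total CPU cores on the node
    original_cpu: float    # cores occupied by the pre-existing original task(s)
    task_cpu: float = 0.0  # cores occupied by computing tasks dispatched by the platform

    def available_cpu(self) -> float:
        # Capacity left for new computing tasks after subtracting the original load
        # and any already-dispatched tasks; never reported as negative.
        return max(0.0, self.cpu_capacity - self.original_cpu - self.task_cpu)


node = NodeLoad(cpu_capacity=4.0, original_cpu=1.5)
print(node.available_cpu())  # 2.5
```

The point of the split is that the original load is outside the platform's control, so any capacity estimate for placing new containers has to account for it first.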

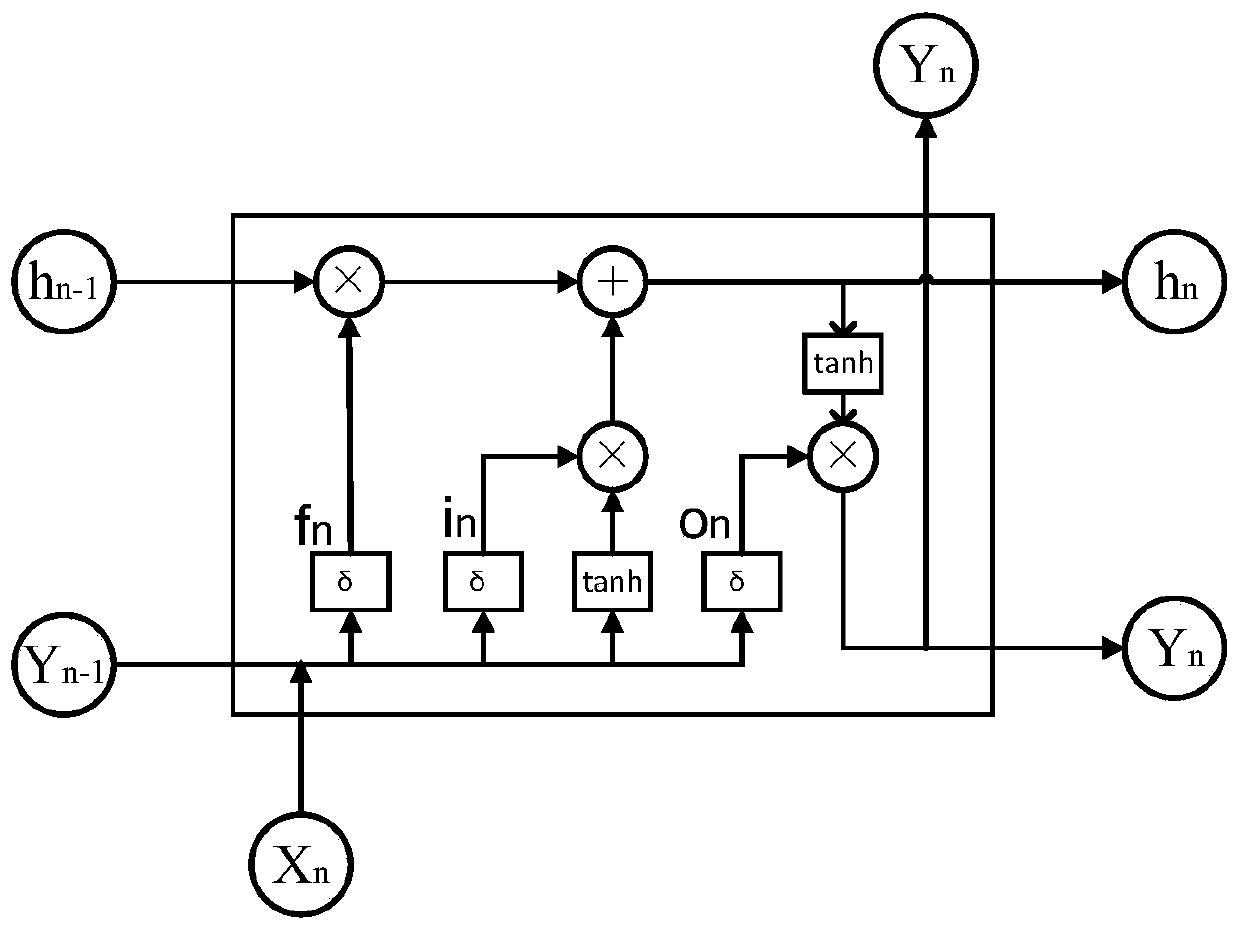

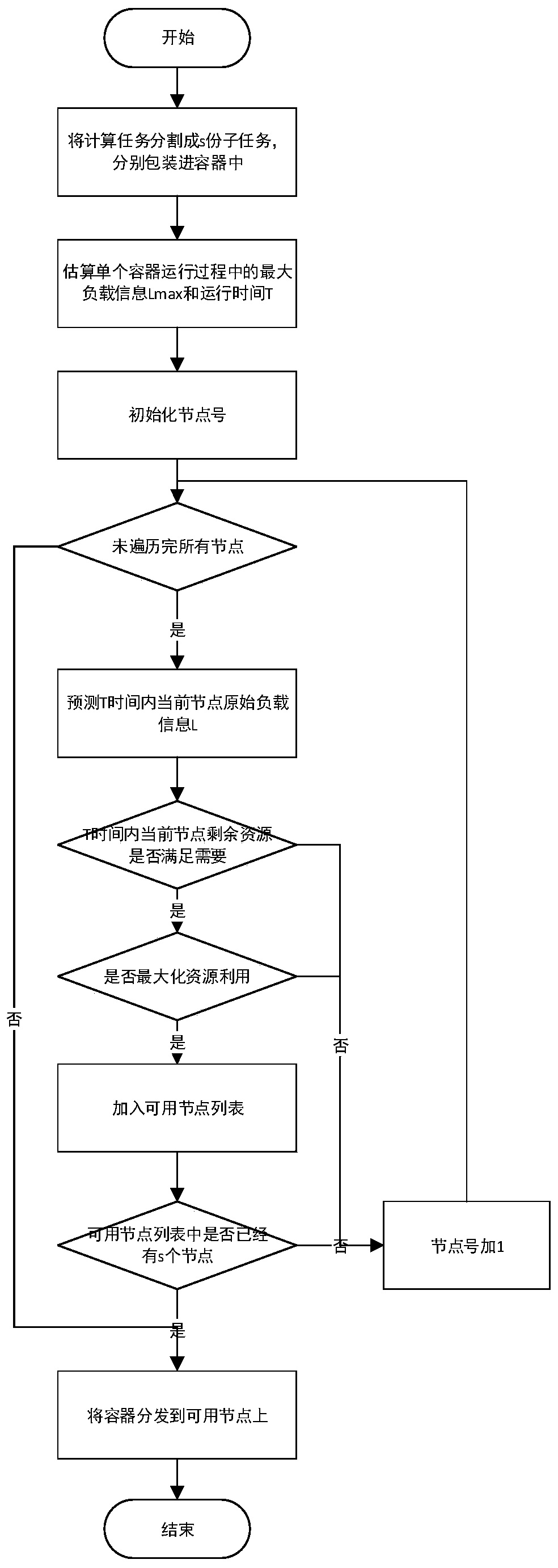

[0036] Referring to Figure 1, the load-prediction-based edge computing platform container deployment system provided by the present invention includes an original load monitoring system, a node load forecasting system, and a computing task management system. The original load monitoring system runs persistently on each computing node, while the node load forecasting system and the computing task management system run persistently on the central node. Multiple computing nodes are connected to the node load forecasting system and send their original load information to it. The node load forecasting system receives the original load information of the nodes and manag...
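The three-part architecture can be sketched in Python as follows, under several stated assumptions. The class names `NodeLoadForecaster` and `TaskManager`, the smoothing factor `alpha`, and the placement rule are all hypothetical. Exponential smoothing is swapped in as a simple stand-in for the patent's load prediction model (which, per its classification, involves neural learning methods), and because the paragraph is truncated before it describes the management logic, the "most predicted headroom" placement rule is an illustrative guess, not the patented method. The network transport between nodes and the central node is omitted; `report` simply stands in for a node's monitoring agent sending a sample.

```python
from typing import Dict, Optional


class NodeLoadForecaster:
    """Hypothetical central-node forecaster. Exponential smoothing stands in
    for the actual (e.g. neural) original-load prediction model."""

    def __init__(self, alpha: float = 0.5) -> None:
        self.alpha = alpha
        self.estimates: Dict[str, float] = {}

    def report(self, node_id: str, original_load: float) -> None:
        # One original-load sample sent by a node's monitoring agent.
        prev = self.estimates.get(node_id)
        if prev is None:
            self.estimates[node_id] = original_load
        else:
            self.estimates[node_id] = self.alpha * original_load + (1 - self.alpha) * prev

    def predict(self, node_id: str) -> float:
        # One-step-ahead forecast of the node's original load.
        return self.estimates[node_id]


class TaskManager:
    """Hypothetical central-node task manager with a max-headroom placement rule."""

    def __init__(self, forecaster: NodeLoadForecaster, capacities: Dict[str, float]) -> None:
        self.forecaster = forecaster
        self.capacities = capacities

    def choose_node(self, demand: float) -> Optional[str]:
        # Pick the node whose predicted free capacity fits the container and is largest.
        best: Optional[str] = None
        best_headroom = float("-inf")
        for node_id, capacity in self.capacities.items():
            headroom = capacity - self.forecaster.predict(node_id)
            if headroom >= demand and headroom > best_headroom:
                best, best_headroom = node_id, headroom
        return best


forecaster = NodeLoadForecaster(alpha=0.5)
for load in [1.0, 3.0]:
    forecaster.report("edge-a", load)  # estimate: 1.0, then 2.0
for load in [2.0, 1.0]:
    forecaster.report("edge-b", load)  # estimate: 2.0, then 1.5

manager = TaskManager(forecaster, {"edge-a": 4.0, "edge-b": 4.0})
print(manager.choose_node(2.2))  # edge-b (predicted headroom 2.5 vs 2.0)
print(manager.choose_node(3.0))  # None (no node is predicted to fit)
```

Keeping the predictor behind a single `predict` method means a stronger model could replace the smoothing stand-in without touching the placement logic. Placing containers on forecast rather than currently observed load is the kind of design the summary associates with fewer container migrations and less feedback lag, though the exact mechanism is not visible in this excerpt.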
