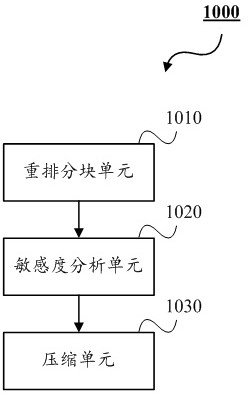

A deep neural network compression method and device

The invention relates to neural network compression technology, applied in fields such as neural learning methods, biological neural network models, and neural architectures. It addresses the problems of the large computational load of neural networks and unsatisfactory compression results, with the effects of releasing storage resources, introducing only small changes, and speeding up computation.

Embodiment Construction

[0040] The drawings are for illustration only and should not be construed as limiting the invention. The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

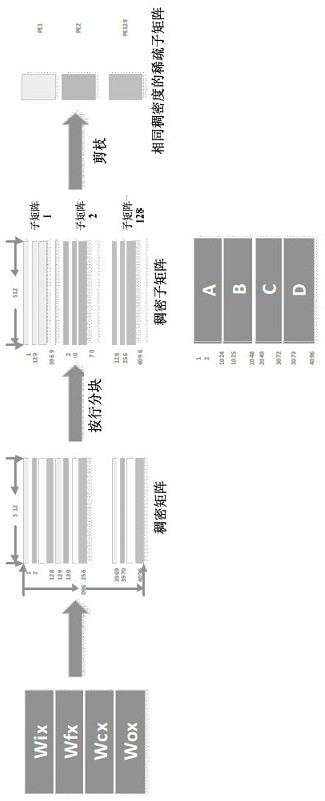

[0041] In the following, network sparsification of an LSTM neural network is used as a preferred embodiment of the present invention to describe in detail the method for compressing a neural network according to the present invention.

[0042] In an LSTM neural network, the forward calculation consists mainly of a series of matrix-vector multiplications, as shown in the following formula:

[0043]

[0044] Two types of LSTM are given in the formula: the simplest LSTM structure on the right, and the LSTMP structure on the left, whose main feature is the addition of peephole and projection operations to the simple LSTM. Whether LSTM or LSTMP, the structure mainly includes four matrices: c (unit ...
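To make the point of [0042] concrete, the following is a minimal sketch of one forward step of a plain LSTM (no peephole, no projection), written so that the number of multiplications is counted. It is an illustration under stated assumptions, not the formula or the compression method of the patent: the gate keys `"i"`, `"f"`, `"o"`, `"c"`, the function names, the toy weights, and the magnitude-threshold pruning rule are all hypothetical choices made here for demonstration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    """Matrix-vector product that skips zero weights.

    Returns the result vector and the number of multiplications performed.
    """
    out, mults = [], 0
    for row in M:
        acc = 0.0
        for w, x in zip(row, v):
            if w != 0.0:
                acc += w * x
                mults += 1
        out.append(acc)
    return out, mults


def prune_by_magnitude(M, threshold):
    """Hypothetical sparsification rule: zero every weight with |w| < threshold."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in M]


def lstm_step(x, h_prev, c_prev, W, b):
    """One step of a plain LSTM.

    W[g] is the weight matrix of gate g applied to the concatenation
    [x; h_prev]; b[g] is the matching bias. Gate keys are an assumption
    of this sketch. Returns (h, c, total multiplications).
    """
    z = x + h_prev  # list concatenation of input and previous hidden state
    pre, total = {}, 0
    for g in ("i", "f", "o", "c"):
        y, m = matvec(W[g], z)
        pre[g] = [yk + bk for yk, bk in zip(y, b[g])]
        total += m
    i = [sigmoid(a) for a in pre["i"]]
    f = [sigmoid(a) for a in pre["f"]]
    o = [sigmoid(a) for a in pre["o"]]
    g_in = [math.tanh(a) for a in pre["c"]]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g_in)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c, total


# Toy example: input size 1, hidden size 2, so each gate matrix is 2 x 3.
gates = ("i", "f", "o", "c")
W = {g: [[0.5, -0.01, 0.02], [0.03, -0.8, 0.004]] for g in gates}
b = {g: [0.0, 0.0] for g in gates}
x, h0, c0 = [1.0], [0.0, 0.0], [0.0, 0.0]

_, _, dense_mults = lstm_step(x, h0, c0, W, b)
W_sparse = {g: prune_by_magnitude(W[g], 0.1) for g in gates}
_, _, sparse_mults = lstm_step(x, h0, c0, W_sparse, b)
print(dense_mults, sparse_mults)  # prints "24 8"
```

In this toy setting, zeroing the small weights cuts the multiplications per step from 24 to 8, which is the kind of saving sparsification aims for. How much accuracy is retained depends on which weights are removed, which this sketch does not address.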
