Deep neural network compression method considering load balancing

A neural network and compression-ratio technology, applied to deep neural network compression considering load balancing, that addresses problems such as limited acceleration and CPUs and GPUs being unable to fully enjoy ...

Embodiment Construction

[0078] Inventor's past research results

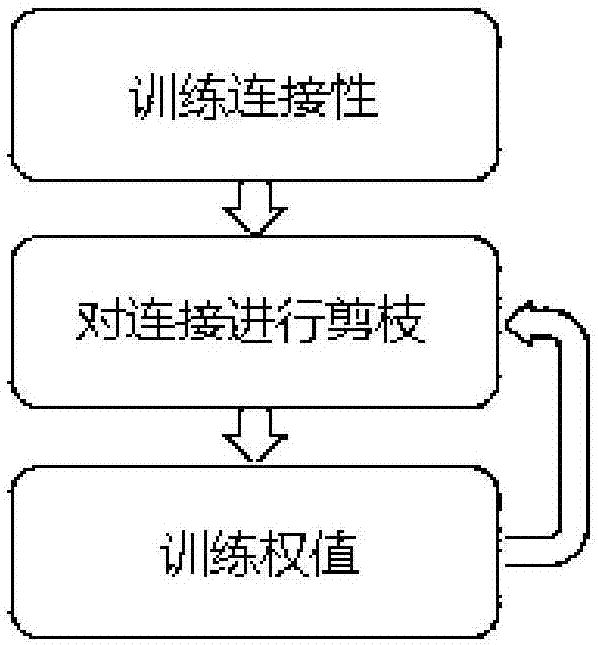

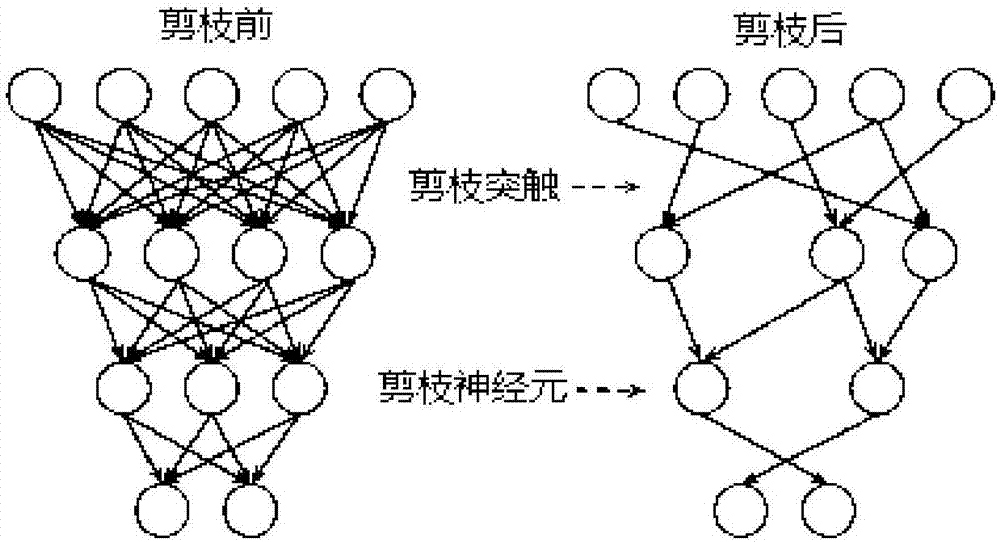

[0079] The inventor's previous article, "Learning both weights and connections for efficient neural networks", proposed a method for compressing neural networks (e.g., CNNs) by pruning. The method includes the following steps.

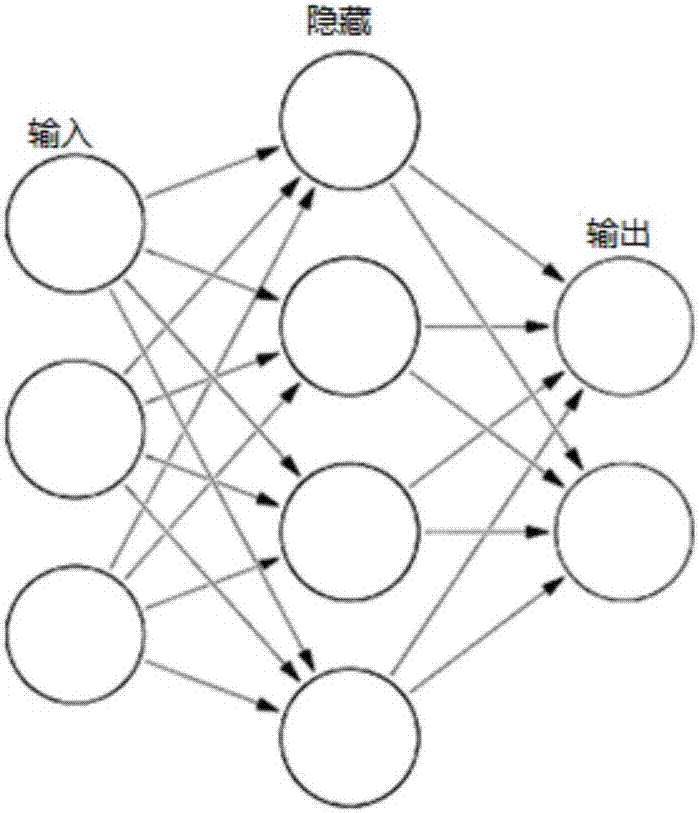

[0080] In the initialization step, the weights of the convolutional layers and the FC (fully connected) layers are initialized to random values. This produces a fully connected ANN in which every connection has a weight parameter.
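The initialization step can be illustrated with a minimal sketch. It covers only fully connected layers (convolutional layers are omitted for brevity), and the function names, layer sizes, and the uniform range of ±0.1 are assumptions chosen for illustration, not values taken from the patent.

```python
import random


def init_layer(n_in, n_out, rng, scale=0.1):
    """Return an n_out x n_in weight matrix of small random values."""
    return [[rng.uniform(-scale, scale) for _ in range(n_in)]
            for _ in range(n_out)]


def init_network(layer_sizes, seed=0):
    """Randomly initialize one dense weight matrix per pair of adjacent layers."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    return [init_layer(n_in, n_out, rng)
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]


# Hypothetical network: 4 inputs, 3 hidden units, 2 outputs.
weights = init_network([4, 3, 2])

# Before pruning, every connection between adjacent layers has its own weight.
num_connections = sum(len(w) * len(w[0]) for w in weights)
```

Here the network starts dense: the number of weight parameters equals the number of connections between adjacent layers, which is the state the later pruning step begins from.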

[0081] In the training step, the ANN is trained: its weights are adjusted according to the accuracy of the ANN until the accuracy reaches a predetermined standard. The weights are adjusted using the stochastic gradient descent algorithm, that is, the weight values are adjusted randomly and the adjustments are selected based on the resulting change in the ANN's accuracy. For an introduction to the stochastic gradient algorithm, see "Learning both weights and connections for efficient neural ne...
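As a rough illustration of training until accuracy reaches a predetermined standard, the sketch below uses the conventional gradient-based form of stochastic gradient descent on a single logistic unit. The toy dataset, the 0.95 accuracy target, the learning rate, and all function names are assumptions made for this example; the patent's actual network and stopping criterion are not specified in this excerpt.

```python
import math
import random

# Hypothetical, linearly separable toy data: (features, label).
TOY_DATA = [
    ((0.0, 0.0), 0), ((0.2, 0.1), 0), ((0.1, 0.3), 0), ((0.3, 0.2), 0),
    ((1.0, 1.0), 1), ((0.8, 0.9), 1), ((0.9, 0.7), 1), ((0.7, 0.8), 1),
]


def predict(w, b, x):
    """Sigmoid output of a single logistic unit."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def accuracy(w, b, data):
    """Fraction of samples whose thresholded prediction matches the label."""
    correct = sum((predict(w, b, x) >= 0.5) == (y == 1) for x, y in data)
    return correct / len(data)


def train_sgd(data, target_acc=0.95, lr=0.5, max_epochs=200, seed=0):
    """Update weights one sample at a time until accuracy meets the target."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in data[0][0]]  # random initialization
    b = 0.0
    for _ in range(max_epochs):
        if accuracy(w, b, data) >= target_acc:
            break  # predetermined accuracy standard reached
        samples = data[:]
        rng.shuffle(samples)  # the "stochastic" part: random sample order
        for x, y in samples:
            err = predict(w, b, x) - y  # gradient of log loss w.r.t. z
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b, accuracy(w, b, data)


w, b, final_acc = train_sgd(TOY_DATA)
```

The loop checks accuracy at the start of each epoch and stops as soon as the target is met, which mirrors the stopping condition described in the training step.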
