A bridge vehicle wheel detection method based on a multilayer feature fusion neural network model

The method combines a neural network model with feature fusion and is applied to deep-learning-based detection of vehicle wheels on bridges. It addresses three problems: reduced accuracy of the classification task, degraded performance of the final algorithm, and increased network burden.


Embodiment

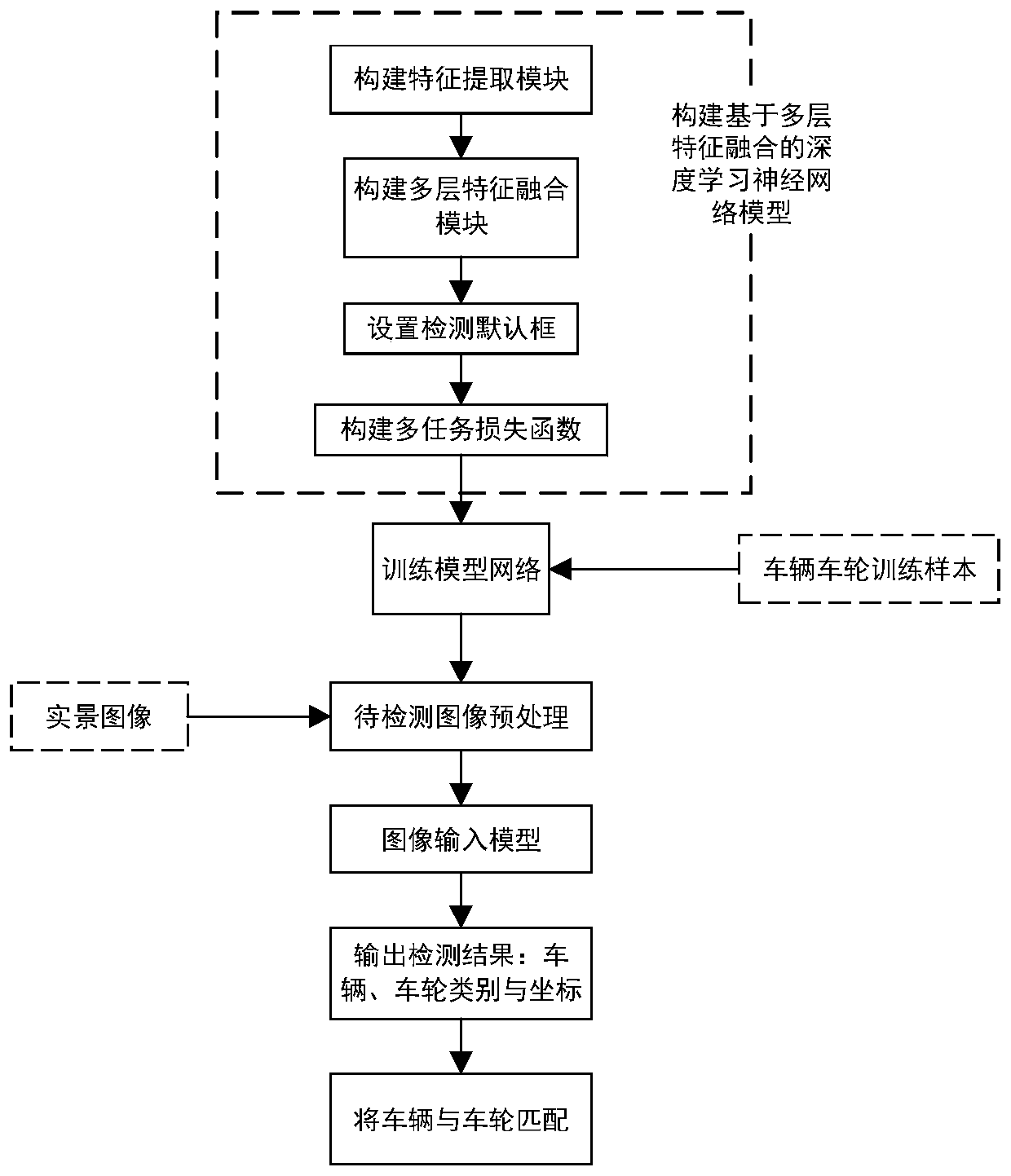

[0085] To make the object, technical scheme, and advantages of the present invention clearer, the invention is described in further detail below with reference to an embodiment and the algorithm flow chart shown in Figure 1. It should be understood that the specific embodiments described here only explain the present invention and do not limit it.

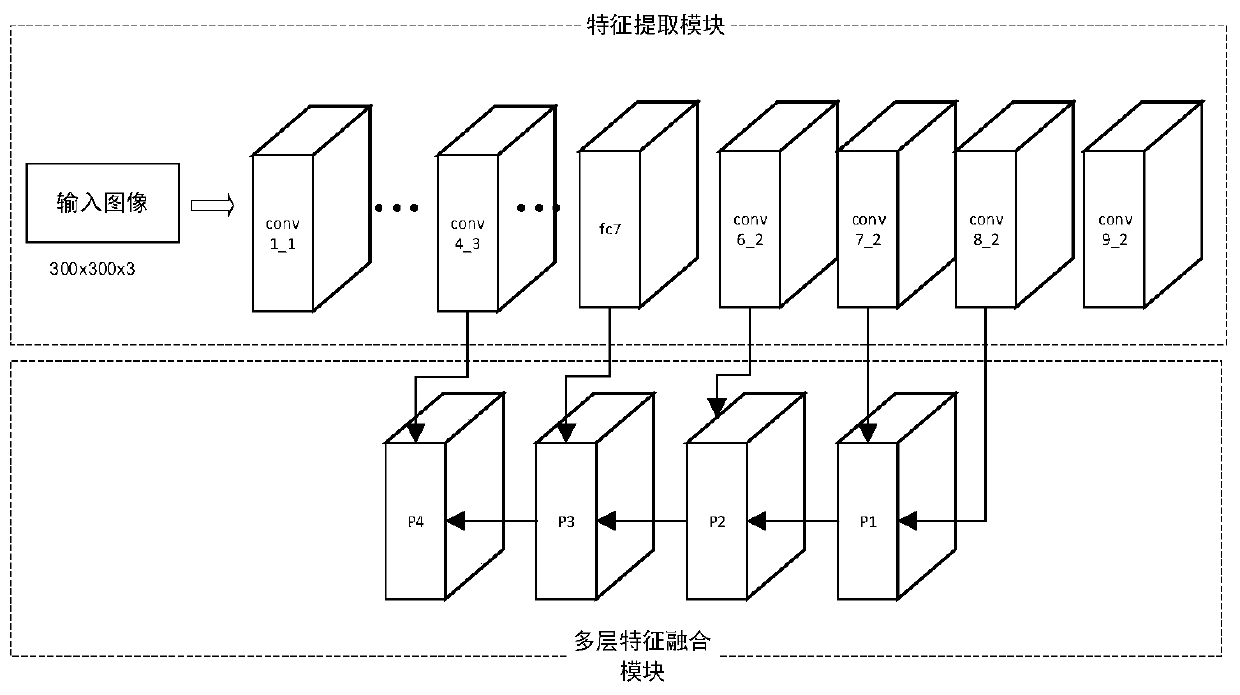

[0086] Step 1: Construct a deep learning neural network model based on multi-layer feature fusion. The specific description is as follows. The multi-layer feature fusion neural network model consists of a feature extraction module and a multi-layer feature fusion module, which are used to extract a series of feature maps of different sizes from the image to be detected. The model adds the multi-layer feature fusion module on top of the feature extraction module, which integrates the ...
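
The source text is cut off before it states how the fusion module combines feature maps, so the following is only an illustrative sketch of one common scheme (top-down fusion, as used in feature-pyramid-style networks), not the patent's confirmed method. It assumes that a coarse feature map is upsampled by nearest-neighbour repetition and added element-wise to a finer one. The function names `upsample_nearest` and `fuse` are hypothetical, and plain Python lists stand in for tensors.

```python
def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a 2-D map (list of rows) by an integer factor."""
    return [
        [value for value in row for _ in range(factor)]  # repeat each column
        for row in fmap
        for _ in range(factor)                           # repeat each row
    ]


def fuse(fine, coarse):
    """Assumed fusion rule: upsample the coarse map to the fine size, then add."""
    factor = len(fine) // len(coarse)
    upsampled = upsample_nearest(coarse, factor)
    return [
        [a + b for a, b in zip(fine_row, up_row)]
        for fine_row, up_row in zip(fine, upsampled)
    ]


# Two feature maps of different sizes, as produced by a feature extractor.
fine_map = [[1, 1, 1, 1] for _ in range(4)]   # 4x4, shallow layer
coarse_map = [[1, 2], [3, 4]]                  # 2x2, deeper layer
fused_map = fuse(fine_map, coarse_map)
```

Under this assumption the fused map keeps the resolution of the shallow layer while carrying information from the deeper layer. A real model would do the same on multi-channel tensors and would typically apply a convolution after the addition.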

Specific embodiment

[0163] Figure 1 is a flow chart of the method of the present invention. The specific embodiment is as follows:

[0164] 1. Build a feature extraction module;

[0165] 2. Build a multi-layer feature fusion module;

[0166] 3. Build a multi-task loss function;

[0167] 4. Resize all training-set images to 300×300;

[0168] 5. Set the initial learning rate to 0.001 and the number of training iterations to 100,000. After 60,000 iterations, reduce the learning rate to 10⁻⁴; after 80,000 iterations, reduce it to 10⁻⁵;

[0169] 6. Repeatedly feed the training images to the model, compute the loss value with the training loss function, and update the model parameters with the stochastic gradient descent (SGD) algorithm until the number of training iterations reaches the set value;

[0170] 7. Perform image enhancement preprocessing on the image to be detected;

[0171] 8. Adjust the size of the...
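
The training settings in steps 5 and 6 can be sketched as a step learning-rate schedule driving a plain SGD loop. The sketch reads "10w" as 100,000 iterations (w = 万, ten thousand), with drops after 60,000 and 80,000 iterations. The wheel-detection network and its multi-task loss are not reproduced here: a one-parameter toy model fitting y = 2x with a squared-error loss stands in for them, and the names `learning_rate` and `train` are hypothetical. Batch size and momentum are not given in the source, so the sketch uses single samples and no momentum.

```python
import random

TOTAL_ITERATIONS = 100_000  # "10w" in the source, read as 10 x 10,000


def learning_rate(iteration):
    """Step schedule from step 5; `iteration` counts completed updates."""
    if iteration < 60_000:
        return 1e-3
    if iteration < 80_000:
        return 1e-4
    return 1e-5


def train(total_iterations=TOTAL_ITERATIONS, seed=0):
    """Plain SGD on a toy model y = w * x (stand-in for the real network)."""
    rng = random.Random(seed)
    w = 0.0                                  # single trainable parameter
    for iteration in range(total_iterations):
        x = rng.uniform(-1.0, 1.0)           # toy training sample
        y = 2.0 * x                          # toy target
        grad = 2.0 * (w * x - y) * x         # d/dw of (w * x - y) ** 2
        w -= learning_rate(iteration) * grad # SGD update with scheduled rate
    return w


trained_w = train()
```

The loop stops when the iteration count reaches the set value, as step 6 describes, rather than on a convergence test. In a deep learning framework the same schedule would normally be expressed with a built-in multi-step scheduler instead of a hand-written function.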
