LRU flash memory cache management method based on dynamic page weight

A cache management technology based on dynamic page weights, applied in the field of storage systems, that addresses problems such as a low hit rate, high write overhead, and the lack of management methods for the flash cache area.


Embodiment Construction

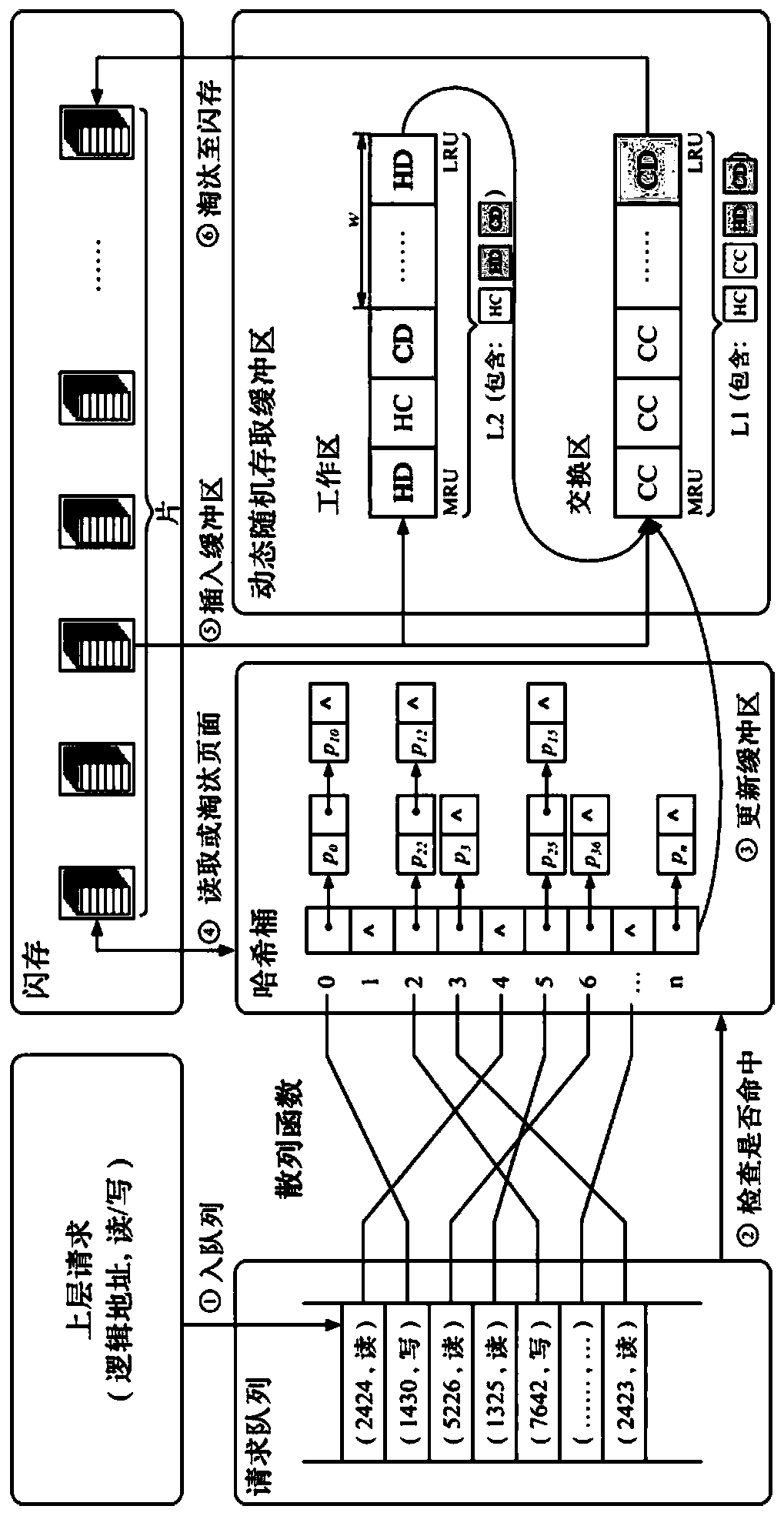

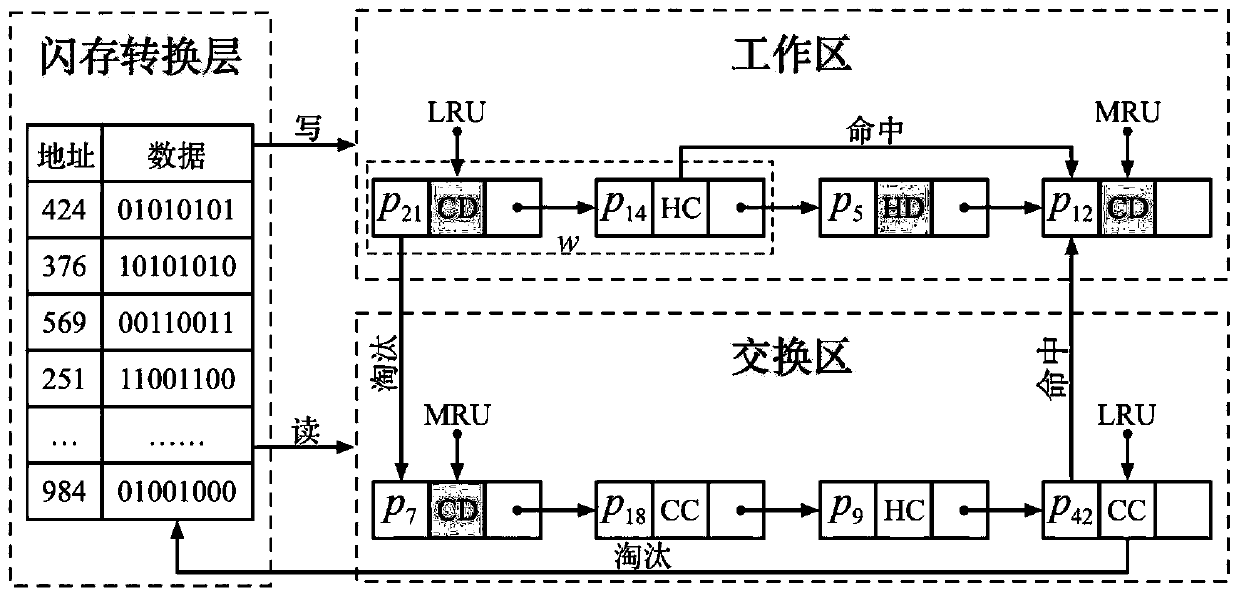

[0064] A dynamic page weight-based flash memory cache management method provided by the present invention will be further described below with reference to the accompanying drawings.

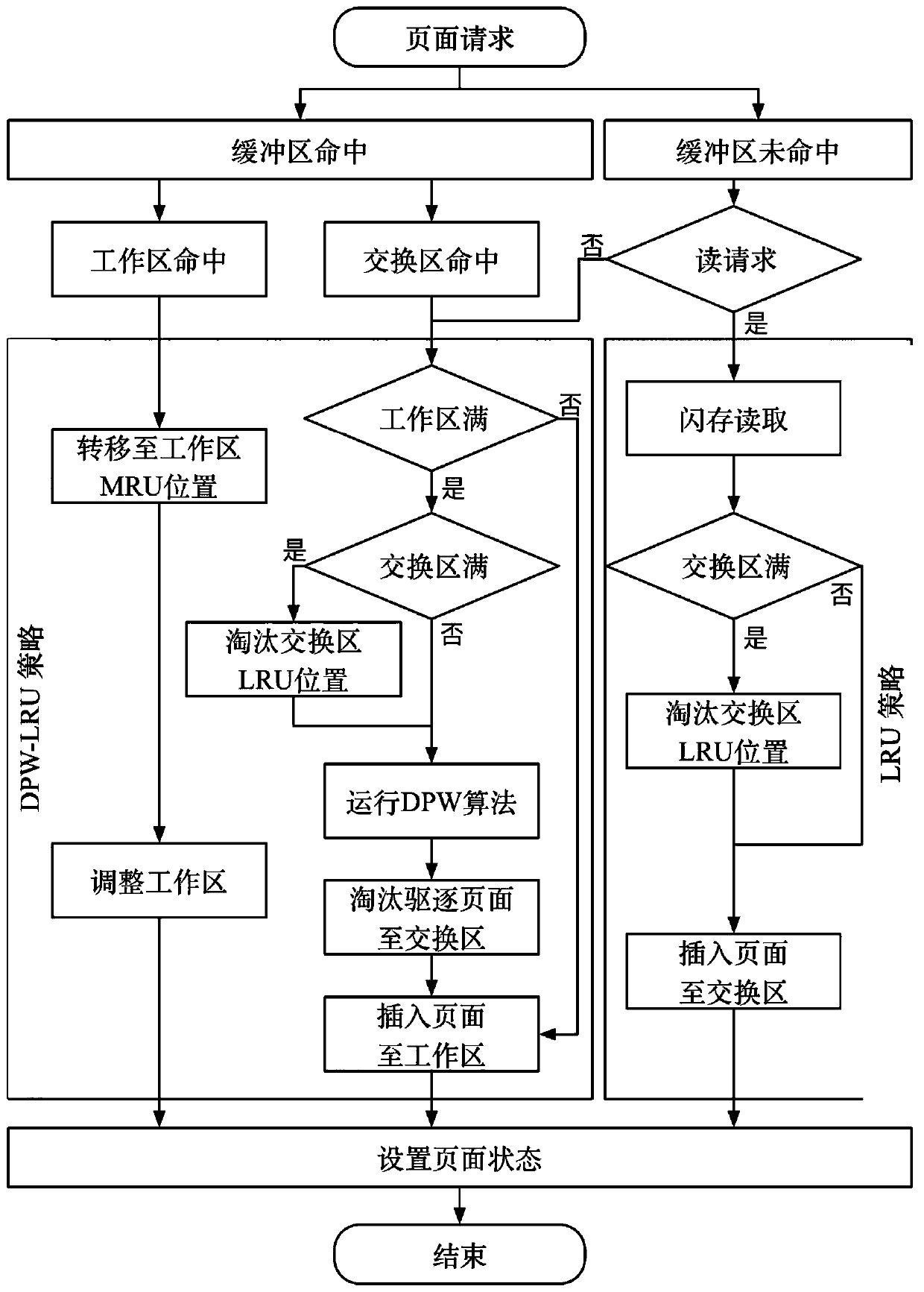

[0065] Referring to Figure 1, which shows the flow chart of the present invention, the specific implementation is as follows:

[0066] Step S1: Read the page requests in the request queue, and identify and classify each request by its type and by the area in which the requested page is located. This step specifically includes the following sub-steps:

[0067] Step S11: Preprocess the page request queue. Denote a page request by $R$; each request contains a request number $R_{pid}$ and a page request mode $R_{am} \in \{\text{read}, \text{write}\}$. The request queue $S$ can be expressed by the following formula:

[0068] $S = \{R_1, R_2, \ldots, R_j, \ldots, R_n\}, \quad 1 \le j \le n$

[0069] The request queue $S$ is a collection of multiple page requests $R_{pid}$, where $n$ is the total number of requests in $S$ and $j$ is the page request index. Set ...
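As a minimal sketch of Step S11, assuming each raw request arrives as a (request number, mode) pair and that $R_{pid}$ is an integer, the request $R$ and the queue $S$ could be represented as below. The names `PageRequest`, `build_queue` and `classify_by_mode` are hypothetical illustrations, not part of the described method. Only the read/write part of the classification in Step S1 is shown, because the text here is truncated before the area classification is defined.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# The two request modes R_am allowed in Step S11.
MODES = ("read", "write")


@dataclass(frozen=True)
class PageRequest:
    """A page request R with request number R_pid and request mode R_am."""
    pid: int  # R_pid (assumed here to be an integer identifier)
    am: str   # R_am, must be one of MODES

    def __post_init__(self) -> None:
        # Reject any mode outside {read, write}.
        if self.am not in MODES:
            raise ValueError(f"R_am must be 'read' or 'write', got {self.am!r}")


def build_queue(raw: List[Tuple[int, str]]) -> List[PageRequest]:
    """Preprocess raw (pid, mode) pairs into the queue S = {R_1, ..., R_n}."""
    return [PageRequest(pid, am) for pid, am in raw]


def classify_by_mode(queue: List[PageRequest]) -> Dict[str, List[int]]:
    """Group the 1-based request indices j (1 <= j <= n) by request mode R_am."""
    groups: Dict[str, List[int]] = {mode: [] for mode in MODES}
    for j, req in enumerate(queue, start=1):
        groups[req.am].append(j)
    return groups


if __name__ == "__main__":
    S = build_queue([(3, "read"), (7, "write"), (3, "write")])
    n = len(S)  # total number of requests in S
    print(n, classify_by_mode(S))  # 3 {'read': [1], 'write': [2, 3]}
```

Indices are kept 1-based so that they match the subscript $j$ in $S = \{R_1, \ldots, R_n\}$; the same page number may appear in several requests, as page 3 does in the usage example.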
