External corpus speech identification method based on deep convolutional neural network

A deep convolutional neural network technique, applied to speech recognition with an external corpus, addresses two problems: the original speech signal features go unused, and the optimal solution cannot be obtained. It improves sentence recognition accuracy and the overall recognition rate.

Embodiment Construction

[0047] The present invention is further described below with reference to the accompanying drawings.

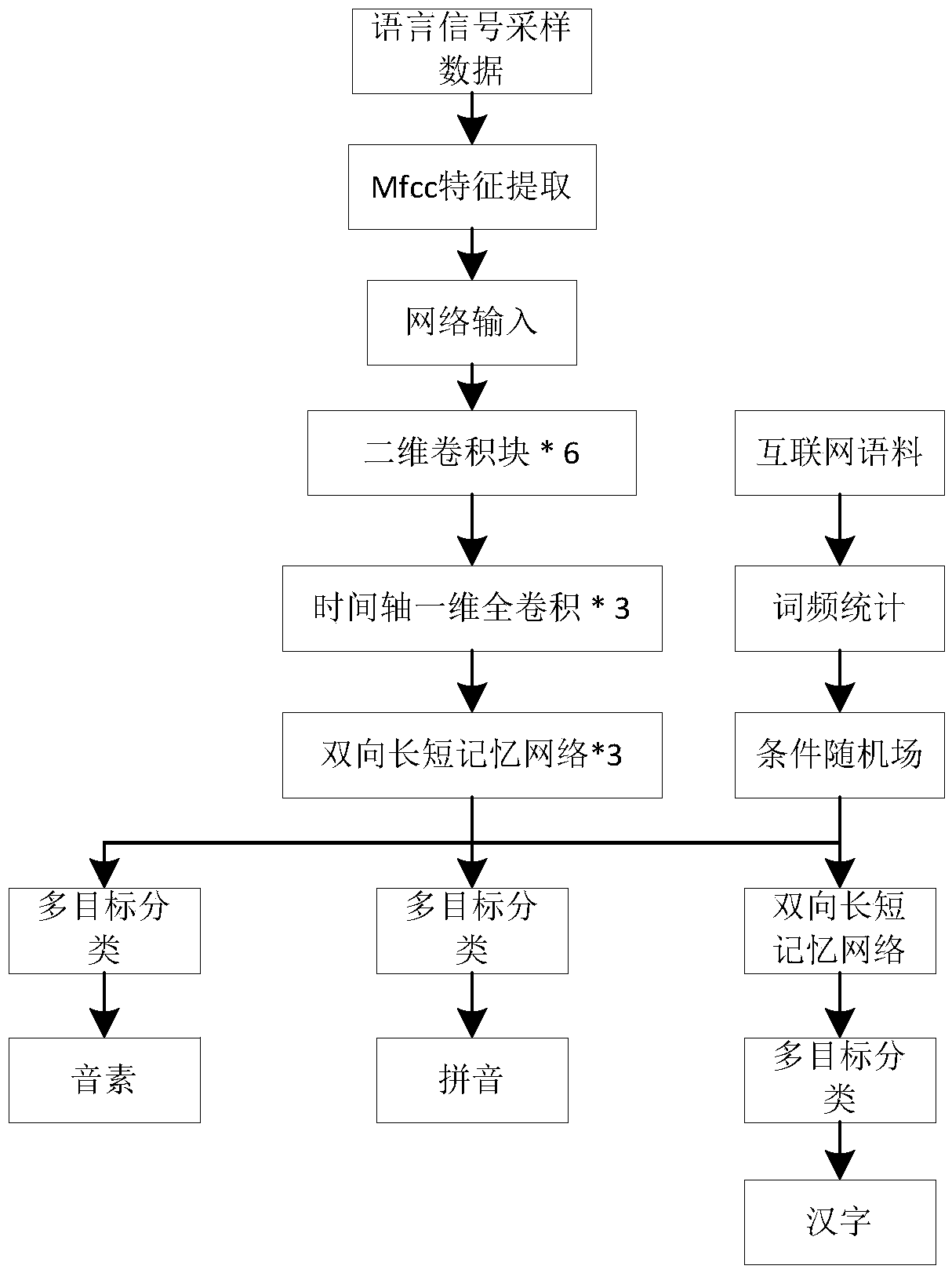

[0048] As shown in Figures 1-3, the external corpus speech recognition method based on a deep convolutional neural network is implemented through the following steps:

[0049] Step 1. Obtain speech annotation data and an Internet corpus.

[0050] 1-1 The speech annotation data is a recording of a spoken paragraph. The recording is analyzed manually to obtain the corresponding Chinese character sequence, pinyin sequence, and phoneme sequence.

[0051] 1-2 Each Chinese character has a pinyin, and one pinyin may correspond to multiple Chinese characters. Specifically, each pinyin is split into an initial and a final. The initials and finals are in turn split into phonemes, so that one initial or final may correspond to multiple phonemes.
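The pinyin-to-initial/final split in 1-2 can be sketched as follows. This is a minimal illustration and not the patent's implementation: the initial inventory, the choice to treat `y` and `w` as initials, the toy `PINYIN_TO_CHARS` lexicon, and the function names `split_pinyin` and `to_units` are all assumptions made for the example. The further split of initials and finals into phonemes is not shown, because the source does not give the phoneme inventory.

```python
from typing import Dict, List, Tuple

# Assumed initial inventory for toneless Hanyu Pinyin. Two-letter initials
# come first so that "zh" is matched before "z". Treating "y" and "w" as
# initials is a common convention, adopted here as an assumption.
INITIALS: Tuple[str, ...] = (
    "zh", "ch", "sh",
    "b", "p", "m", "f", "d", "t", "n", "l",
    "g", "k", "h", "j", "q", "x", "r", "z", "c", "s", "y", "w",
)

# Hypothetical toy lexicon showing that one pinyin maps to several characters.
PINYIN_TO_CHARS: Dict[str, List[str]] = {
    "ma": ["妈", "马", "吗"],
    "shi": ["是", "十", "事"],
}


def split_pinyin(syllable: str) -> Tuple[str, str]:
    """Split a toneless pinyin syllable into (initial, final)."""
    for initial in INITIALS:
        if syllable.startswith(initial):
            return initial, syllable[len(initial):]
    # Zero-initial syllable such as "an": the whole syllable is the final.
    return "", syllable


def to_units(pinyin_seq: List[str]) -> List[str]:
    """Flatten a pinyin sequence into a sequence of initials and finals."""
    units: List[str] = []
    for syllable in pinyin_seq:
        initial, final = split_pinyin(syllable)
        units.extend(u for u in (initial, final) if u)
    return units
```

For example, `to_units(["ni", "hao"])` yields the unit sequence for the two syllables, and `PINYIN_TO_CHARS["ma"]` lists the candidate characters that a recognizer would have to disambiguate for a single pinyin.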

[0052] 1-3 When obtaining the speech annotation data, the f...
