MEMS (Micro-Electro-Mechanical System) gyroscope-assisted method for removing motion blur from star sensor images

A star sensor motion-deblurring technology, applied in image enhancement, image analysis, image data processing and related fields. It addresses the low accuracy of star point images, overcomes the influence of blur kernel accuracy on the result, improves accuracy, and has high practical value.

Examples

Specific Embodiment 1

[0037] Specific Embodiment 1: this embodiment is described with reference to figure 1. The specific process of the MEMS gyroscope-assisted method for removing motion blur from star sensor images in this embodiment is:

[0038] Step 1: acquire the motion-blurred starry sky image I_origin through the star sensor, and denoise I_origin by spatial filtering to obtain the image I_filtered;

[0039] Step 2: segment the stripes in image I_filtered by the threshold method, and calculate the center position coordinates of each segmented stripe;

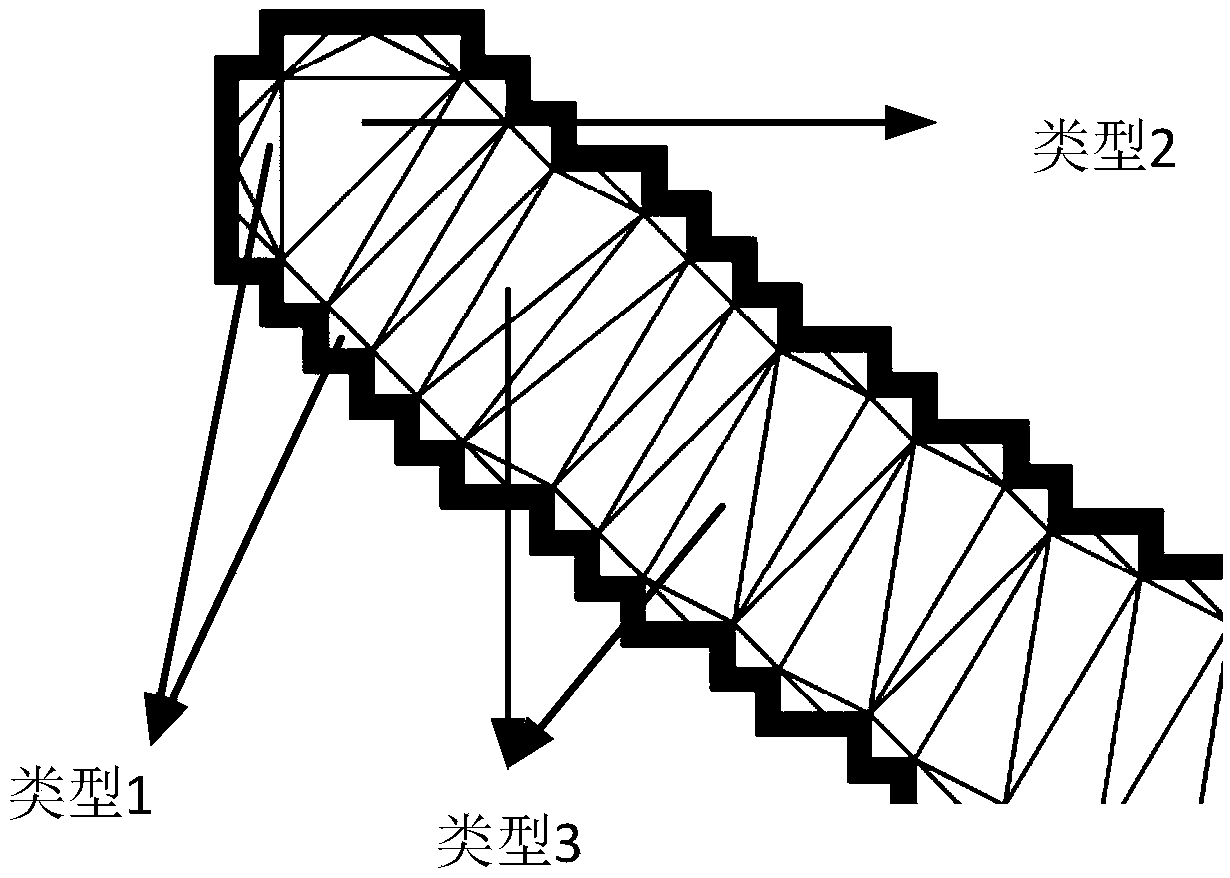

[0040] Step 3: read the angular velocity output by the MEMS gyroscope within the exposure time of the star sensor, and use the angular velocity and the center position coordinates of each stripe from Step 2 to calculate the motion trajectory of the star point along the stripe in image I_filtered within the exposure time of the star sensor. According to the trajectory of motion on the stripe, the b...
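Step 2 above (threshold segmentation followed by a per-stripe center calculation) can be illustrated with a minimal sketch. The source does not state how the center is computed, so the intensity-weighted centroid, the 8-connected grouping of pixels, the fixed `threshold` argument, and the function name `stripe_centers` are all assumptions made for illustration only.

```python
from collections import deque


def stripe_centers(image, threshold):
    """Return one (x_center, y_center) per connected bright stripe.

    image: list of rows of non-negative gray values.
    threshold: hypothetical fixed cut-off (must be >= 0); pixels strictly
    above it are treated as stripe pixels.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centers = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or image[r0][c0] <= threshold:
                continue
            # Breadth-first flood fill over 8-connected stripe pixels.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            weight_sum = x_sum = y_sum = 0.0
            while queue:
                r, c = queue.popleft()
                w = image[r][c]
                weight_sum += w
                x_sum += w * c  # x runs along columns
                y_sum += w * r  # y runs along rows
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and not seen[rr][cc]
                                and image[rr][cc] > threshold):
                            seen[rr][cc] = True
                            queue.append((rr, cc))
            # Assumed center definition: intensity-weighted centroid.
            centers.append((x_sum / weight_sum, y_sum / weight_sum))
    return centers


# Tiny synthetic frame with one horizontal and one vertical stripe.
demo = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 9, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 8, 0],
    [0, 0, 0, 0, 8, 0],
]
centers = stripe_centers(demo, threshold=5)
```

Here the coordinate origin is taken at the first row and first column of the image, with x increasing along columns and y along rows; a different convention only changes the bookkeeping, not the idea.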

Specific Embodiment 2

[0044] Specific Embodiment 2: this embodiment differs from Specific Embodiment 1 in that, in Step 1, the motion-blurred starry sky image I_origin is acquired through the star sensor and denoised by spatial filtering to obtain the denoised image I_filtered. The specific process is:

[0045] Step 1.1: construct a two-dimensional Gaussian filter template with a size of 5×5 and σ = 1.4:

[0046]

[0047] where σ is the standard deviation of the two-dimensional Gaussian filter template;

[0048] Step 1.2: convolve the acquired motion-blurred starry sky image I_origin with the filter template to obtain the denoised image; the calculation formula is:

[0049]

[0050] where I_origin is the motion-blurred starry sky image acquired by the star sensor, I_filtered is the denoised image, and the operator in the formula denotes the convolution operation.
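The two sub-steps of this embodiment (building a 5×5 Gaussian template with σ = 1.4, then convolving it with the blurred image) can be sketched as follows. The template formula itself is not reproduced in this text, so the sketch assumes a sampled continuous Gaussian normalized to unit sum; the edge-replication border handling and the function names `gaussian_template` and `convolve2d` are likewise assumptions, not details taken from the patent.

```python
import math


def gaussian_template(size=5, sigma=1.4):
    """Sample exp(-(x^2 + y^2) / (2 sigma^2)) on a size x size grid.

    The template is normalized so its entries sum to 1 (an assumption),
    which keeps the overall image brightness unchanged.
    """
    half = size // 2
    raw = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
            for x in range(-half, half + 1)]
           for y in range(-half, half + 1)]
    total = sum(sum(row) for row in raw)
    return [[v / total for v in row] for row in raw]


def convolve2d(image, template):
    """Convolve image with template; output has the same size as image.

    Borders are handled by replicating the nearest edge pixel (assumed).
    """
    rows, cols = len(image), len(image[0])
    size = len(template)
    half = size // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(size):
                for j in range(size):
                    # Convolution flips the template relative to the image;
                    # clamping the indices replicates the edge pixels.
                    rr = min(max(r + half - i, 0), rows - 1)
                    cc = min(max(c + half - j, 0), cols - 1)
                    acc += template[i][j] * image[rr][cc]
            out[r][c] = acc
    return out


template = gaussian_template()

# A single bright pixel in a dark 7 x 7 frame spreads into a Gaussian blob.
I_origin = [[0.0] * 7 for _ in range(7)]
I_origin[3][3] = 100.0
I_filtered = convolve2d(I_origin, template)
```

Because the Gaussian template is symmetric, the flip inside the convolution has no visible effect here; it is kept so the loop matches the definition of convolution rather than correlation.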

[0051] Other steps and parameters are...

Specific Embodiment 3

[0052] Specific Embodiment 3: this embodiment differs from Specific Embodiment 1 or 2 in that, in Step 2, the stripes in image I_filtered are segmented by the threshold method. The specific process is:

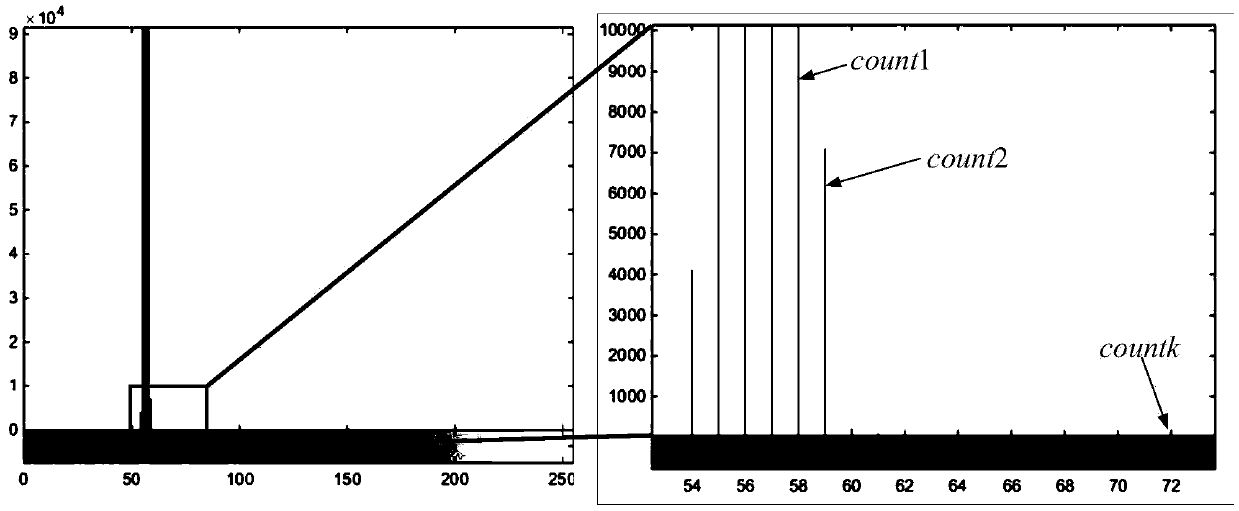

[0053] Step 2.1: build a brightness histogram of the denoised image by counting the number of pixels at each brightness level, recorded as count_i, i = 0, 1, 2, ..., 255; the histogram is shown in figure 2;

[0054] where i is the gray level of the image;

[0055] Step 2.2: calculate the difference d_count_i between the ratios of two adjacent count_i values; the calculation formula is:

[0056]

[0057] if d_count i threshold ;

[0058] Step 2.3: construct a binary image I_seg(x_img, y_img) of the same size as the denoised image I_filtered, and define the pixel coordinate system of image I_seg(x_img, y_img), whose origin is located at the position of the first row a...
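The pixel counting of Step 2.1 can be sketched in a few lines. This is only an illustration of the histogram count_i for i = 0, ..., 255; it assumes the denoised values are rounded to the nearest gray level and clipped to the 8-bit range, and the function name `brightness_histogram` is hypothetical.

```python
def brightness_histogram(image, levels=256):
    """Return count, where count[i] is the number of pixels at gray level i.

    Values are rounded and clipped to [0, levels - 1] (an assumption, since
    a filtered image is generally not integer-valued).
    """
    count = [0] * levels
    for row in image:
        for value in row:
            level = min(max(int(round(value)), 0), levels - 1)
            count[level] += 1
    return count


# Small demo: two dark pixels, two at level 12, two saturated.
demo = [
    [0, 0, 12.2],
    [255, 12, 300],
]
count = brightness_histogram(demo)
```

The sketch covers the counting only; the threshold selection from d_count_i in Step 2.2 depends on a formula that is not reproduced in this text.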
