Multi-task collaborative recognition method and system integrating visual and auditory perception

A recognition method using multi-task technology, applied in the field of multi-source heterogeneous data processing and recognition. It addresses three problems: deep neural network models are complex, they have a huge number of parameters, and network resources are difficult to configure rapidly and in a balanced way.


Examples

Embodiment 1

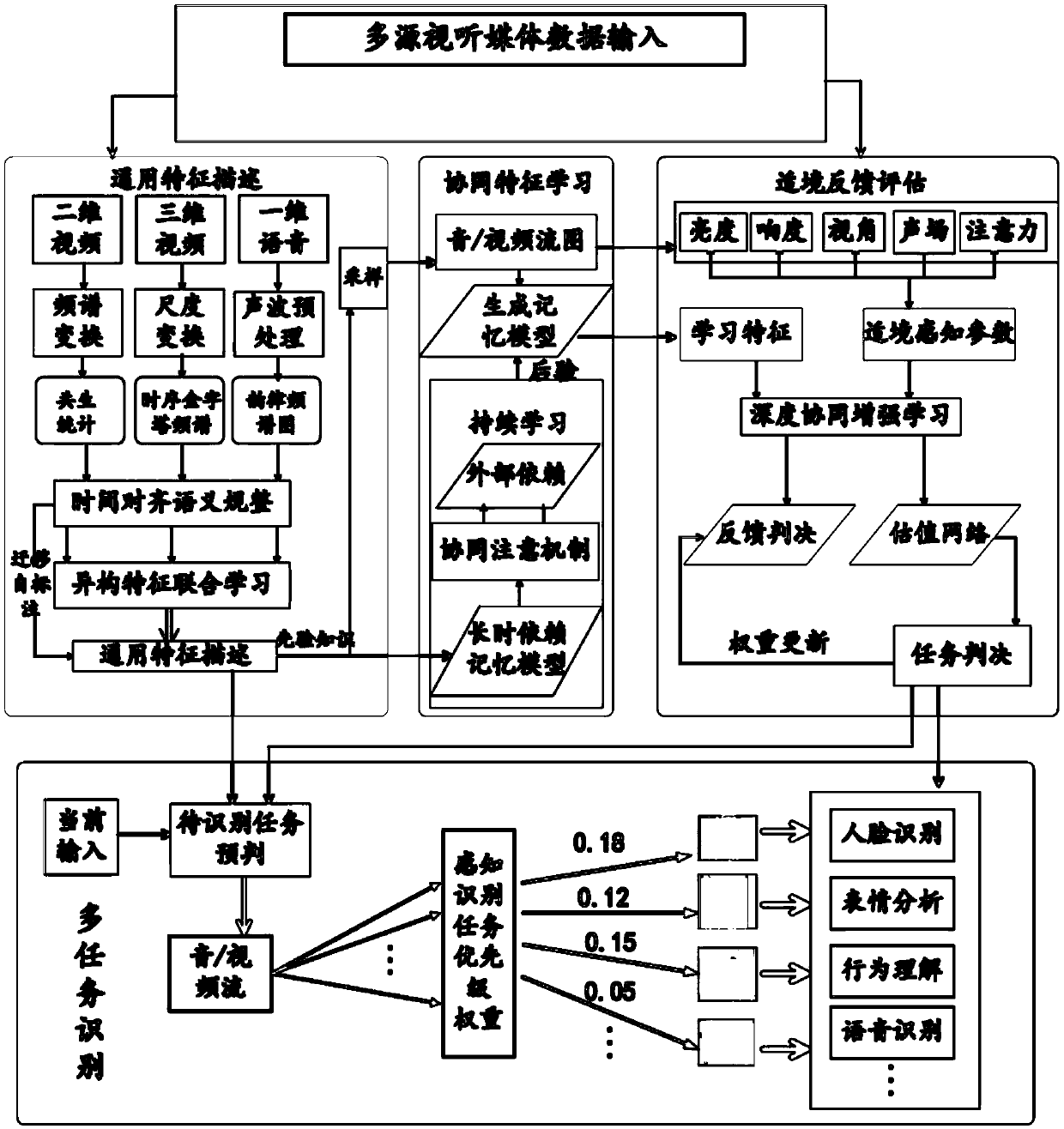

[0082] As shown in Figure 1, Embodiment 1 of the present invention provides a multi-task cooperative recognition method and system that integrates audio-visual perception.

[0083] The multi-task cooperative recognition system integrating audio-visual perception disclosed in Embodiment 1 of the present invention includes:

[0084] The general-purpose feature extraction module is used to establish a time-synchronous matching mechanism for multi-source heterogeneous data. It also builds a multi-source data association description model based on latent high-level shared semantics, so that data from different channels support and complement each other efficiently and data redundancy is removed as far as possible;
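The text does not say how the time-synchronous matching mechanism works. One simple way to picture it is nearest-timestamp matching with a tolerance. This assumes both streams carry timestamps on a shared clock, for example audio feature frames every 10 ms and video frames at 25 fps. The function `align_streams` and its `max_gap` parameter are hypothetical illustrations and are not taken from the patent.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def align_streams(
    audio_ts: List[float],
    video_ts: List[float],
    max_gap: float = 0.02,
) -> List[Tuple[int, Optional[int]]]:
    """Hypothetical sketch: pair each audio frame with the nearest video frame.

    Both timestamp lists are in seconds on a shared clock and sorted ascending.
    If the nearest video frame is more than max_gap seconds away, the audio
    frame is paired with None (no synchronous match).
    """
    pairs: List[Tuple[int, Optional[int]]] = []
    for i, t in enumerate(audio_ts):
        j = bisect_left(video_ts, t)
        # Candidates: the video frame just before t and the one at or after t
        candidates = [k for k in (j - 1, j) if 0 <= k < len(video_ts)]
        if not candidates:
            pairs.append((i, None))
            continue
        best = min(candidates, key=lambda k: abs(video_ts[k] - t))
        pairs.append((i, best if abs(video_ts[best] - t) <= max_gap else None))
    return pairs
```

Consider audio timestamps `[0.0, 0.01, 0.03, 0.05, 0.2]` and video timestamps `[0.0, 0.04, 0.08]`. For these inputs the function returns `[(0, 0), (1, 0), (2, 1), (3, 1), (4, None)]`. The last audio frame has no video frame within 20 ms, so it is left unmatched rather than forced onto a distant frame.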

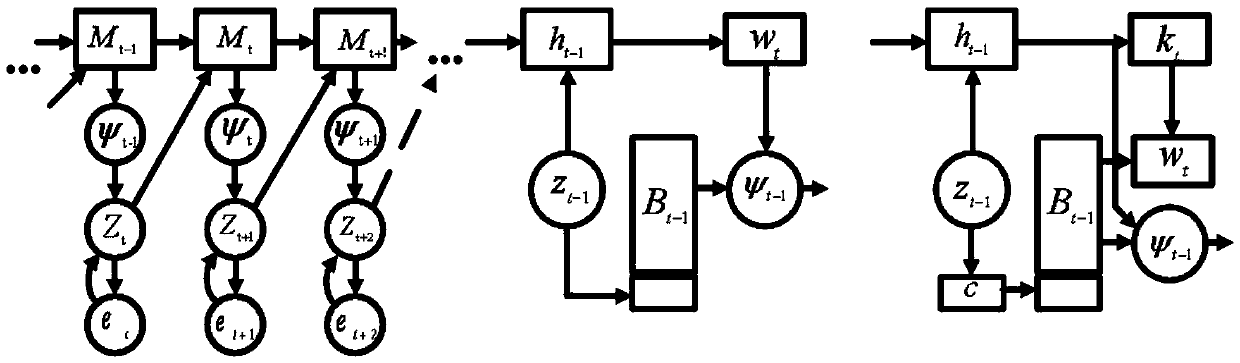

[0085] The deep collaborative feature learning module is used to establish a generative memory model with long-term dependencies and to explore a semi-supervised continuous learning system based on collaborative attention and deep autonomy, realizing dynamic self-learning with...
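The patent does not define "collaborative attention". As a rough illustration only, the sketch below uses plain scaled dot-product cross-attention in both directions: audio frames attend to visual frames, and visual frames attend to audio frames. It makes three assumptions. Both modalities have already been projected into a common feature dimension. No learned projection matrices are used. The names `cross_attention` and `co_attention` are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: each query row (one modality) is replaced
    by a softmax-weighted average of the value rows (the other modality)."""
    d = len(keys[0])  # assumes queries and keys share this dimension
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def co_attention(audio: Matrix, visual: Matrix) -> Tuple[Matrix, Matrix]:
    """Hypothetical symmetric co-attention: features are used directly as
    queries, keys and values (no learned projections)."""
    audio_ctx = cross_attention(audio, visual, visual)
    visual_ctx = cross_attention(visual, audio, audio)
    return audio_ctx, visual_ctx
```

Each output row is a convex combination of the other modality's rows. The result is therefore a context vector that summarizes the most similar frames in the other channel. In a trained model, learned query, key and value projections would normally be applied before the dot products.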

Embodiment 2

[0095] Embodiment 2 of the present invention provides a method for multi-task discrimination using the above-mentioned system. The method includes three parts. First, a general feature description of massive multi-source audio-visual media perception data, including establishing a time-synchronization matching mechanism for multi-source heterogeneous data and building a multi-source data association description model based on latent high-level shared semantics. Second, deep collaborative feature learning with long-term memory for continuously input streaming media data, including establishing a generative memory model with long-term dependencies and exploring a semi-supervised continuous learning system based on collaborative attention and deep autonomy. Third, an intelligent multi-task deep collaborative enhanced-feedback recognition model under an adaptive framework, including an adaptive sensing computing theory based on the cooperative work of agents, introducing adaptive deep collaborative enhanced feedback and mul...
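The text above is truncated before it explains how the adaptive framework balances several recognition tasks. One well-known way to adapt task weights during training is Dynamic Weight Averaging (DWA), proposed by Liu, Johns and Davison (2019) for multi-task learning. It is sketched here purely as a stand-in and is not the patent's method. For $K$ tasks with mean epoch losses $L_k$, DWA sets $w_k(t) = K \exp(r_k/T) / \sum_i \exp(r_i/T)$ with $r_k = L_k(t-1)/L_k(t-2)$. The function names below are hypothetical.

```python
import math
from typing import List, Sequence


def dwa_weights(loss_history: Sequence[Sequence[float]], num_tasks: int,
                temperature: float = 2.0) -> List[float]:
    """Dynamic Weight Averaging: loss_history[t][k] is the mean loss of task k
    in epoch t. Returns one weight per task; the weights sum to num_tasks."""
    if len(loss_history) < 2:
        # Not enough history yet: weight all tasks equally
        return [1.0] * num_tasks
    last, before = loss_history[-1], loss_history[-2]
    # A ratio near (or above) 1 means the task's loss is falling slowly,
    # so that task receives a larger weight
    ratios = [l1 / l2 for l1, l2 in zip(last, before)]
    exps = [math.exp(r / temperature) for r in ratios]
    total = sum(exps)
    return [num_tasks * e / total for e in exps]


def combined_loss(task_losses: Sequence[float], weights: Sequence[float]) -> float:
    # Weighted sum that a shared multi-task backbone would be trained to minimise
    return sum(w * l for w, l in zip(weights, task_losses))
```

Consider a loss history of `[[1.0, 1.0], [0.5, 1.0]]`. Task 0 halved its loss while task 1 stalled, so the weights come out at about 0.88 and 1.12. Training effort is then shifted toward the lagging task without adding any parameters.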

Embodiment 3

[0103] As shown in Figure 1, Embodiment 3 of the present invention provides a multi-task cooperative recognition method that integrates audio-visual perception.

[0104] First, a transfer algorithm is explored to establish a general feature description method for multi-source audiovisual media perception data.

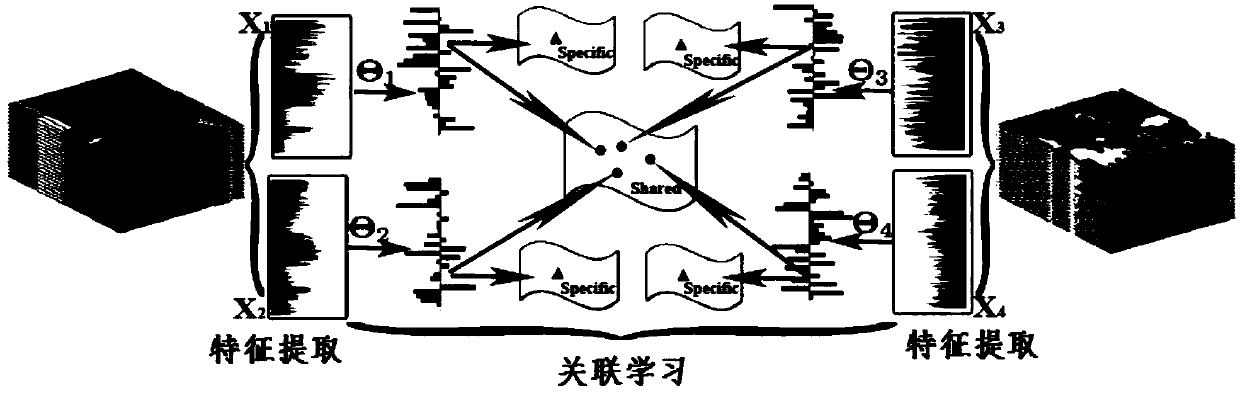

[0105] The goals are to achieve efficient collaborative analysis across different audiovisual tasks and to extract highly robust, general-purpose feature descriptions of multi-source audiovisual perception data. These descriptions serve as prototype features for subsequent collaborative learning. To reach these goals, the characteristics of the audiovisual perception data must first be analyzed. Most of the audio data actually acquired is a one-dimensional time series, and its descriptive information lies mainly in its spectro-temporal cues. It therefore needs to be described by a spectral transformation in the auditory perceptual domain combined with the prosodic information of adjacent audio frames. The visual perception data ...
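A common concrete form of "spectral transformation in the auditory perceptual domain" is the log-mel spectrum. The sketch below assumes that choice, but the patent does not name a specific transform. It approximates the use of "adjacent audio frames" by simply stacking each frame with its neighbours, which is a crude stand-in for real prosodic features such as pitch or energy contours. All function names and defaults are hypothetical. The tiny frame size and the naive DFT keep the example dependency-free; a realistic setup would use about 25 ms frames, a 10 ms hop and an FFT.

```python
import math
from typing import List

Matrix = List[List[float]]


def hz_to_mel(f: float) -> float:
    # HTK-style mel scale: roughly linear below 1 kHz, logarithmic above
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def frame_signal(x: List[float], frame_len: int, hop: int) -> Matrix:
    # Split the 1-D time series into overlapping frames (trailing samples dropped)
    return [x[s:s + frame_len] for s in range(0, len(x) - frame_len + 1, hop)]


def power_spectrum(frame: List[float]) -> List[float]:
    n = len(frame)
    # Hann window to reduce spectral leakage at the frame edges
    w = [v * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1))) for i, v in enumerate(frame)]
    spec = []
    for k in range(n // 2 + 1):  # naive O(n^2) DFT; a real system would use an FFT
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(w))
        im = -sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(w))
        spec.append((re * re + im * im) / n)
    return spec


def mel_filterbank(n_mels: int, n_fft: int, sr: int) -> Matrix:
    # Triangular filters spaced evenly on the mel scale between 0 Hz and sr/2
    top = hz_to_mel(sr / 2)
    mels = [i * top / (n_mels + 1) for i in range(n_mels + 2)]
    bins = [int((n_fft + 1) * mel_to_hz(m) / sr) for m in mels]
    bank = []
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        row = [0.0] * (n_fft // 2 + 1)
        for b in range(left, center):
            row[b] = (b - left) / (center - left)
        for b in range(center, right):
            row[b] = (right - b) / (right - center)
        bank.append(row)
    return bank


def log_mel_features(x: List[float], sr: int = 16000, frame_len: int = 64,
                     hop: int = 32, n_mels: int = 8) -> Matrix:
    bank = mel_filterbank(n_mels, frame_len, sr)
    feats = []
    for frame in frame_signal(x, frame_len, hop):
        spec = power_spectrum(frame)
        # Log energy per mel band; the epsilon avoids log(0) for empty bands
        feats.append([math.log(sum(f * s for f, s in zip(row, spec)) + 1e-10) for row in bank])
    return feats


def stack_context(feats: Matrix, k: int = 2) -> Matrix:
    # Concatenate each frame with its k left and k right neighbours,
    # repeating the edge frames at the boundaries
    T = len(feats)
    out = []
    for t in range(T):
        row: List[float] = []
        for o in range(-k, k + 1):
            row.extend(feats[min(max(t + o, 0), T - 1)])
        out.append(row)
    return out
```

With `k = 2` and 8 mel bands, each stacked vector has 40 values. It describes a frame together with its local temporal context, which is the kind of spectro-temporal cue the paragraph refers to.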


© 2025 PatSnap. All rights reserved.