WGAN model method based on a deep convolutional neural network

A technology based on deep convolutional neural networks, in the field of deep-learning neural networks. It addresses three problems: slow training speed, a discriminator that cannot indicate the direction in which the network should be trained, and a generator that fails to learn the characteristics of the data set. The result is a training process with a clear direction.


Embodiment

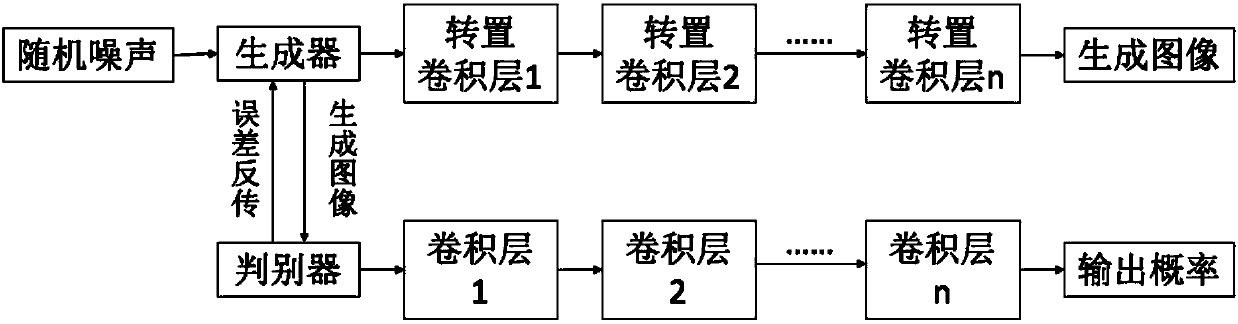

[0025] This embodiment discloses a WGAN model method based on a deep convolutional neural network, comprising the following steps:

[0026] Step S1, constructing a Wasserstein generative adversarial network (WGAN) model comprising a generator and a discriminator.
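As a rough illustration of Step S1, the two networks can be held in a single model object. The following is a minimal Python sketch, not the patent's implementation: the class name `WGAN`, its method names, and the representation of samples as flat lists of floats are all hypothetical. It follows the usual WGAN convention that the discriminator (often called the critic) outputs an unbounded real score rather than a probability.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Callable, Sequence

# Hypothetical representation: every sample (real, fake, or noise) is a flat list of floats.
Sample = list[float]


@dataclass
class WGAN:
    """Illustrative container for the two WGAN networks (names are assumptions)."""

    generator: Callable[[Sample], Sample]  # maps a noise vector z to a fake sample
    critic: Callable[[Sample], float]  # discriminator: unbounded real score, no sigmoid

    def generate(self, noise_batch: Sequence[Sample]) -> list[Sample]:
        """Run the generator on each noise vector."""
        return [self.generator(z) for z in noise_batch]

    def score_gap(self, real_batch: Sequence[Sample], noise_batch: Sequence[Sample]) -> float:
        """Mean critic score on real data minus mean critic score on generated data.

        For a 1-Lipschitz critic this gap is a lower bound on the Wasserstein-1
        distance between the two empirical distributions; training the critic
        pushes it up toward that distance.
        """
        fake_batch = self.generate(noise_batch)
        real_score = fmean(self.critic(x) for x in real_batch)
        fake_score = fmean(self.critic(x) for x in fake_batch)
        return real_score - fake_score


# Toy usage: shift-by-one generator and identity critic on 1-D samples.
model = WGAN(generator=lambda z: [z[0] + 1.0], critic=lambda x: x[0])
print(model.score_gap([[3.0], [5.0]], [[0.0], [2.0]]))  # prints 2.0
```

With these toy choices the identity critic is 1-Lipschitz, and the gap of 2.0 happens to equal the Wasserstein-1 distance between the empirical distributions {3, 5} and {1, 3}.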

[0027] Step S2, constructing the discriminator as a deep convolutional neural network;

[0028] The discriminator takes the form of a deep convolutional neural network divided into several layers. Each layer has its own convolution kernel, that is, its own weight parameters.
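Step S2 describes the discriminator as a stack of convolutional layers, each owning one kernel as its weight parameter. A minimal pure-Python sketch of such a forward pass follows. It assumes single-channel inputs, stride-1 "valid" convolutions (written as cross-correlation, as deep-learning frameworks do), LeakyReLU between layers, and averaging the last feature map into one score. None of these choices comes from the patent, and the function names are hypothetical; practical DCGAN-style critics usually use many channels and strided convolutions to downsample.

```python
Matrix = list[list[float]]


def conv2d_valid(x: Matrix, k: Matrix) -> Matrix:
    """Stride-1 'valid' 2-D convolution (cross-correlation form) of x with kernel k."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def leaky_relu(m: Matrix, slope: float = 0.2) -> Matrix:
    """Element-wise LeakyReLU."""
    return [[v if v > 0 else slope * v for v in row] for row in m]


def critic_forward(image: Matrix, kernels: list[Matrix]) -> tuple[float, list[tuple[int, int]]]:
    """Apply one convolution layer per kernel; return (score, shape after each layer).

    Each entry of `kernels` is the weight parameter of one layer.
    """
    h = image
    shapes = [(len(h), len(h[0]))]
    for idx, k in enumerate(kernels):
        h = conv2d_valid(h, k)
        if idx < len(kernels) - 1:  # no activation after the last layer
            h = leaky_relu(h)
        shapes.append((len(h), len(h[0])))
    # Average the final feature map into a single unbounded score (no sigmoid).
    score = sum(sum(row) for row in h) / (len(h) * len(h[0]))
    return score, shapes


def ones(n: int) -> Matrix:
    """n x n matrix of ones, used for the toy example."""
    return [[1.0] * n for _ in range(n)]


# Toy usage: a 5x5 image of ones through three layers of 2x2 all-ones kernels.
score, shapes = critic_forward(ones(5), [ones(2), ones(2), ones(2)])
print(shapes)  # [(5, 5), (4, 4), (3, 3), (2, 2)]
print(score)  # 64.0
```

Each layer shrinks the feature map by one row and column here only because of the stride-1, no-padding assumption; with strided kernels the shapes would shrink faster.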

[0029] Step S3, constructing the generator as a transposed convolutional neural network;

[0030] The generator has the same number of convolutional layers as the discriminator, and each of its convolution kernels is the transpose of the corresponding discriminator kernel.
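One plausible reading of Step S3 is that each generator layer applies the transposed convolution of a discriminator kernel, so the generator mirrors the discriminator layer by layer in reverse order and enlarges the feature map where the discriminator shrank it. The sketch below illustrates that reading under the same single-channel, stride-1 assumptions. The function names are hypothetical, and passing the discriminator's kernels to the generator is done only to make the correspondence visible; the excerpt does not say whether weights are shared. The final check confirms the defining property: transposed convolution is the adjoint of convolution, so `<conv(x, k), y> == <x, conv_transpose(y, k)>`.

```python
Matrix = list[list[float]]


def conv2d_valid(x: Matrix, k: Matrix) -> Matrix:
    """Stride-1 'valid' 2-D convolution (cross-correlation form), as in the discriminator."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [
        [
            sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def conv2d_transpose(y: Matrix, k: Matrix) -> Matrix:
    """Stride-1 transposed convolution: the adjoint of conv2d_valid for the same kernel k."""
    kh, kw = len(k), len(k[0])
    out = [[0.0] * (len(y[0]) + kw - 1) for _ in range(len(y) + kh - 1)]
    for i, row in enumerate(y):
        for j, v in enumerate(row):
            for a in range(kh):
                for b in range(kw):
                    out[i + a][j + b] += v * k[a][b]  # scatter v through the kernel
    return out


def generator_forward(z: Matrix, discriminator_kernels: list[Matrix]) -> Matrix:
    """Same number of layers as the discriminator, kernels visited in reverse order."""
    h = z
    for k in reversed(discriminator_kernels):
        h = conv2d_transpose(h, k)
    return h


def inner(a: Matrix, b: Matrix) -> float:
    """Frobenius inner product of two equally shaped matrices."""
    return sum(u * v for row_a, row_b in zip(a, b) for u, v in zip(row_a, row_b))


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k = [[1.0, 2.0], [3.0, 4.0]]
y = [[1.0, -1.0], [2.0, 0.0]]
print(inner(conv2d_valid(x, k), y), inner(x, conv2d_transpose(y, k)))  # prints 124.0 124.0

# A 2x2 "latent" map grows to 5x5 through three 2x2 transposed layers.
fake = generator_forward([[1.0, 0.0], [0.0, 1.0]], [k, k, k])
print(len(fake), len(fake[0]))  # prints 5 5
```

In a framework such as PyTorch the same role is played by a dedicated transposed-convolution layer with its own trainable weights; the hand-written loop here only makes the scatter-and-add structure explicit.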

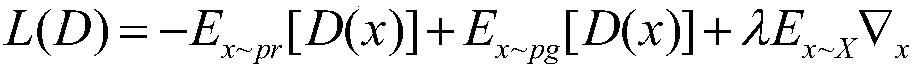

[0031] Step S4, adopting the loss function of the Wasserstein distan...
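The excerpt breaks off at Step S4, so the exact loss used in this embodiment is not visible here. For orientation only, the original WGAN formulation (Arjovsky, Chintala and Bottou, 2017) defines the Wasserstein-1 distance through Kantorovich-Rubinstein duality and trains a critic $D_w$ and a generator $G_\theta$ with the losses below. Whether this patent uses exactly these losses, and whether it enforces the Lipschitz constraint by weight clipping (as the original paper does) or by another method, is an assumption.

```latex
W(\mathbb{P}_r, \mathbb{P}_g) = \sup_{\|f\|_L \le 1} \Big( \mathbb{E}_{x \sim \mathbb{P}_r}[f(x)] - \mathbb{E}_{\tilde{x} \sim \mathbb{P}_g}[f(\tilde{x})] \Big)

\mathcal{L}_D = \mathbb{E}_{z \sim p(z)}\big[D_w(G_\theta(z))\big] - \mathbb{E}_{x \sim \mathbb{P}_r}\big[D_w(x)\big],
\qquad
\mathcal{L}_G = -\,\mathbb{E}_{z \sim p(z)}\big[D_w(G_\theta(z))\big]

w \leftarrow \operatorname{clip}(w, -c, c) \quad \text{after each critic update (original WGAN)}
```

Minimizing $\mathcal{L}_D$ maximizes the gap between the critic's mean score on real and generated samples, which is the quantity the critic estimates of $W(\mathbb{P}_r, \mathbb{P}_g)$; minimizing $\mathcal{L}_G$ moves generated samples toward higher critic scores.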
