Neural-network-based intelligent motion detection and control method for interactive robots

A neural-network-based robot intelligence technique applied to behavior detection and motion control for educational interactive robots. It addresses problems such as failed behavior analysis, false detections, and detection errors that affect execution results, and it achieves high accuracy, low computational cost, and high sensitivity.

Embodiment Construction

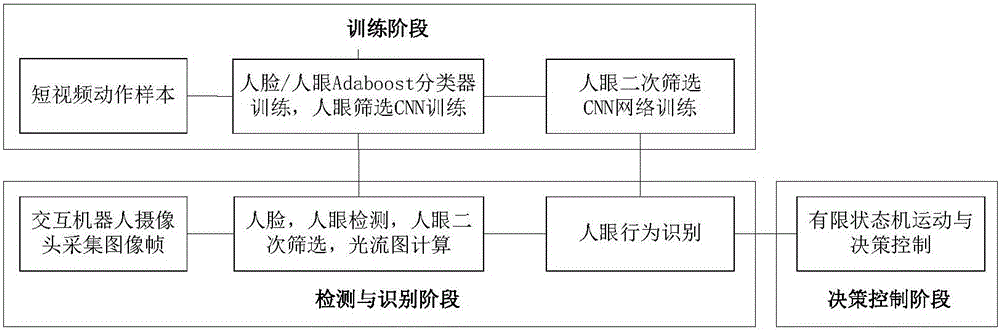

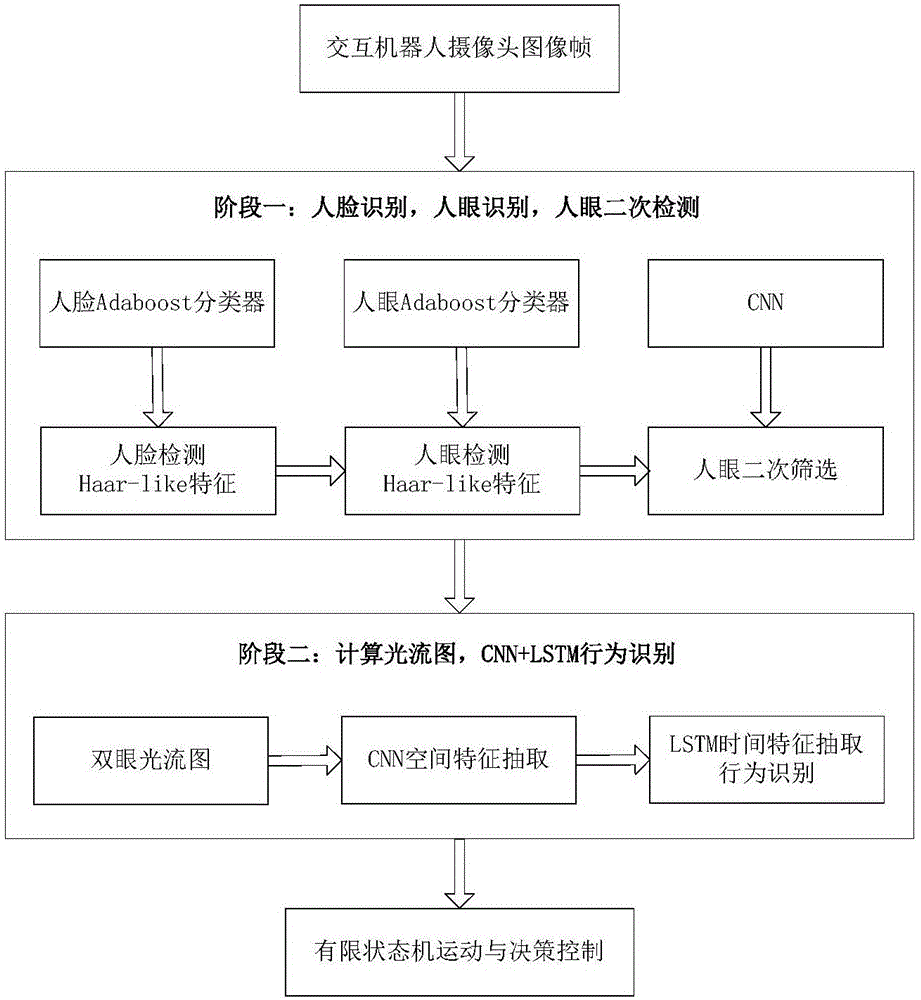

[0019] The present invention is further described below with reference to Figures 1-4.

[0020] The neural-network-based intelligent motion detection and control method for interactive robots of the present invention comprises the following steps:

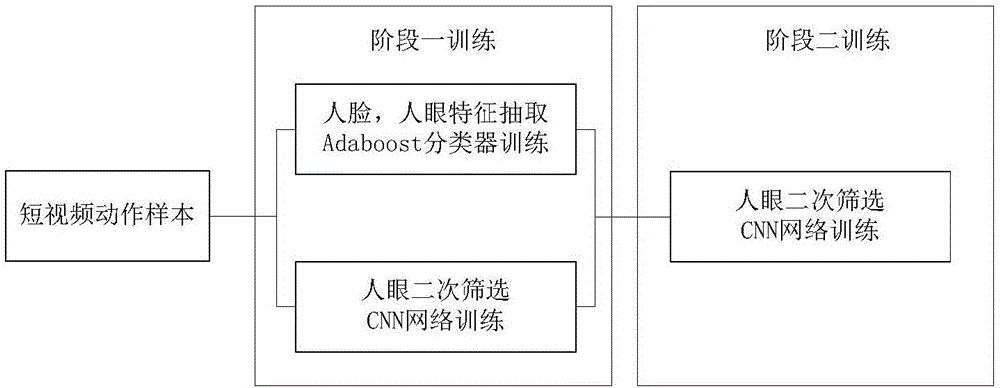

[0021] Step 1. Preprocessing. As shown in Figure 2, the interactive robot's camera is used to collect short videos of the eye movements of the interacting person. Each video is 2 seconds long. The eye movements fall into three types: moving to the left, moving to the right, and returning to looking straight ahead. To ensure the robustness of the system, samples are collected from as many different interacting people as possible, against different backgrounds.
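The sample set described in Step 1 can be sketched as a small labeled record type. This is only an illustration under stated assumptions: the names `EyeMovement`, `ClipSample`, and `coverage`, along with the `path`, `person_id`, and `background` fields, are hypothetical and do not come from the patent text. Only the three movement classes and the 2-second clip length are taken from the description.

```python
from dataclasses import dataclass
from enum import Enum


class EyeMovement(Enum):
    """The three eye-movement classes named in Step 1."""
    LEFT = "left"
    RIGHT = "right"
    STRAIGHT = "straight"  # returning to looking straight ahead


CLIP_SECONDS = 2.0  # clip length stated in Step 1


@dataclass(frozen=True)
class ClipSample:
    path: str          # hypothetical: where the clip is stored
    person_id: str     # hypothetical: identifies the interacting person
    background: str    # hypothetical: tag for the recording background
    label: EyeMovement
    duration_s: float = CLIP_SECONDS


def coverage(samples):
    """Per label, count distinct people and distinct backgrounds.

    A rough way to check the diversity that Step 1 asks for.
    """
    people = {m: set() for m in EyeMovement}
    scenes = {m: set() for m in EyeMovement}
    for s in samples:
        people[s.label].add(s.person_id)
        scenes[s.label].add(s.background)
    return {m: (len(people[m]), len(scenes[m])) for m in EyeMovement}
```

A label whose counts stay low under `coverage` would suggest that more people or backgrounds should be recorded for that movement before training.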

[0022] Step 2. Phase one training. As shown in Figure 2, for the short video action samples collected above, one video frame is extracted every 5 frames, and the bounding boxes (calibration frames) for the face and eye positions are manually annotated to generate human face and human eye photo...
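The frame sampling in Step 2 can be sketched as a simple index selection. This is a minimal sketch that reads "every 5 frames" as keeping one frame out of each group of five, starting from the first. The function name `sampled_frame_indices` and the 30 fps frame rate are assumptions for illustration; the patent text does not state a frame rate.

```python
def sampled_frame_indices(total_frames: int, step: int = 5) -> list[int]:
    """Return the indices of frames kept when taking one frame every `step` frames."""
    if total_frames < 0 or step <= 0:
        raise ValueError("total_frames must be >= 0 and step must be > 0")
    return list(range(0, total_frames, step))


ASSUMED_FPS = 30          # assumption: not given in the source
CLIP_SECONDS = 2          # clip length stated in Step 1

# Frames that would be handed to manual face/eye annotation for one clip.
indices = sampled_frame_indices(CLIP_SECONDS * ASSUMED_FPS)
```

Under the assumed 30 fps, a 2-second clip has 60 frames, so this selection keeps 12 frames per clip for annotation. A different camera frame rate changes that count proportionally.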
