Convolutional neural network-based target tracking method and apparatus

This convolutional neural network and target tracking technology is applied in image data processing, instruments, computing, and related fields. It addresses the inability of existing approaches to distinguish different individuals, their low robustness, and their limited tracking performance. Its stated effects are distinguishing different individuals within the same class of object, improving computational efficiency, and avoiding overfitting.

Embodiment Construction

[0028] To further illustrate the embodiments, the present invention is provided with accompanying drawings. These drawings form part of the disclosure of the present invention. They mainly illustrate the embodiments and, together with the related description in the specification, explain the operating principles of the embodiments. With reference to this material, those skilled in the art should be able to understand other possible implementations and the advantages of the present invention. The present invention is further described below with reference to the accompanying drawings and specific embodiments.

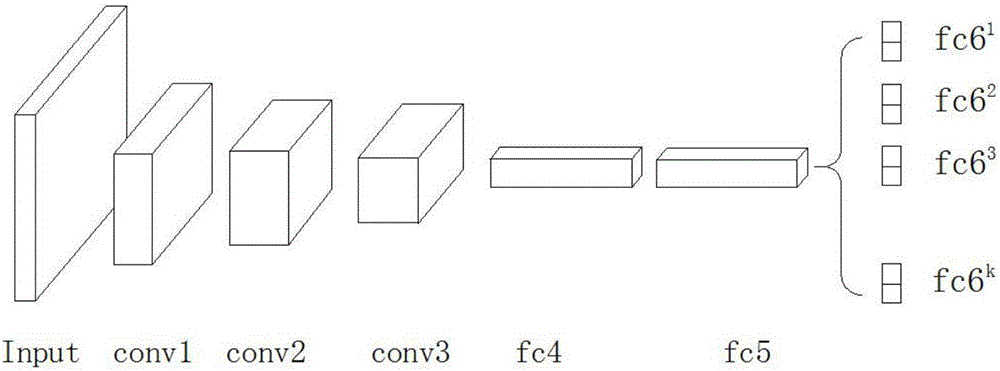

[0029] Under different environmental conditions, such as changes in lighting, blur caused by motion, or changes in scale, the texture features of an object still show some commonality, whereas they differ between different objects. Therefore, the convolutional neural network designed by an embodiment of the present invention includes two parts, which ar...
