Multimodal recurrent neural network image description method based on FCN feature extraction

A technique in the field of recurrent neural networks and image description, classified under neural learning methods, biological neural network models, neural architectures, etc. It addresses problems such as loss and the inability to generate more complete image descriptions.


Examples

Embodiment 1

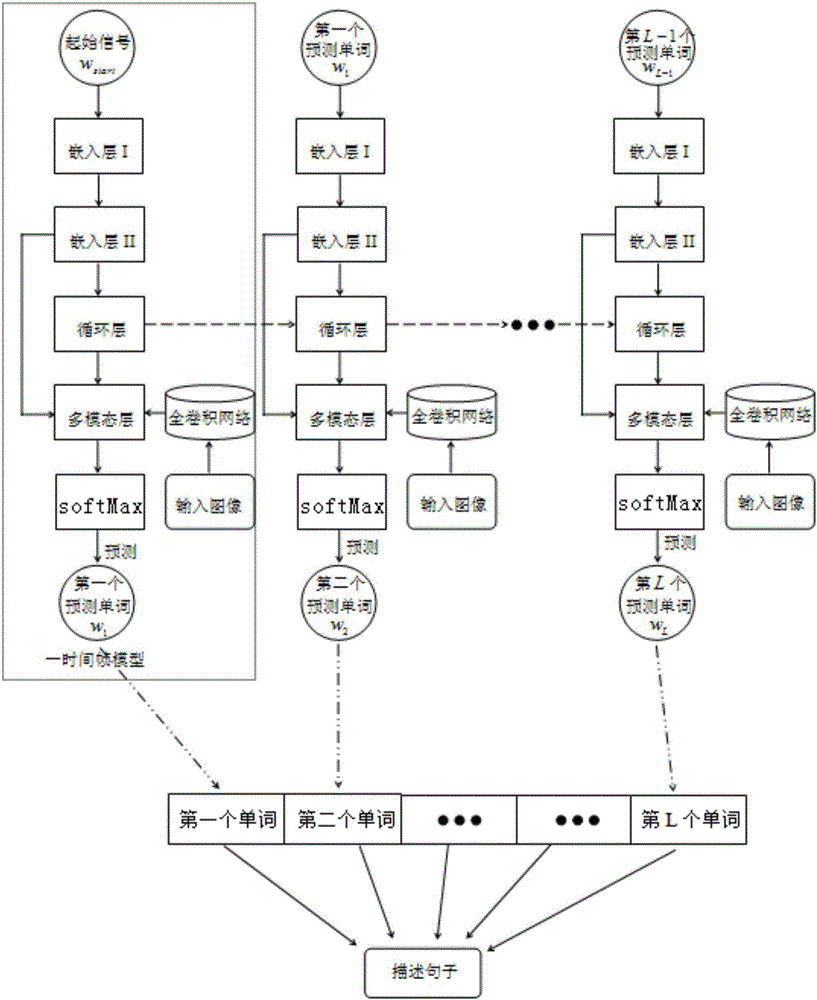

[0049] As shown in Figures 1-2, a multimodal recurrent neural network image description method based on FCN feature extraction includes the following steps:

[0050] S1 Construction and training of the fully convolutional network (FCN)

[0051] S1.1 Acquire images: Download the PASCAL VOC dataset from the Internet; it is a standard benchmark dataset for image recognition and image classification. Use this dataset to fine-tune and test the model;

[0052] S1.2 Adapt the existing pre-trained convolutional neural network model AlexNet to obtain a preliminary fully convolutional network model;

[0053] S1.3 Remove the classification layer of the AlexNet convolutional neural network, and convert the fully connected layers into convolutional layers;

[0054] S1.4 Perform 2x upsampling on the convolution output of the topmost pooling layer (pooling layer 5) to obtain an upsampled prediction for pooling layer 5; this prediction carries only coarse image information. Th...
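Steps S1.3 and S1.4 above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patent's implementation: the helper names (`fc_forward`, `fc_to_conv_kernels`, `conv_forward`, `upsample_2x_nearest`) are hypothetical, the layer sizes are toy values rather than AlexNet's, and nearest-neighbor interpolation stands in for the upsampling operator, which the text above does not specify.

```python
import random


def fc_forward(weights, bias, feature_map):
    """Fully connected layer applied to a flattened C x H x W feature map."""
    flat = [v for channel in feature_map for row in channel for v in row]
    return [sum(w * x for w, x in zip(w_row, flat)) + b
            for w_row, b in zip(weights, bias)]


def fc_to_conv_kernels(weights, channels, height, width):
    """Reshape each FC weight row into one C x H x W convolution kernel."""
    kernels = []
    for w_row in weights:
        it = iter(w_row)  # consumed in channel, row, column order
        kernels.append([[[next(it) for _ in range(width)]
                         for _ in range(height)]
                        for _ in range(channels)])
    return kernels


def conv_forward(kernels, bias, feature_map):
    """Stride-1 convolution without padding (cross-correlation form)."""
    in_c = len(feature_map)
    in_h, in_w = len(feature_map[0]), len(feature_map[0][0])
    k_h, k_w = len(kernels[0][0]), len(kernels[0][0][0])
    out = []
    for kernel, b in zip(kernels, bias):
        plane = []
        for i in range(in_h - k_h + 1):
            row = []
            for j in range(in_w - k_w + 1):
                acc = b
                for ch in range(in_c):
                    for di in range(k_h):
                        for dj in range(k_w):
                            acc += (kernel[ch][di][dj]
                                    * feature_map[ch][i + di][j + dj])
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out


def upsample_2x_nearest(score_map):
    """2x nearest-neighbor upsampling of a 2-D score map (assumed operator)."""
    out = []
    for row in score_map:
        wide = [v for v in row for _ in range(2)]  # duplicate each column
        out.append(wide)
        out.append(list(wide))                     # duplicate each row
    return out


# Toy sizes (assumptions for illustration only).
random.seed(0)
C, H, W, OUT = 2, 3, 3, 4
weights = [[random.uniform(-1, 1) for _ in range(C * H * W)] for _ in range(OUT)]
bias = [random.uniform(-1, 1) for _ in range(OUT)]
kernels = fc_to_conv_kernels(weights, C, H, W)

# A larger input now yields a spatial map of scores instead of one vector.
larger_input = [[[random.uniform(-1, 1) for _ in range(5)]
                 for _ in range(4)] for _ in range(C)]
score_maps = conv_forward(kernels, bias, larger_input)
upsampled = [upsample_2x_nearest(plane) for plane in score_maps]
```

On an input whose size matches the original fully connected layer, `conv_forward` returns a 1 x 1 map per output that equals the `fc_forward` result up to floating-point rounding; on a larger input it returns a grid of such predictions, which is the property that lets the converted network produce dense, per-location output. FCN implementations commonly replace the nearest-neighbor step with a learned transposed convolution.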
