Sensor data-based deep learning step detection method

A deep learning detection method, applied in the field of computer vision, that addresses the difficulty of visualizing and interpreting data and the difficulty of training network models.

Embodiment Construction

[0026] It should be noted that, in the case of no conflict, the embodiments in the present application and the features in the embodiments can be combined with each other. The present invention will be further described in detail below in conjunction with the drawings and specific embodiments.

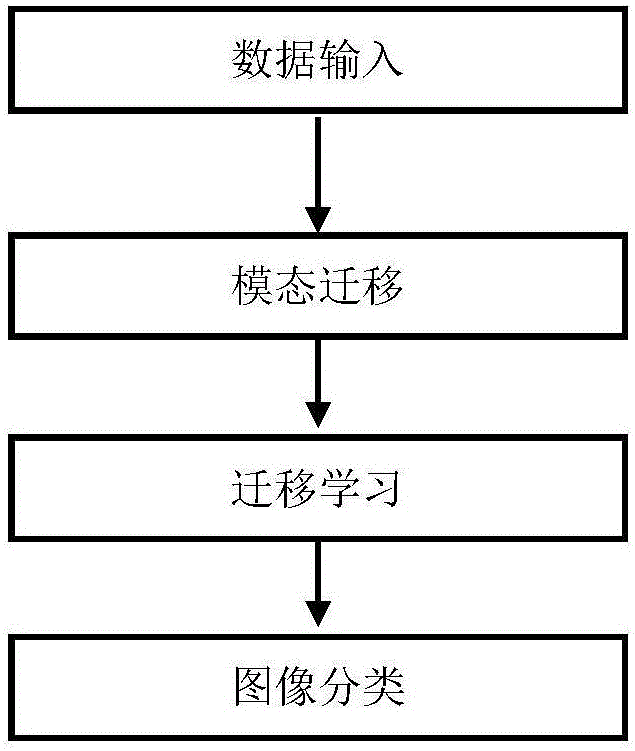

[0027] Figure 1 is a system flowchart of the sensor data-based deep learning footstep detection method of the present invention. The method mainly comprises four stages: data input, modality transfer, transfer learning, and image classification.
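The ordering of these stages can be illustrated with a minimal sketch. It assumes, since the text here does not say so, that modality transfer means rendering raw pressure-sensor frames as an image so that an image classifier can consume them. The function names `modality_transfer` and `detect_person`, the peak-pressure reduction, and the `classifier` callable (standing in for the transfer-learned network) are all hypothetical and are not taken from the patent.

```python
import numpy as np


def modality_transfer(frames: np.ndarray) -> np.ndarray:
    """Assumed modality transfer: turn (T, H, W) pressure frames into an
    H x W x 3 uint8 image. The peak-pressure reduction is an illustrative
    choice, not the patent's stated procedure."""
    peak = frames.max(axis=0)                  # peak pressure per sensor cell
    span = peak.max() - peak.min()
    if span > 0:
        scaled = (peak - peak.min()) / span    # normalize to [0, 1]
    else:
        scaled = np.zeros(peak.shape, dtype=float)
    gray = np.round(scaled * 255).astype(np.uint8)
    # Replicate the gray channel so the image has three channels,
    # as networks pretrained on color images usually expect.
    return np.stack([gray, gray, gray], axis=-1)


def detect_person(frames: np.ndarray, classifier) -> int:
    """Data input -> modality transfer -> image classification.
    `classifier` is a placeholder for a transfer-learned CNN."""
    image = modality_transfer(frames)
    return classifier(image)
```

Passing the classifier in as a callable keeps the sketch independent of any particular deep learning framework; a real system would supply a pretrained network fine-tuned on the footstep images.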

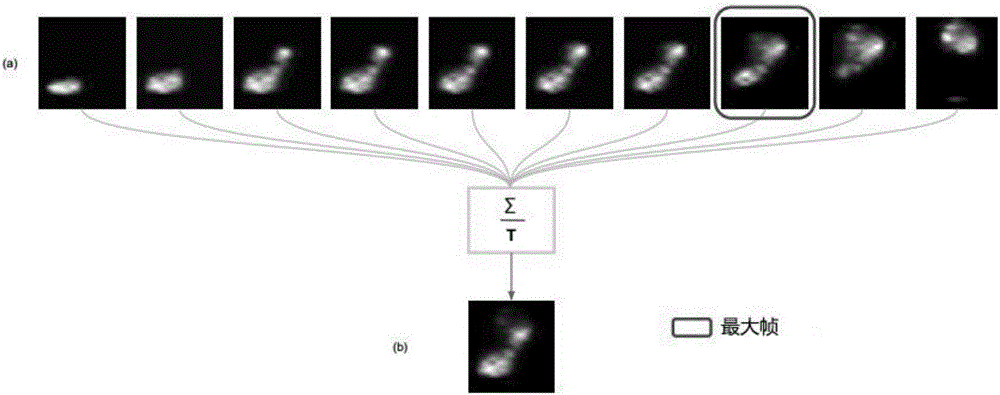

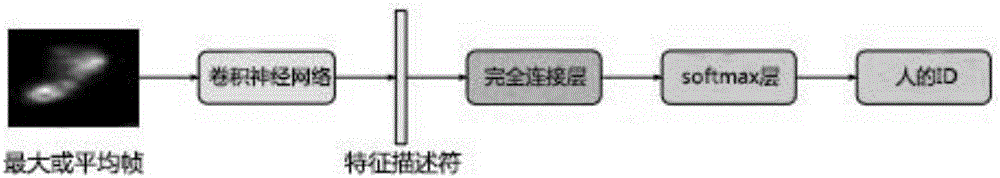

[0028] In the data input stage, footstep data obtained from people walking on a pressure-sensitive matrix is selected as the gait data set. The data set consists of footstep samples from 13 people; each person records 2-3 footsteps in each walking sequence, with a minimum of 12 samples recorded per person. Each walking sequence is a separate data sequence labeled with a specific person’s ID, which defines the class label for the convolutional neura...
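The labeling scheme described so far can be sketched as follows. This is a minimal illustration, assuming that each footstep counts as one sample and that class indices are assigned by sorting the person IDs. The names `WalkingSequence`, `build_samples`, and `underrepresented` are hypothetical and do not come from the patent.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class WalkingSequence:
    person_id: str          # ID of the person who walked this sequence
    footsteps: List[Any]    # 2-3 footstep recordings per sequence


def build_samples(
    sequences: List[WalkingSequence],
) -> Tuple[List[Tuple[Any, int]], Dict[str, int]]:
    """Flatten sequences into (footstep, class_index) pairs.
    The class index is derived from the person ID (assumed: sorted order)."""
    ids = sorted({seq.person_id for seq in sequences})
    class_of = {pid: index for index, pid in enumerate(ids)}
    samples = [
        (step, class_of[seq.person_id])
        for seq in sequences
        for step in seq.footsteps
    ]
    return samples, class_of


def underrepresented(
    samples: List[Tuple[Any, int]],
    class_of: Dict[str, int],
    minimum: int = 12,
) -> List[str]:
    """Return person IDs with fewer than `minimum` footstep samples."""
    counts = Counter(label for _, label in samples)
    return [pid for pid, index in class_of.items() if counts[index] < minimum]
```

A check like `underrepresented` makes the stated minimum of 12 samples per person explicit before any training is attempted.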

