RGB-D visual odometry considering ground constraints in indoor environments

This technology relates to visual odometry in indoor environments and is applied in the field of autonomous positioning of mobile robots. It addresses problems such as slow algorithm speed, a strong influence on the results, and difficulty in meeting the odometer's real-time output requirements, and it improves both estimation accuracy and speed.

Specific Embodiments

[0051] The present invention will be further described below in conjunction with the accompanying drawings.

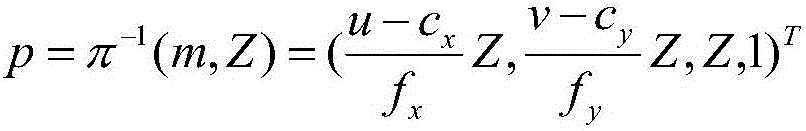

[0052] The invention discloses an RGB-D visual odometry method that considers ground constraints in indoor environments. An RGB-D camera serves as the sensor input device for the visual odometry function. The method comprises the following steps: ORB feature extraction and matching; ground plane detection and addition of the ground constraint; RANSAC-based motion transformation estimation; construction of a motion transformation error evaluation function to evaluate the estimated motion transformation; and output of the odometry result. The invention applies the ORB algorithm to feature extraction and matching on the color images, which speeds up feature detection while still meeting the accuracy requirements. It uses the depth image to detect the ground in the point cloud and corrects the pose transformation output by the visual odometer using the ground plane constraint, improving estimated a...
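The excerpt does not state the exact form of the ground constraint. As a minimal sketch, assume the ground is the dominant plane in the depth point cloud and that a motion estimate maps previous-frame points to the current frame as p_curr = R p_prev + t. The ground can then be detected with RANSAC plane fitting, and a motion estimate can be checked against it: a plane (n, d) with n·p + d = 0 transforms to (R n, d - (R n)·t), and any mismatch with the plane observed in the current frame indicates pose error. The function names `fit_plane_ransac` and `ground_constraint_error`, the thresholds, and the choice of error terms are hypothetical illustrations, not the patented method.

```python
import numpy as np


def fit_plane_ransac(points, iters=200, threshold=0.01, rng=None):
    """Fit a plane n.p + d = 0 to an (N, 3) point cloud with RANSAC.

    Returns (normal, d, inlier_mask). The normal is unit length and oriented
    so that d >= 0, i.e. the camera origin lies on the positive side.
    Thresholds are illustrative assumptions (metres).
    """
    rng = np.random.default_rng() if rng is None else rng
    best_mask, best_count = None, -1
    for _ in range(iters):
        # Minimal sample: three distinct points define a candidate plane.
        sample = points[rng.choice(len(points), 3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # degenerate (collinear) sample, skip it
            continue
        normal = normal / norm
        d = -normal @ sample[0]
        mask = np.abs(points @ normal + d) < threshold
        count = int(mask.sum())
        if count > best_count:
            best_mask, best_count = mask, count
    if best_mask is None:
        raise ValueError("no non-degenerate sample found")
    # Refine with a least-squares fit on the inliers: the plane normal is the
    # right singular vector with the smallest singular value.
    inliers = points[best_mask]
    centroid = inliers.mean(axis=0)
    _, _, vt = np.linalg.svd(inliers - centroid, full_matrices=False)
    normal = vt[-1]
    d = -normal @ centroid
    if d < 0:  # orient consistently between frames
        normal, d = -normal, -d
    return normal, d, best_mask


def ground_constraint_error(R, t, plane_prev, plane_curr):
    """Disagreement between the ground plane predicted by a motion (R, t)
    and the ground plane observed in the current frame.

    Assumed convention: p_curr = R @ p_prev + t.
    Returns (normal angle in radians, absolute offset difference).
    """
    n_prev, d_prev = plane_prev
    n_curr, d_curr = plane_curr
    n_pred = R @ n_prev
    d_pred = d_prev - n_pred @ t
    angle = np.arccos(np.clip(n_pred @ n_curr, -1.0, 1.0))
    return angle, abs(d_pred - d_curr)
```

Such an error could, for example, be added to the score of each RANSAC motion hypothesis, or used to reject estimates whose implied camera height or tilt relative to the floor changes implausibly between frames. Both uses are assumptions for illustration. With camera coordinates where y points down, a floor 1.2 m below the camera appears as the plane with normal (0, -1, 0) and d = 1.2.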
